Alberto Montes

The 2019 DAVIS Challenge on VOS: Unsupervised Multi-Object Segmentation

May 02, 2019

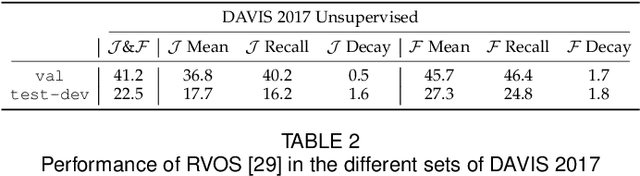

Abstract:We present the 2019 DAVIS Challenge on Video Object Segmentation, the third edition of the DAVIS Challenge series, a public competition designed for the task of Video Object Segmentation (VOS). In addition to the original semi-supervised track and the interactive track introduced in the previous edition, a new unsupervised multi-object track will be featured this year. In the newly introduced track, participants are asked to provide non-overlapping object proposals on each image, along with an identifier linking them between frames (i.e. video object proposals), without any test-time human supervision (no scribbles or masks provided on the test video). In order to do so, we have re-annotated the train and val sets of DAVIS 2017 in a concise way that facilitates the unsupervised track, and created new test-dev and test-challenge sets for the competition. Definitions, rules, and evaluation metrics for the unsupervised track are described in detail in this paper.

Blazingly Fast Video Object Segmentation with Pixel-Wise Metric Learning

Apr 09, 2018

Abstract:This paper tackles the problem of video object segmentation, given some user annotation which indicates the object of interest. The problem is formulated as pixel-wise retrieval in a learned embedding space: we embed pixels of the same object instance into the vicinity of each other, using a fully convolutional network trained by a modified triplet loss as the embedding model. Then the annotated pixels are set as reference and the rest of the pixels are classified using a nearest-neighbor approach. The proposed method supports different kinds of user input such as segmentation mask in the first frame (semi-supervised scenario), or a sparse set of clicked points (interactive scenario). In the semi-supervised scenario, we achieve results competitive with the state of the art but at a fraction of computation cost (275 milliseconds per frame). In the interactive scenario where the user is able to refine their input iteratively, the proposed method provides instant response to each input, and reaches comparable quality to competing methods with much less interaction.

The 2018 DAVIS Challenge on Video Object Segmentation

Mar 27, 2018

Abstract:We present the 2018 DAVIS Challenge on Video Object Segmentation, a public competition specifically designed for the task of video object segmentation. It builds upon the DAVIS 2017 dataset, which was presented in the previous edition of the DAVIS Challenge, and added 100 videos with multiple objects per sequence to the original DAVIS 2016 dataset. Motivated by the analysis of the results of the 2017 edition, the main track of the competition will be the same than in the previous edition (segmentation given the full mask of the objects in the first frame -- semi-supervised scenario). This edition, however, also adds an interactive segmentation teaser track, where the participants will interact with a web service simulating the input of a human that provides scribbles to iteratively improve the result.

Temporal Activity Detection in Untrimmed Videos with Recurrent Neural Networks

Mar 02, 2017

Abstract:This thesis explore different approaches using Convolutional and Recurrent Neural Networks to classify and temporally localize activities on videos, furthermore an implementation to achieve it has been proposed. As the first step, features have been extracted from video frames using an state of the art 3D Convolutional Neural Network. This features are fed in a recurrent neural network that solves the activity classification and temporally location tasks in a simple and flexible way. Different architectures and configurations have been tested in order to achieve the best performance and learning of the video dataset provided. In addition it has been studied different kind of post processing over the trained network's output to achieve a better results on the temporally localization of activities on the videos. The results provided by the neural network developed in this thesis have been submitted to the ActivityNet Challenge 2016 of the CVPR, achieving competitive results using a simple and flexible architecture.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge