Ajay B. Satpute

Variation is the Norm: Brain State Dynamics Evoked By Emotional Video Clips

Oct 24, 2021

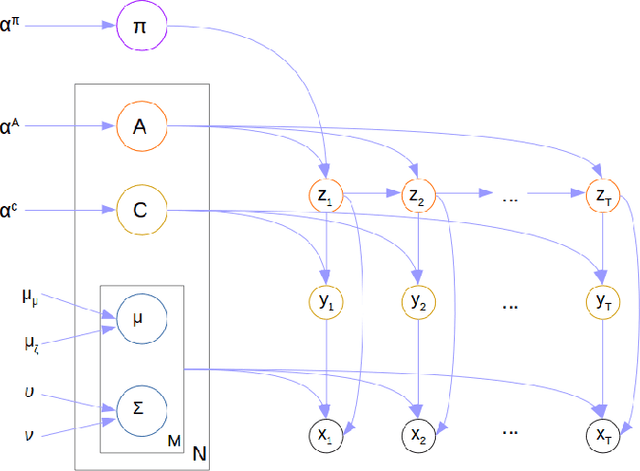

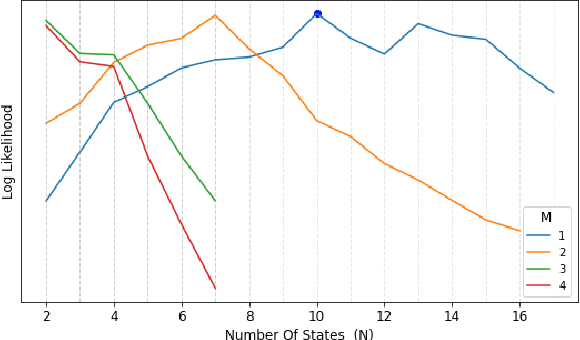

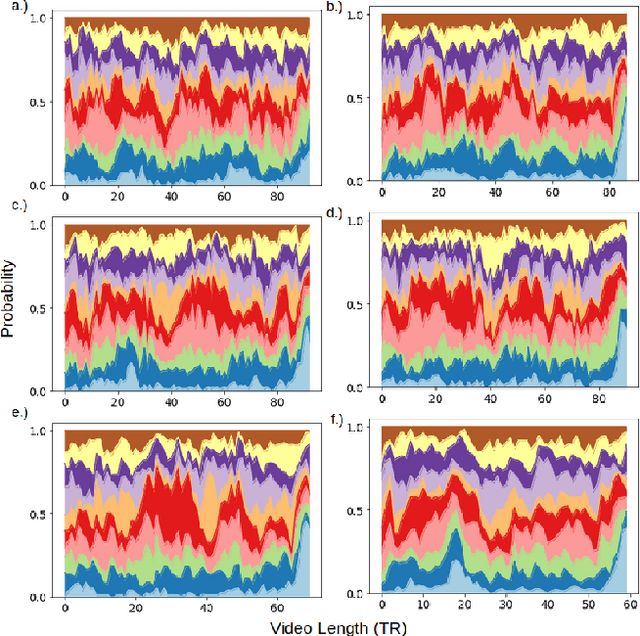

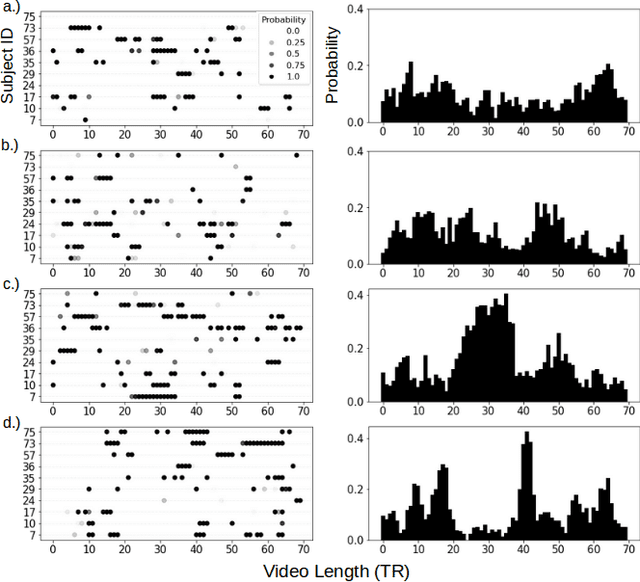

Abstract: For the last several decades, emotion research has attempted to identify a "biomarker" or consistent pattern of brain activity to characterize a single category of emotion (e.g., fear) that will remain consistent across all instances of that category, regardless of individual and context. In this study, we investigated variation rather than consistency during emotional experiences while people watched video clips chosen to evoke instances of specific emotion categories. Specifically, we developed a sequential probabilistic approach to model the temporal dynamics in a participant's brain activity during video viewing. We characterized brain states during these clips as distinct state occupancy periods between state transitions in blood oxygen level dependent (BOLD) signal patterns. We found substantial variation in the state occupancy probability distributions across individuals watching the same video, supporting the hypothesis that when it comes to the brain correlates of emotional experience, variation may indeed be the norm.
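To make the terms "state occupancy period" and "state occupancy probability distribution" concrete, here is a minimal sketch in Python. It is not the paper's model: it assumes a per-timepoint brain state label has already been inferred for each participant, and the label sequences, the function names, and the use of Jensen-Shannon divergence to compare participants are all illustrative assumptions.

```python
from collections import Counter
from math import log2


def occupancy_distribution(states, n_states):
    """Fraction of time points spent in each state (sums to 1)."""
    counts = Counter(states)
    total = len(states)
    return [counts.get(k, 0) / total for k in range(n_states)]


def occupancy_periods(states):
    """Collapse a label sequence into (state, duration) runs.

    Each run is one occupancy period; a new run starts at every transition.
    """
    runs = []
    for s in states:
        if runs and runs[-1][0] == s:
            runs[-1][1] += 1
        else:
            runs.append([s, 1])
    return [(s, d) for s, d in runs]


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits (0 = identical, 1 = disjoint)."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        return sum(a * log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical state labels for two participants watching the same clip.
participant_a = [0, 0, 0, 1, 1, 2, 2, 2, 2, 0]
participant_b = [2, 2, 1, 1, 1, 1, 1, 0, 2, 2]

p_a = occupancy_distribution(participant_a, n_states=3)
p_b = occupancy_distribution(participant_b, n_states=3)

print(occupancy_periods(participant_a))
print(p_a, p_b, js_divergence(p_a, p_b))
```

A divergence near zero would indicate that two participants spent their time in the same states in similar proportions; larger values correspond to the kind of between-individual variation the abstract reports.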

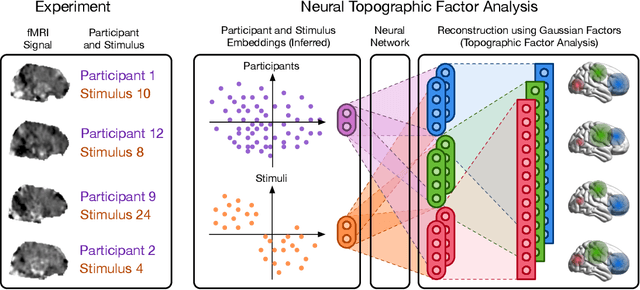

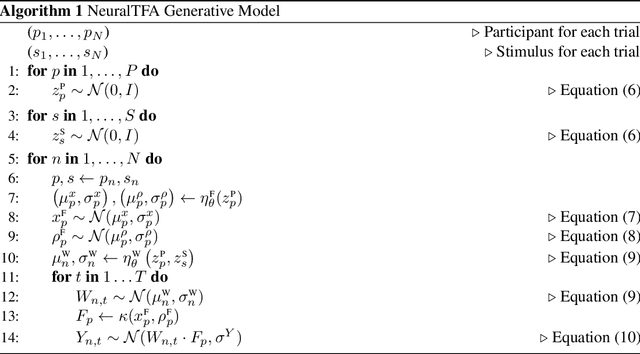

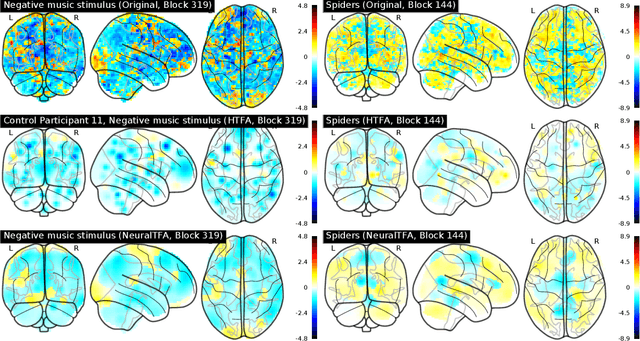

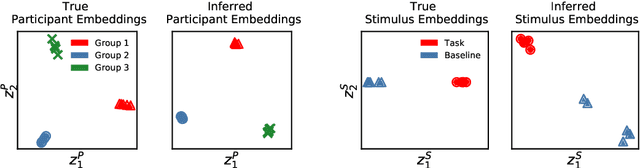

Neural Topographic Factor Analysis for fMRI Data

Jun 21, 2019

Abstract: Neuroimaging experiments produce a large volume (gigabytes) of high-dimensional spatio-temporal data for a small number of sampled participants and stimuli. Analyses of this data commonly compute averages over all trials, ignoring variation within groups of participants and stimuli. To enable the analysis of fMRI data without this implicit assumption of uniformity, we propose Neural Topographic Factor Analysis (NTFA), a deep generative model that parameterizes factors as functions of embeddings for participants and stimuli. We evaluate NTFA on a synthetically generated dataset as well as on three datasets from fMRI experiments. Our results demonstrate that NTFA yields more accurate reconstructions than a state-of-the-art method with fewer parameters. Moreover, learned embeddings uncover latent categories of participants and stimuli, which suggests that NTFA takes a first step towards reasoning about individual variation in fMRI experiments.
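The phrase "parameterizes factors as functions of embeddings" can be illustrated with a small forward-pass sketch in Python. This is not the NTFA implementation. It assumes that spatial factors are radial basis functions over voxel coordinates, it substitutes fixed random linear maps for the learned neural networks, and it performs no inference or training. Every name and dimension below is hypothetical.

```python
import math
import random


def linear(weights, x):
    """Apply a weight matrix (list of rows) to a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def rbf_factors(centers, log_widths, voxel_xyz):
    """K x V matrix: one radial basis function per factor, evaluated per voxel."""
    return [
        [
            math.exp(-sum((v - c) ** 2 for v, c in zip(vox, center)) / math.exp(lw))
            for vox in voxel_xyz
        ]
        for center, lw in zip(centers, log_widths)
    ]


def reconstruct(z_participant, z_stimulus, params, voxel_xyz):
    """Predict one brain image from a participant and a stimulus embedding."""
    k = params["n_factors"]
    # Assumption: factor geometry depends on the participant embedding only.
    out = linear(params["factor_map"], z_participant)
    centers = [out[4 * i : 4 * i + 3] for i in range(k)]
    log_widths = [out[4 * i + 3] for i in range(k)]
    factors = rbf_factors(centers, log_widths, voxel_xyz)
    # Assumption: factor weights depend on both embeddings, concatenated.
    w = linear(params["weight_map"], z_participant + z_stimulus)
    return [sum(w[i] * factors[i][v] for i in range(k)) for v in range(len(voxel_xyz))]


rng = random.Random(0)
n_factors, dim_p, dim_s = 2, 2, 2
params = {
    "n_factors": n_factors,
    # Random stand-ins for learned networks, scaled down to keep widths moderate.
    "factor_map": [[0.5 * rng.gauss(0, 1) for _ in range(dim_p)] for _ in range(4 * n_factors)],
    "weight_map": [[rng.gauss(0, 1) for _ in range(dim_p + dim_s)] for _ in range(n_factors)],
}
# A tiny 2 x 2 x 2 grid of voxel coordinates.
voxels = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]

image_a = reconstruct([0.5, -1.0], [1.0, 0.2], params, voxels)
image_b = reconstruct([-0.3, 0.8], [1.0, 0.2], params, voxels)
print(image_a)
print(image_b)
```

Here the same stimulus embedding yields different predicted images for two participant embeddings, which is the sense in which such a model avoids assuming uniformity across participants.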
