Aigerim Bogyrbayeva

Department of Industrial and Management Systems Engineering, University of South Florida, Tampa, Florida, USA

Learning to Solve Vehicle Routing Problems: A Survey

May 05, 2022

Abstract:This paper provides a systematic overview of machine learning methods applied to solve NP-hard Vehicle Routing Problems (VRPs). Recently, there has been a great interest from both machine learning and operations research communities to solve VRPs either by pure learning methods or by combining them with the traditional hand-crafted heuristics. We present the taxonomy of the studies for learning paradigms, solution structures, underlying models, and algorithms. We present in detail the results of the state-of-the-art methods demonstrating their competitiveness with the traditional methods. The paper outlines the future research directions to incorporate learning-based solutions to overcome the challenges of modern transportation systems.

A Deep Reinforcement Learning Approach for Solving the Traveling Salesman Problem with Drone

Dec 31, 2021

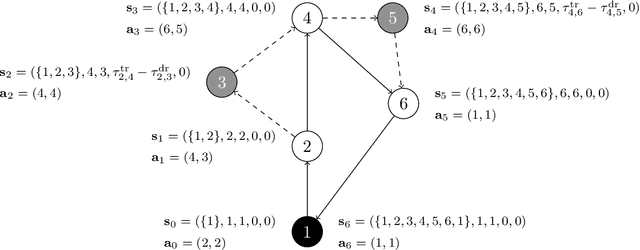

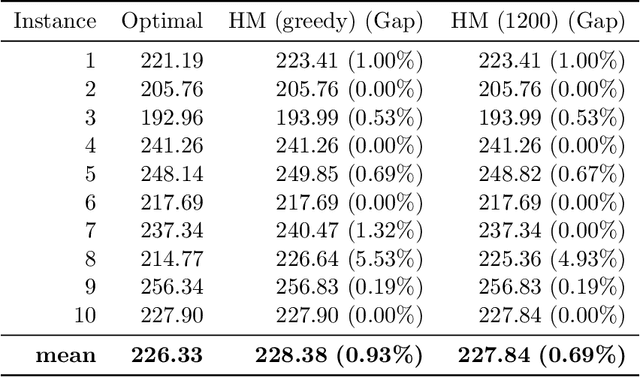

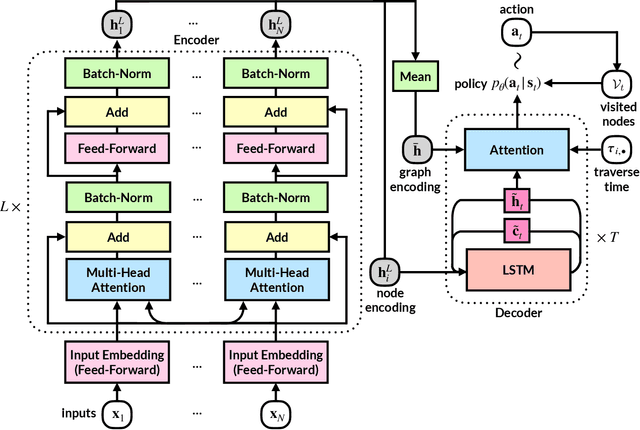

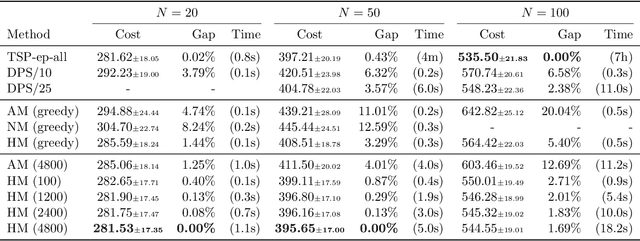

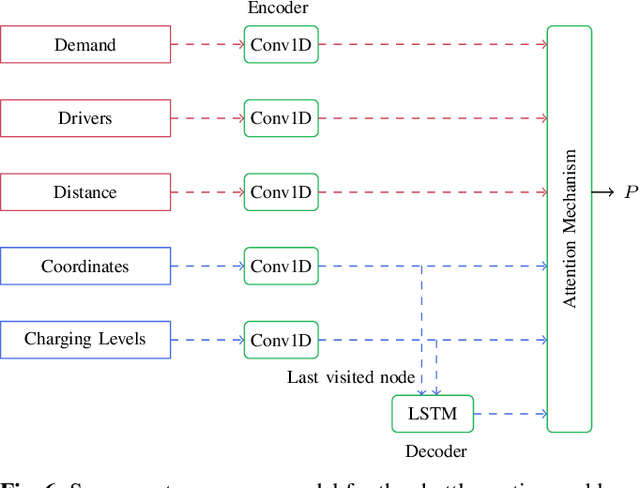

Abstract:Reinforcement learning has recently shown promise in learning quality solutions in many combinatorial optimization problems. In particular, the attention-based encoder-decoder models show high effectiveness on various routing problems, including the Traveling Salesman Problem (TSP). Unfortunately, they perform poorly for the TSP with Drone (TSP-D), requiring routing a heterogeneous fleet of vehicles in coordination -- a truck and a drone. In TSP-D, the two vehicles are moving in tandem and may need to wait at a node for the other vehicle to join. State-less attention-based decoder fails to make such coordination between vehicles. We propose an attention encoder-LSTM decoder hybrid model, in which the decoder's hidden state can represent the sequence of actions made. We empirically demonstrate that such a hybrid model improves upon a purely attention-based model for both solution quality and computational efficiency. Our experiments on the min-max Capacitated Vehicle Routing Problem (mmCVRP) also confirm that the hybrid model is more suitable for coordinated routing of multiple vehicles than the attention-based model.

A Reinforcement Learning Approach for Rebalancing Electric Vehicle Sharing Systems

Oct 05, 2020

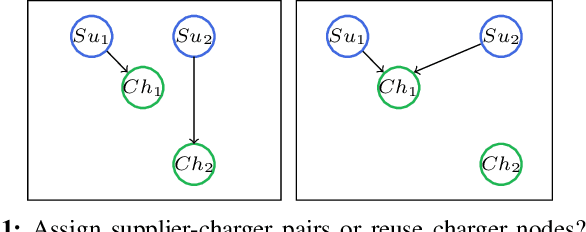

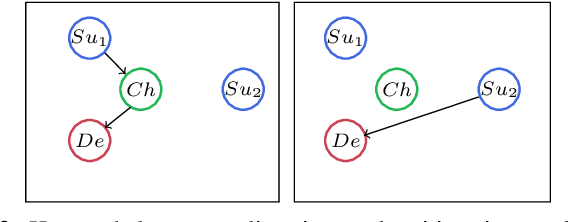

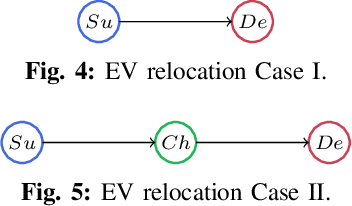

Abstract:This paper proposes a reinforcement learning approach for nightly offline rebalancing operations in free-floating electric vehicle sharing systems (FFEVSS). Due to sparse demand in a network, FFEVSS require relocation of electrical vehicles (EVs) to charging stations and demander nodes, which is typically done by a group of drivers. A shuttle is used to pick up and drop off drivers throughout the network. The objective of this study is to solve the shuttle routing problem to finish the rebalancing work in the minimal time. We consider a reinforcement learning framework for the problem, in which a central controller determines the routing policies of a fleet of multiple shuttles. We deploy a policy gradient method for training recurrent neural networks and compare the obtained policy results with heuristic solutions. Our numerical studies show that unlike the existing solutions in the literature, the proposed methods allow to solve the general version of the problem with no restrictions on the urban EV network structure and charging requirements of EVs. Moreover, the learned policies offer a wide range of flexibility resulting in a significant reduction in the time needed to rebalance the network.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge