Ahmed Zoha

Automated Classification of Phonetic Segments in Child Speech Using Raw Ultrasound Imaging

Feb 27, 2024

Abstract:Speech sound disorder (SSD) is defined as a persistent impairment in speech sound production leading to reduced speech intelligibility and hindered verbal communication. Early recognition of children with SSD, early intervention, and timely referral to speech and language therapists (SLTs) for treatment are crucial. Automated detection of speech impairment is regarded as an efficient method for examining and screening large populations. This study focuses on advancing the automatic diagnosis of SSD in early childhood by proposing a technical solution that integrates ultrasound tongue imaging (UTI) with deep-learning models. The introduced FusionNet model combines UTI data with the extracted texture features to classify UTI. The overarching aim is to improve the accuracy and efficiency of UTI analysis, particularly for classifying speech sounds associated with SSD. This study compared the FusionNet approach with standard deep-learning methodologies, highlighting the improvement achieved by the FusionNet model in UTI classification and the potential of multi-learning to improve UTI classification in speech therapy clinics.

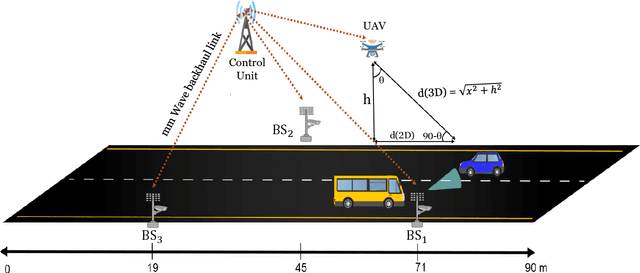

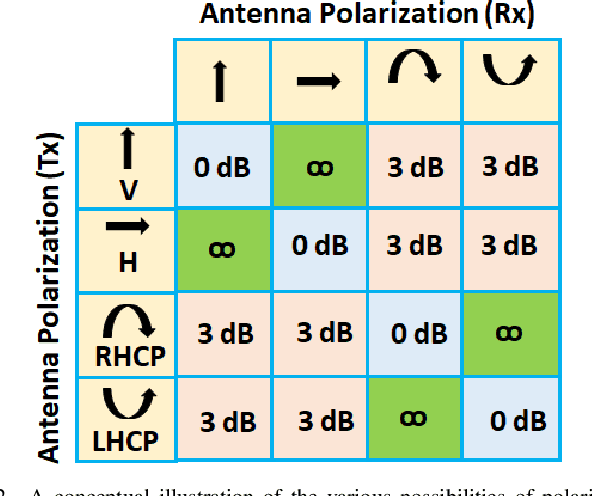

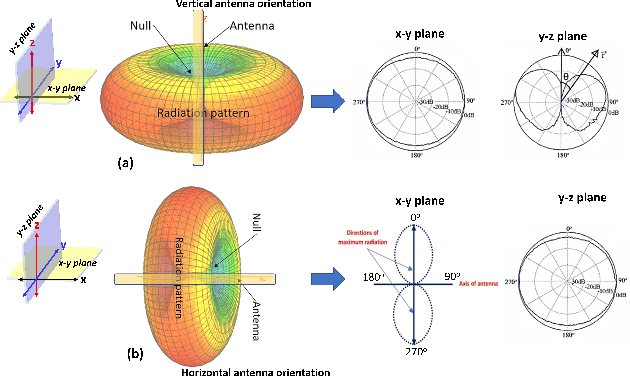

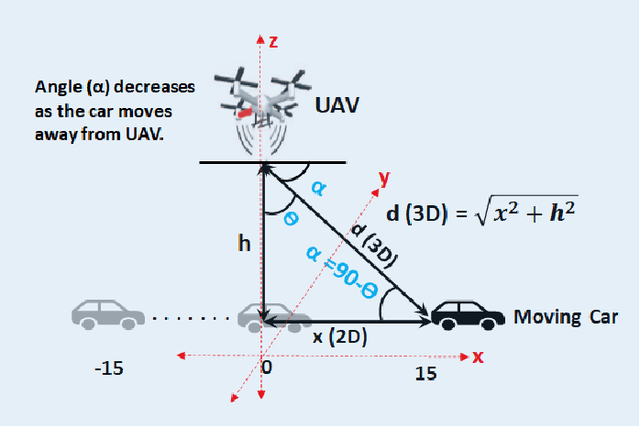

Proactive Blockage Prediction for UAV assisted Handover in Future Wireless Network

Feb 06, 2024

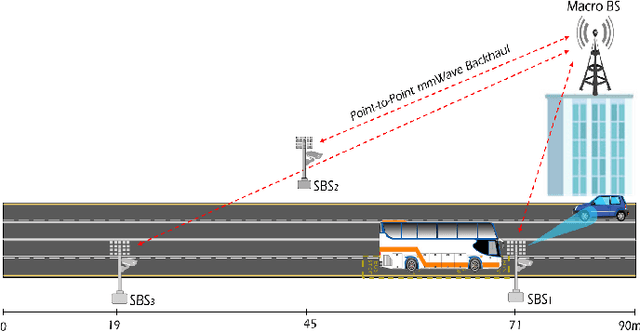

Abstract:Future wireless communication applications demand seamless connectivity, higher throughput, and low latency, for which the millimeter-wave (mmWave) band is considered a potential technology. Nevertheless, line-of-sight (LoS) is often mandatory for mmWave band communication, which renders these waves sensitive to sudden changes in the environment. Therefore, it is necessary to maintain the LoS link for a reliable connection. One technique for maintaining LoS is proactive handover (HO). However, proactive HO is challenging, requiring continuous information about the surrounding wireless network to anticipate potential blockage. This paper presents a proactive blockage prediction mechanism where an unmanned aerial vehicle (UAV) is used as the base station for HO. The proposed scheme uses computer vision (CV) to obtain potential blocking objects, user speed, and location. To assess the effectiveness of the proposed scheme, the system is evaluated using a publicly available dataset for blockage prediction. The study integrates scenarios from Vision-based Wireless (ViWi) and UAV channel modeling, generating wireless data samples relevant to UAVs. The antenna modeling on the UAV end incorporates a polarization-matched scenario to optimize signal reception. The results demonstrate that UAV-assisted handover not only ensures seamless connectivity but also enhances overall network performance by 20%. This research contributes to the advancement of proactive blockage mitigation strategies in wireless networks, showcasing the potential of UAVs as dynamic and adaptable base stations.

Enhancing Reliability in Federated mmWave Networks: A Practical and Scalable Solution using Radar-Aided Dynamic Blockage Recognition

Jun 22, 2023

Abstract:This article introduces a new method to improve the dependability of millimeter-wave (mmWave) and terahertz (THz) network services in dynamic outdoor environments. In these settings, line-of-sight (LoS) connections are easily interrupted by moving obstacles like humans and vehicles. The proposed approach, termed Radar-aided Dynamic blockage Recognition (RaDaR), leverages radar measurements and federated learning (FL) to train a dual-output neural network (NN) model capable of simultaneously predicting blockage status and time. This enables determining the optimal point for proactive handover (PHO) or beam switching, thereby reducing the latency introduced by 5G new radio procedures and ensuring high quality of experience (QoE). The framework employs radar sensors to monitor and track object movement, generating range-angle and range-velocity maps that are useful for scene analysis and predictions. Moreover, FL provides additional benefits such as privacy protection, scalability, and knowledge sharing. The framework is assessed using an extensive real-world dataset comprising mmWave channel information and radar data. The evaluation results show that RaDaR substantially enhances network reliability, achieving an average success rate of 94% for PHO compared to existing reactive HO procedures that lack proactive blockage prediction. Additionally, RaDaR maintains a superior QoE by ensuring sustained high throughput levels and minimising PHO latency.

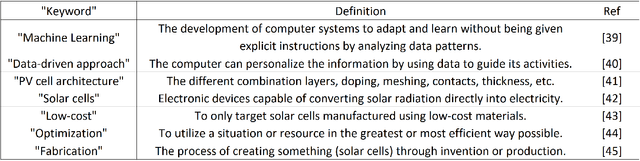

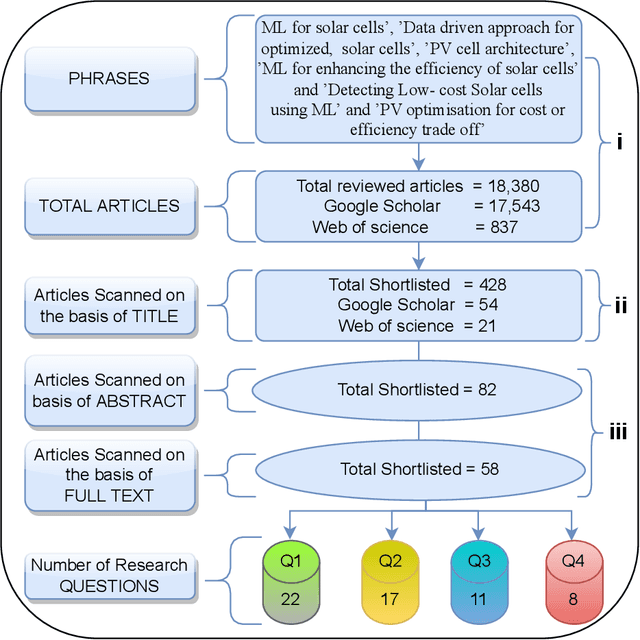

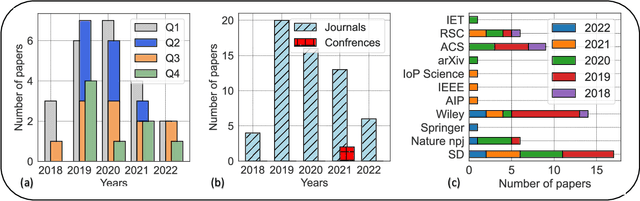

Machine learning for accelerating the discovery of high performance low-cost solar cells: a systematic review

Dec 26, 2022

Abstract:Solar photovoltaic (PV) technology has emerged as an efficient and versatile method for converting the Sun's vast energy into electricity. Innovation in developing new materials and solar cell architectures is required to ensure lightweight, portable, and flexible miniaturized electronic devices operate for long periods with reduced battery demand. Recent advances in biomedical implantable and wearable devices have coincided with a growing interest in efficient energy-harvesting solutions. Such devices primarily rely on rechargeable batteries to satisfy their energy needs. Moreover, Artificial Intelligence (AI) and Machine Learning (ML) techniques are touted as game changers in energy harvesting, especially in solar energy materials. In this article, we systematically review a range of ML techniques for optimizing the performance of low-cost solar cells for miniaturized electronic devices. Our systematic review reveals that these ML techniques can expedite the discovery of new solar cell materials and architectures. In particular, this review covers a broad range of ML techniques targeted at producing low-cost solar cells. Moreover, we present a new method of classifying the literature according to data synthesis, ML algorithms, optimization, and fabrication process. In addition, our review reveals that the Gaussian Process Regression (GPR) ML technique with Bayesian Optimization (BO) enables the design of the most promising low-cost solar cell architecture. Therefore, our review is a critical evaluation of existing ML techniques and is presented to guide researchers in discovering the next generation of low-cost solar cells using ML techniques.

FedTrees: A Novel Computation-Communication Efficient Federated Learning Framework Investigated in Smart Grids

Sep 30, 2022

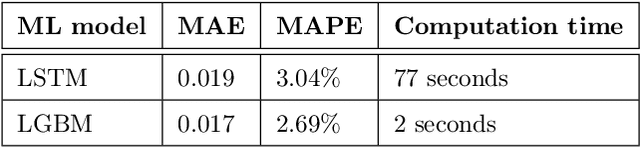

Abstract:Smart energy performance monitoring and optimisation at the supplier and consumer levels is essential to realising smart cities. In order to implement a more sustainable energy management plan, it is crucial to conduct a better energy forecast. The next-generation smart meters can also be used to measure, record, and report energy consumption data, which can be used to train machine learning (ML) models for predicting energy needs. However, sharing fine-grained energy data and performing centralised learning may compromise users' privacy and leave them vulnerable to several attacks. This study addresses this issue by utilising federated learning (FL), an emerging technique that performs ML model training at the user level, where data resides. We introduce FedTrees, a new, lightweight FL framework that benefits from the outstanding features of ensemble learning. Furthermore, we developed a delta-based early stopping algorithm to monitor FL training and stop it when it does not need to continue. The simulation results demonstrate that FedTrees outperforms the most popular federated averaging (FedAvg) framework and the baseline Persistence model for providing accurate energy forecasting patterns while taking only 2% of the computation time and 13% of the communication rounds compared to FedAvg, saving considerable amounts of computation and communication resources.
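The abstract does not spell out the delta-based early stopping rule, so the following is only a minimal sketch of one common form such a rule can take: stop federated training once the per-round improvement in a monitored loss stays below a threshold for several consecutive rounds. The names `should_stop` and `run_rounds`, and the parameters `delta` and `patience`, are assumptions made for illustration and are not taken from the FedTrees paper.

```python
from typing import Iterable, List


def should_stop(losses: List[float], delta: float = 1e-3, patience: int = 3) -> bool:
    """Return True if each of the last `patience` rounds improved the loss by less than `delta`.

    Hypothetical stopping rule; the paper's exact criterion may differ.
    """
    if len(losses) <= patience:
        return False  # not enough history to judge yet
    recent = losses[-(patience + 1):]
    # Improvement is the drop in loss from one round to the next.
    improvements = [recent[i] - recent[i + 1] for i in range(patience)]
    return all(imp < delta for imp in improvements)


def run_rounds(round_losses: Iterable[float], delta: float = 1e-3, patience: int = 3) -> int:
    """Consume per-round losses and return how many rounds ran before stopping."""
    history: List[float] = []
    for loss in round_losses:
        history.append(loss)
        if should_stop(history, delta, patience):
            break
    return len(history)


if __name__ == "__main__":
    simulated = [1.0, 0.5, 0.3, 0.2999, 0.2998, 0.2997, 0.1]
    print("rounds executed:", run_rounds(simulated))
```

In this sketch a larger `patience` trades extra communication rounds for robustness against a temporary plateau.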

Intelligent Blockage Prediction and Proactive Handover for Seamless Connectivity in Vision-Aided 5G/6G UDNs

Feb 21, 2022

Abstract:The upsurge in wireless devices and real-time service demands is forcing a move to a higher frequency spectrum. Millimetre-wave (mmWave) and terahertz (THz) bands combined with beamforming technology offer significant performance enhancements for ultra-dense networks (UDNs). Unfortunately, the shrinking cell coverage and severe penetration loss experienced at higher frequencies render mobility management a critical issue in UDNs, especially in handling beam blockages and frequent handover (HO). Mobility management challenges have become prevalent in city centres and urban areas. To address this, we propose a novel mechanism that exploits wireless signals and on-road surveillance systems to intelligently predict possible blockages in advance and perform timely HO. This paper employs computer vision (CV) to determine obstacles and users' location and speed. In addition, this study introduces a new HO event, called block event (BLK), defined by the presence of a blocking object and a user moving towards the blocked area. Moreover, a multivariate regression technique predicts the remaining time until the user reaches the blocked area, and hence determines the best HO decision. Compared to typical wireless networks without blockage prediction, simulation results show that our BLK detection and proactive HO (PHO) algorithm achieves a 40% improvement in maintaining user connectivity and the required quality of experience (QoE).
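To make the BLK definition concrete, the sketch below flags the event when a blocking object is present and the user is moving towards the blocked area, and estimates the remaining time with a simple constant-velocity calculation. This kinematic estimate is a stand-in chosen for illustration; the paper uses multivariate regression, whose features and coefficients are not given in the abstract. All function and parameter names here are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float]  # 2-D position (m) or velocity (m/s)


def time_to_blocked_area(user_pos: Vec, user_vel: Vec, blocked_pos: Vec) -> Optional[float]:
    """Seconds until the user reaches the blocked area, or None if not approaching it.

    Assumes constant velocity; this replaces the paper's regression model.
    """
    dx = blocked_pos[0] - user_pos[0]
    dy = blocked_pos[1] - user_pos[1]
    distance = math.hypot(dx, dy)
    if distance == 0.0:
        return 0.0  # user is already inside the blocked area
    # Component of the velocity along the direction to the blocked area.
    closing_speed = (user_vel[0] * dx + user_vel[1] * dy) / distance
    if closing_speed <= 0.0:
        return None  # stationary or moving away
    return distance / closing_speed


def blk_event(blocker_present: bool, user_pos: Vec, user_vel: Vec,
              blocked_pos: Vec) -> Tuple[bool, Optional[float]]:
    """Return (BLK raised?, estimated remaining time in seconds)."""
    if not blocker_present:
        return False, None
    remaining = time_to_blocked_area(user_pos, user_vel, blocked_pos)
    return remaining is not None, remaining


if __name__ == "__main__":
    print(blk_event(True, (0.0, 0.0), (2.0, 0.0), (10.0, 0.0)))
```

A handover scheduler could compare the estimated remaining time against the time an HO procedure needs, though that threshold is not specified in the abstract.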

Edge-Native Intelligence for 6G Communications Driven by Federated Learning: A Survey of Trends and Challenges

Nov 14, 2021

Abstract:The unprecedented surge of data volume in wireless networks empowered with artificial intelligence (AI) opens up new horizons for providing ubiquitous data-driven intelligent services. Traditional cloud-centric machine learning (ML)-based services are implemented by collecting datasets and training models centrally. However, this conventional training technique faces two challenges: (i) high communication and energy cost due to increased data communication, and (ii) threatened data privacy, since untrusted parties may be allowed to utilise this information. Recently, in light of these limitations, an emerging technique termed federated learning (FL) has arisen to bring ML to the edge of wireless networks. FL can extract the benefits of data silos by training a global model in a distributed manner, orchestrated by the FL server. FL exploits both decentralised datasets and computing resources of participating clients to develop a generalised ML model without compromising data privacy. In this article, we introduce a comprehensive survey of the fundamentals and enabling technologies of FL. Moreover, an extensive study is presented detailing various applications of FL in wireless networks and highlighting their challenges and limitations. The efficacy of FL is further explored with prospective beyond-fifth-generation (B5G) and sixth-generation (6G) communication systems. The purpose of this survey is to provide an overview of the state of the art in FL applications in key wireless technologies that will serve as a foundation to establish a firm understanding of the topic. Lastly, we outline directions for future research.
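As an illustration of how an FL server can combine client updates into a global model, here is a minimal sketch of the aggregation step used in federated averaging (FedAvg): each client's parameters are weighted by its local sample count. The survey covers many FL variants, so this is one representative example rather than a summary of its content. Models are simplified to flat lists of floats, and the function name `federated_average` is an assumption.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Sample-size-weighted average of client parameter vectors (FedAvg aggregation)."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    n_params = len(client_weights[0])
    global_weights = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != n_params:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Clients with more local data contribute proportionally more.
            global_weights[i] += w * size / total
    return global_weights


if __name__ == "__main__":
    clients = [[1.0, 2.0], [3.0, 4.0]]
    sizes = [1, 3]
    print(federated_average(clients, sizes))
```

Only the parameter vectors and sample counts reach the server in this sketch; the raw client data never leaves the device, which is the privacy property the abstract refers to.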
