Sajjad Hussain

RadioSim Agent: Combining Large Language Models and Deterministic EM Simulators for Interactive Radio Map Analysis

Nov 08, 2025

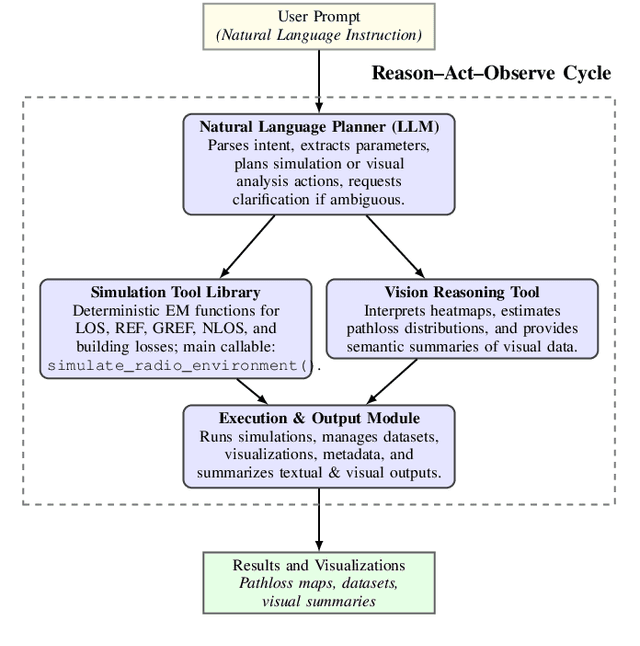

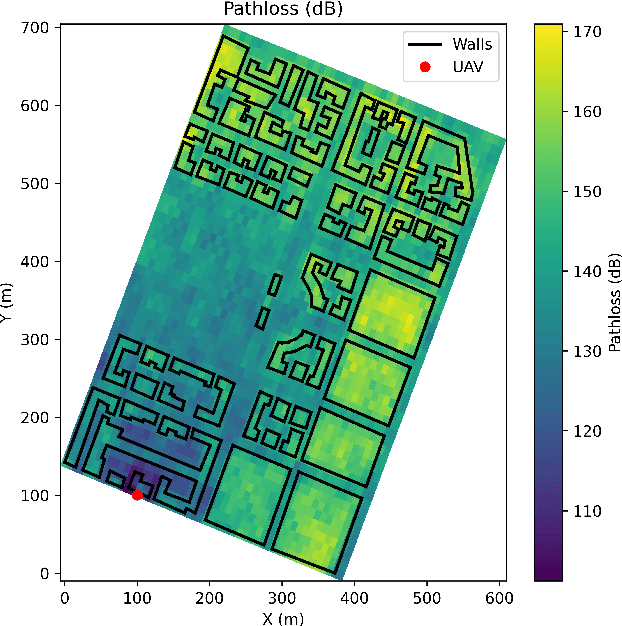

Abstract: Deterministic electromagnetic (EM) simulators provide accurate radio propagation modeling but often require expert configuration and lack interactive flexibility. We present RadioSim Agent, an agentic framework that integrates large language models (LLMs) with physics-based EM solvers and vision-enabled reasoning to enable interactive and explainable radio map generation. The framework encapsulates ray-tracing models as callable simulation tools, orchestrated by an LLM capable of interpreting natural language objectives, managing simulation workflows, and visually analyzing resulting radio maps. Demonstrations in urban UAV communication scenarios show that the agent autonomously selects appropriate propagation mechanisms, executes deterministic simulations, and provides semantic and visual summaries of pathloss behavior. The results indicate that RadioSim Agent provides multimodal interpretability and intuitive user interaction, paving the way for intelligent EM simulation assistants in next-generation wireless system design.

A Robust and Energy-Efficient Trajectory Planning Framework for High-Degree-of-Freedom Robots

Mar 13, 2025

Abstract: Energy efficiency and motion smoothness are essential in trajectory planning for high-degree-of-freedom robots to ensure optimal performance and reduce mechanical wear. This paper presents a novel framework integrating sinusoidal trajectory generation with velocity scaling to minimize energy consumption while maintaining motion accuracy and smoothness. The framework is evaluated using a physics-based simulation environment with metrics such as energy consumption, motion smoothness, and trajectory accuracy. Results indicate significant energy savings and smooth transitions, demonstrating the framework's effectiveness for precision-based applications. Future work includes real-time trajectory adjustments and enhanced energy models.
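The abstract above does not give the paper's actual formulation, so the following is only a minimal sketch of one common way to combine a sinusoidal profile with velocity scaling. It assumes a cosine-blend point-to-point move in joint space and a single speed limit `v_max` shared by all joints; the function names `min_duration` and `sinusoidal_point` are hypothetical and chosen for illustration.

```python
import math


def min_duration(q_start, q_goal, v_max):
    """Shortest move time that keeps every joint at or below v_max (assumed shared limit)."""
    if v_max <= 0.0:
        raise ValueError("v_max must be positive")
    # The cosine-blend profile peaks at |dq| * pi / (2 * T), so T = |dq| * pi / (2 * v_max).
    largest_move = max(abs(g - s) for s, g in zip(q_start, q_goal))
    return largest_move * math.pi / (2.0 * v_max)


def sinusoidal_point(q_start, q_goal, t, duration):
    """Joint positions and velocities at time t on a cosine-blend trajectory."""
    if duration <= 0.0:
        # Degenerate move (start equals goal): stay at the goal with zero velocity.
        return list(q_goal), [0.0] * len(q_goal)
    s = min(max(t / duration, 0.0), 1.0)                     # normalized time in [0, 1]
    blend = 0.5 * (1.0 - math.cos(math.pi * s))              # goes smoothly from 0 to 1
    rate = 0.5 * math.pi * math.sin(math.pi * s) / duration  # time derivative of blend
    q = [a + (b - a) * blend for a, b in zip(q_start, q_goal)]
    qd = [(b - a) * rate for a, b in zip(q_start, q_goal)]
    return q, qd


if __name__ == "__main__":
    start, goal = [0.0, 0.5, -1.0], [1.0, 0.5, 1.0]
    T = min_duration(start, goal, v_max=1.0)
    for k in range(5):
        q, qd = sinusoidal_point(start, goal, k * T / 4, T)
        print([round(x, 3) for x in q], [round(x, 3) for x in qd])
```

In this sketch the velocity is zero at both ends of the move, which is what makes transitions smooth, and lengthening the duration is the only scaling knob. An energy-aware planner would presumably choose the duration from an energy model rather than from a speed limit alone.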

Advancements in Gesture Recognition Techniques and Machine Learning for Enhanced Human-Robot Interaction: A Comprehensive Review

Sep 10, 2024

Abstract: In recent years, robots have become an important part of day-to-day life, with a wide range of applications. Human-robot interaction (HRI) lets people communicate and work with these robots, and gesture recognition techniques combined with machine learning algorithms have made remarkable progress in this area. This paper comprehensively reviews the latest advancements in gesture recognition methods and their integration with machine learning approaches to enhance HRI. It also presents vision-based gesture recognition with a depth-sensing system for safe and reliable human-robot interaction, and analyses the role of machine learning approaches such as deep learning, reinforcement learning, and transfer learning in improving the accuracy and robustness of gesture recognition systems for effective communication between humans and robots.

Mathematical Modeling Of Four Finger Robotic Grippers

Sep 10, 2024

Abstract: Robotic grippers are the end effectors of a robot system and are used to perform a variety of operations in industrial applications and hazardous tasks. In this paper, we develop a mathematical model for multi-finger robotic grippers, focusing on Jamia's hand, which was developed in the Robotics Lab, Department of Mechanical Engineering, Faculty of Engineering & Technology, Jamia Millia Islamia, India. It is a tendon-driven gripper in which each finger has three DOF, for a total of 11 DOF. The term tendon is widely used to imply belts, cables, or similar types of applications. The hand is made up of three fingers and a thumb. Every finger and thumb has one degree of freedom. The power transmission mechanism is a rope and pulley system. Both hands have similar structures. The hand is made of aluminum from the 5083 family, and the gripping force can be adjusted. We carry out kinematic, force, and dynamic analyses by developing a mathematical model for the four-finger robotic gripper and its thumb. We focus on controlling motion in terms of X and Y displacements and angular positions, and we perform a force analysis of the four fingers and thumb to calculate the maximum weight, force, and torque required to move them with a mass. The force-displacement graph shows linear behavior up to 250 N and nonlinear behavior beyond this. The required minimum wire diameter (Dmin) is 0.86 mm for grasping the maximum load of 1 kg. We also develop a dynamic model using the energy-based Lagrangian method to find the torque required to move the fingers.

Simulation and optimization of computed torque control 3 DOF RRR manipulator using MATLAB

Sep 07, 2024

Abstract: Robot manipulators have become a significant tool for production industries due to their advantages in high speed, accuracy, safety, and repeatability. This paper simulates and optimizes the design of a 3-DOF articulated robotic manipulator (RRR configuration). The forward and inverse dynamic models are utilized. The trajectory is planned using the end effector's required initial position. A computed torque model is used to calculate the physical end effector's trajectory, position, and velocity. The MATLAB Simulink platform is used for all simulations of the RRR manipulator. With the aid of MATLAB, we primarily focused on manipulator control using a computed torque control strategy to achieve the required position.
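The abstract does not state the control law it uses. For orientation, the standard textbook form of computed torque control is given here, with symbols that are assumptions rather than the paper's notation: $q$ is the vector of joint angles, $q_d$ the desired trajectory, $M(q)$ the inertia matrix, $C(q,\dot{q})\,\dot{q}$ the Coriolis and centrifugal terms, $g(q)$ the gravity vector, and $K_p$, $K_d$ positive-definite gain matrices.

$$
\tau = M(q)\left(\ddot{q}_d + K_d\,(\dot{q}_d - \dot{q}) + K_p\,(q_d - q)\right) + C(q,\dot{q})\,\dot{q} + g(q)
$$

If the model terms match the real manipulator exactly, substituting this torque into the dynamics $M(q)\,\ddot{q} + C(q,\dot{q})\,\dot{q} + g(q) = \tau$ leaves the linear error dynamics $\ddot{e} + K_d\,\dot{e} + K_p\,e = 0$ with $e = q_d - q$, so the tracking error decays at a rate set by the gains.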

Proactive Blockage Prediction for UAV assisted Handover in Future Wireless Network

Feb 06, 2024

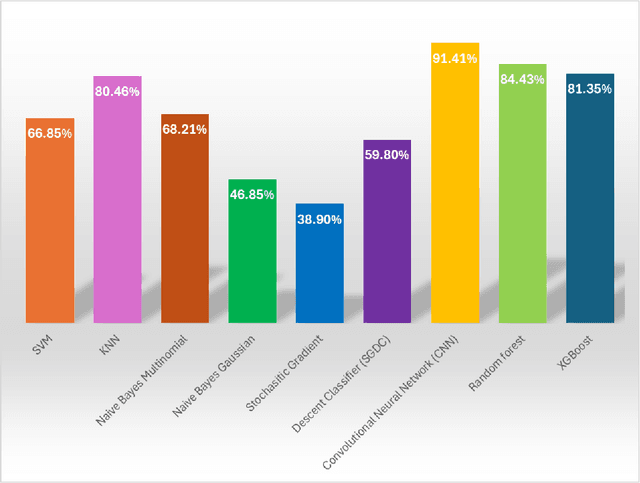

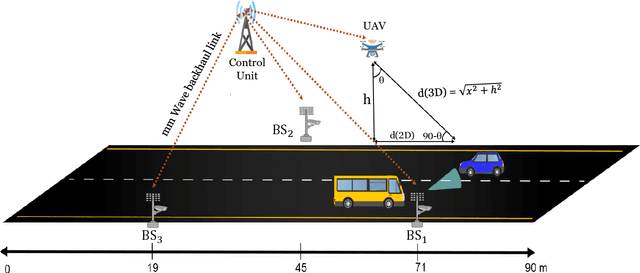

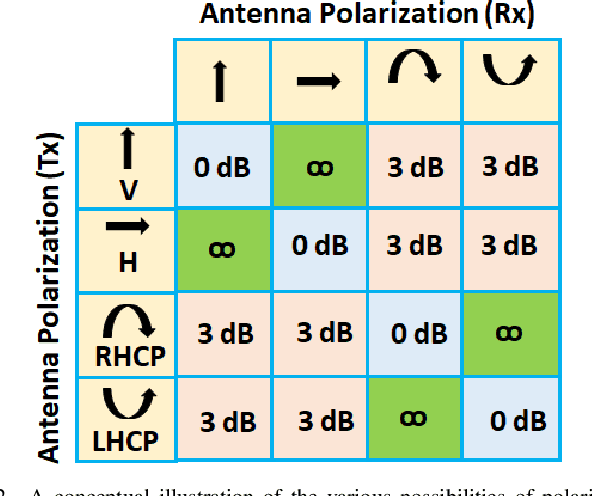

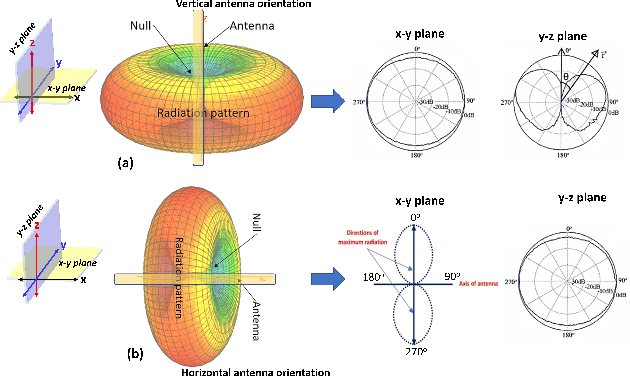

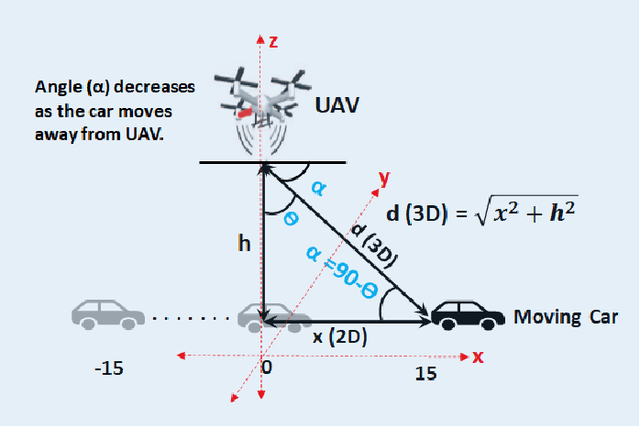

Abstract: Future wireless communication applications demand seamless connectivity, higher throughput, and low latency, for which the millimeter-wave (mmWave) band is considered a potential technology. Nevertheless, line-of-sight (LoS) is often mandatory for mmWave band communication, which renders these waves sensitive to sudden changes in the environment. Therefore, it is necessary to maintain the LoS link for a reliable connection. One technique for maintaining LoS is proactive handover (HO). However, proactive HO is challenging, requiring continuous information about the surrounding wireless network to anticipate potential blockage. This paper presents a proactive blockage prediction mechanism where an unmanned aerial vehicle (UAV) is used as the base station for HO. The proposed scheme uses computer vision (CV) to obtain potential blocking objects, user speed, and location. To assess the effectiveness of the proposed scheme, the system is evaluated using a publicly available dataset for blockage prediction. The study integrates scenarios from Vision-based Wireless (ViWi) and UAV channel modeling, generating wireless data samples relevant to UAVs. The antenna modeling on the UAV end incorporates a polarization-matched scenario to optimize signal reception. The results demonstrate that UAV-assisted handover not only ensures seamless connectivity but also enhances overall network performance by 20%. This research contributes to the advancement of proactive blockage mitigation strategies in wireless networks, showcasing the potential of UAVs as dynamic and adaptable base stations.

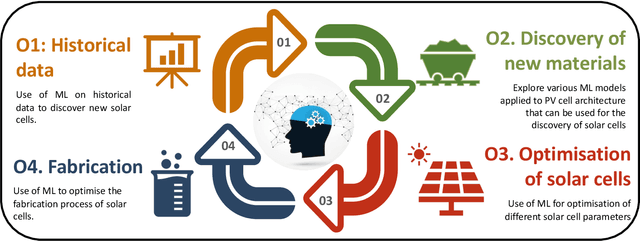

Machine learning for accelerating the discovery of high performance low-cost solar cells: a systematic review

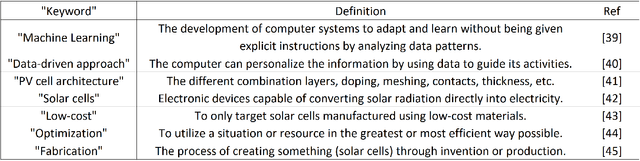

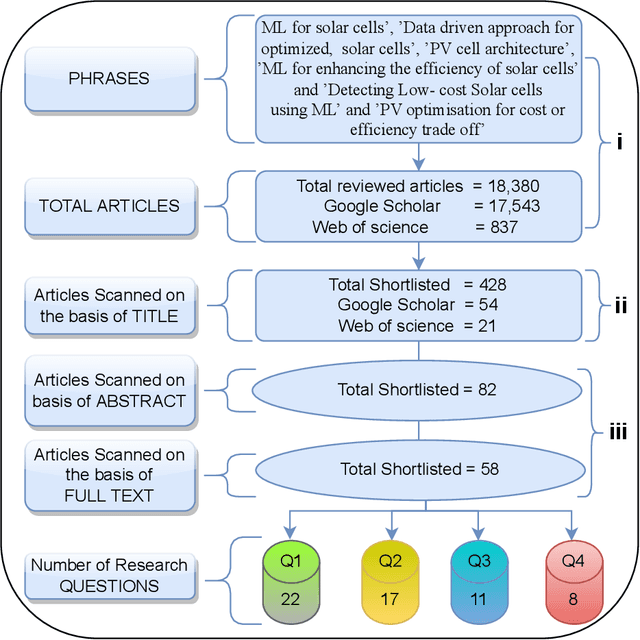

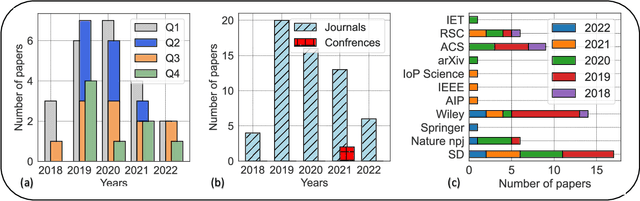

Dec 26, 2022

Abstract: Solar photovoltaic (PV) technology has emerged as an efficient and versatile method for converting the Sun's vast energy into electricity. Innovation in developing new materials and solar cell architectures is required to ensure lightweight, portable, and flexible miniaturized electronic devices operate for long periods with reduced battery demand. Recent advances in biomedical implantable and wearable devices have coincided with a growing interest in efficient energy-harvesting solutions. Such devices primarily rely on rechargeable batteries to satisfy their energy needs. Moreover, Artificial Intelligence (AI) and Machine Learning (ML) techniques are touted as game changers in energy harvesting, especially in solar energy materials. In this article, we systematically review a range of ML techniques for optimizing the performance of low-cost solar cells for miniaturized electronic devices. Our systematic review reveals that these ML techniques can expedite the discovery of new solar cell materials and architectures. In particular, this review covers a broad range of ML techniques targeted at producing low-cost solar cells. Moreover, we present a new method of classifying the literature according to data synthesis, ML algorithms, optimization, and fabrication process. In addition, our review reveals that the Gaussian Process Regression (GPR) ML technique with Bayesian Optimization (BO) enables the design of the most promising low-cost solar cell architecture. Therefore, our review is a critical evaluation of existing ML techniques and is presented to guide researchers in discovering the next generation of low-cost solar cells using ML techniques.

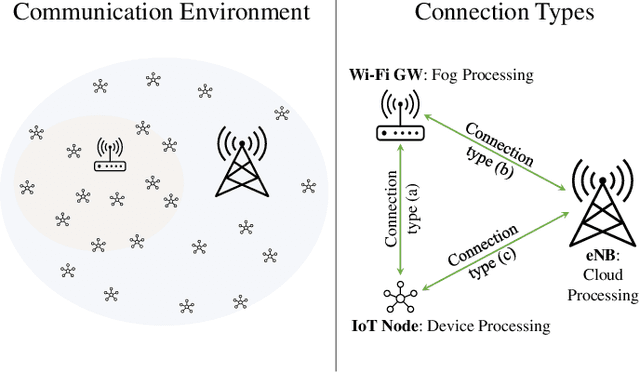

Context-Aware Wireless Connectivity and Processing Unit Optimization for IoT Networks

Apr 30, 2020

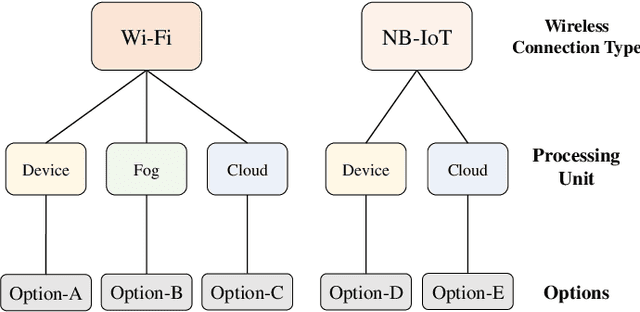

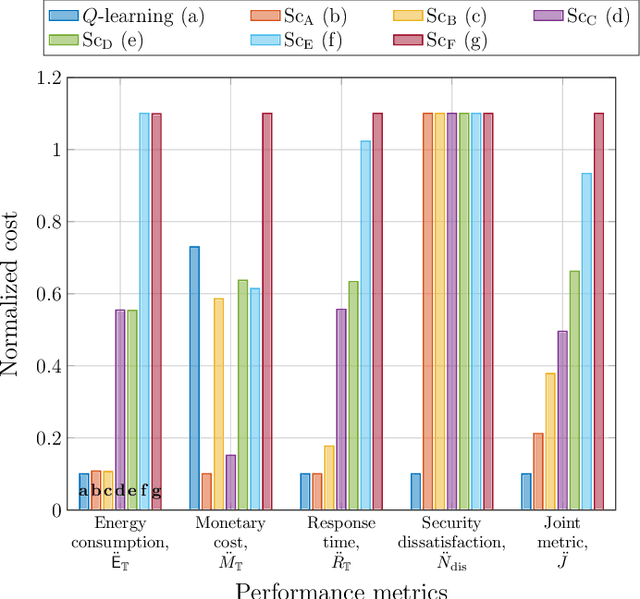

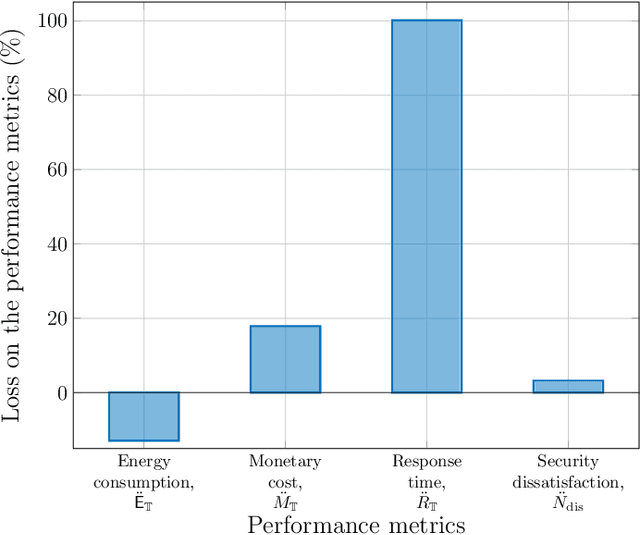

Abstract: A novel approach is presented in this work for context-aware connectivity and processing optimization of Internet of Things (IoT) networks. Different from the state-of-the-art approaches, the proposed approach simultaneously selects the best connectivity and processing unit (e.g., device, fog, and cloud) along with the percentage of data to be offloaded by jointly optimizing energy consumption, response time, security, and monetary cost. The proposed scheme employs a reinforcement learning algorithm and achieves significant gains compared to deterministic solutions. In particular, the requirements of IoT devices in terms of response time and security are taken as inputs along with the remaining battery level of the devices, and the developed algorithm returns an optimized policy. The results obtained show that only our method is able to meet the holistic multi-objective optimisation criteria, although the benchmark approaches may achieve better results on a particular metric at the cost of failing to reach the other targets. Thus, the proposed approach is a device-centric and context-aware solution that accounts for the monetary and battery constraints.
