Metin Ozturk

ISAC-over-NTN: HAPS-UAV Framework for Post-Disaster Responsive 6G Networks

Jan 21, 2026

Abstract:In disaster scenarios, ensuring both reliable communication and situational awareness becomes a critical challenge due to the partial or complete collapse of terrestrial networks. This paper proposes an integrated sensing and communication (ISAC) over non-terrestrial networks (NTN) architecture referred to as ISAC-over-NTN that integrates multiple uncrewed aerial vehicles (UAVs) and a high-altitude platform station (HAPS) to maintain resilient and reliable network operations in post-disaster conditions. We aim to achieve two main objectives: i) provide a reliable communication infrastructure, thereby ensuring the continuity of search-and-rescue activities and connecting people to their loved ones, and ii) detect users, such as those trapped under rubble or those who are mobile, using a Doppler-based mobility detection model. We employ an innovative beamforming method that simultaneously transmits data and detects Doppler-based mobility by integrating multi-user multiple-input multiple-output (MU-MIMO) communication and monostatic sensing within the same transmission chain. The results show that the proposed framework maintains reliable connectivity and achieves high detection accuracy of users in critical locations, reaching 90% motion detection sensitivity and 88% detection accuracy.
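To make the idea of Doppler-based mobility detection with monostatic sensing concrete, the following is a minimal sketch that uses only the textbook two-way Doppler relation f_d = 2 v f_c / c. It is not the paper's detection model: the function names, the 2 GHz carrier, and the 5 Hz decision threshold are all assumptions chosen for illustration.

```python
C = 299_792_458.0  # speed of light in m/s


def monostatic_doppler_shift(radial_speed_mps, carrier_hz):
    """Two-way Doppler shift in Hz for a monostatic sensor.

    The signal travels to the target and back, hence the factor of 2.
    """
    return 2.0 * radial_speed_mps * carrier_hz / C


def is_mobile(doppler_hz, threshold_hz):
    """Flag a user as mobile when |Doppler| exceeds a threshold (hypothetical rule)."""
    return abs(doppler_hz) > threshold_hz


# Assumed example: a person walking at 1.5 m/s, sensed at an assumed 2 GHz carrier.
shift = monostatic_doppler_shift(1.5, 2e9)
walking_detected = is_mobile(shift, threshold_hz=5.0)
stationary_detected = is_mobile(monostatic_doppler_shift(0.0, 2e9), threshold_hz=5.0)
```

Under these assumed values the walking user produces a shift of roughly 20 Hz and is flagged as mobile, while a stationary user yields zero shift and is not. A practical detector would also have to contend with noise, clutter, and platform motion, which this sketch ignores.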

A Multi-layer Non-Terrestrial Networks Architecture for 6G and Beyond under Realistic Conditions and with Practical Limitations

Feb 22, 2025

Abstract:In order to bolster the next generation of wireless networks, there has been a great deal of interest in non-terrestrial networks (NTN), including satellites, high altitude platform stations (HAPS), and uncrewed aerial vehicles (UAV). To unlock their full potential, these platforms can integrate advanced technologies such as reconfigurable intelligent surfaces (RIS) and next-generation multiple access (NGMA). However, in practical applications, transceivers often suffer from radio frequency (RF) impairments, which limit system performance. In this regard, this paper explores the potential of multi-layer NTN architecture to mitigate path propagation loss and improve network performance under hardware impairment limitations. First, we present current research activities in the NTN framework, including RIS, multiple access technologies, and hardware impairments. Next, we introduce a multi-layer NTN architecture with hardware limitations. This architecture includes HAPS super-macro base stations (HAPS-SMBS), UAVs equipped with passive or active transmissive RIS, and NGMA techniques, like non-orthogonal multiple access (NOMA), as the multiple access techniques to serve terrestrial devices. Additionally, we present and discuss potential use cases of the proposed multi-layer architecture considering hardware impairments. The multi-layer NTN architecture combined with advanced technologies, such as RIS and NGMA, demonstrates promising results; however, the performance degradation is attributed to RF impairments. Finally, we identify future research directions, including RF impairment mitigation, UAV power management, and antenna designs.

Strategic Demand-Planning in Wireless Networks: Can Generative-AI Save Spectrum and Energy?

Jul 02, 2024

Abstract:Wireless communications advance hand-in-hand with artificial intelligence (AI), indicating an interconnected advancement where each facilitates and benefits from the other. This synergy is particularly evident in the development of the sixth-generation technology standard for mobile networks (6G), envisioned to be AI-native. Generative-AI (GenAI), a novel technology capable of producing various types of outputs, including text, images, and videos, offers significant potential for wireless communications, with its distinctive features. Traditionally, conventional AI techniques have been employed for predictions, classifications, and optimization, while GenAI has more to offer. This article introduces the concept of strategic demand-planning through demand-labeling, demand-shaping, and demand-rescheduling. Accordingly, GenAI is proposed as a powerful tool to facilitate demand-shaping in wireless networks. More specifically, GenAI is used to compress and convert content of various kinds (e.g., from a higher bandwidth mode to a lower one, such as from a video to text), which subsequently enhances the performance of wireless networks in various usage scenarios such as cell-switching, user association and load balancing, interference management, and disaster scenario management. Therefore, GenAI can serve a function in saving energy and spectrum in wireless networks. With recent advancements in AI, including sophisticated algorithms like large-language-models and the development of more powerful hardware built exclusively for AI tasks, such as AI accelerators, the concept of demand-planning, particularly demand-shaping through GenAI, becomes increasingly relevant. Furthermore, recent efforts to make GenAI accessible on devices, such as user terminals, make the implementation of this concept even more straightforward and feasible.

Multi-Layer Network Formation through HAPS Base Station and Transmissive RIS-Equipped UAV

May 02, 2024

Abstract:In order to bolster future wireless networks, there has been a great deal of interest in non-terrestrial networks, especially aerial platform stations including the high altitude platform station (HAPS) and uncrewed aerial vehicles (UAV). These platforms can integrate advanced technologies such as reconfigurable intelligent surfaces (RIS) and non-orthogonal multiple access (NOMA). In this regard, this paper proposes a multi-layer network architecture to improve the performance of conventional HAPS super-macro base station (HAPS-SMBS)-assisted UAVs. The architecture includes a HAPS-SMBS, UAVs equipped with active transmissive RIS, and ground Internet of things devices. We also consider multiple-input single-output (MISO) technology, by employing multiple antennas at the HAPS-SMBS and a single antenna at the Internet of things devices. Additionally, we consider NOMA as the multiple access technology as well as the existence of hardware impairments as a practical limitation. In particular, we compare the proposed system model with three different scenarios: HAPS-SMBS-assisted UAVs that are equipped with active transmissive RIS and supported by a single-input single-output system, HAPS-SMBS-assisted UAVs that are equipped with amplify-and-forward relaying, and HAPS-SMBS-assisted UAVs equipped with passive transmissive RIS. Sum rate and energy efficiency are used as performance metrics, and the findings demonstrate that, in comparison to all benchmarks, the proposed system yields higher performance gain. Moreover, the hardware impairment limits the system performance at high transmit power levels.
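As a rough illustration of how a NOMA sum rate is computed, the sketch below evaluates the standard two-user downlink power-domain NOMA rates with successive interference cancellation (SIC) at the stronger user. It deliberately ignores the RIS, the MISO beamforming, and the hardware impairments considered in the paper; the function name and all numeric values are assumptions for illustration only.

```python
import math


def noma_two_user_rates(p_tx, gain_near, gain_far, alpha_far, noise):
    """Achievable rates (bit/s/Hz) for two-user downlink power-domain NOMA.

    alpha_far is the fraction of transmit power given to the far (weak) user.
    The far user treats the near user's signal as interference; the near user
    removes the far user's signal via SIC before decoding its own.
    """
    alpha_near = 1.0 - alpha_far
    sinr_far = (alpha_far * p_tx * gain_far) / (alpha_near * p_tx * gain_far + noise)
    snr_near = (alpha_near * p_tx * gain_near) / noise
    return math.log2(1.0 + snr_near), math.log2(1.0 + sinr_far)


# Assumed, normalized example values (not taken from the paper).
rate_near, rate_far = noma_two_user_rates(
    p_tx=10.0, gain_near=1.0, gain_far=0.1, alpha_far=0.8, noise=1.0
)
sum_rate = rate_near + rate_far
# One common energy-efficiency definition: sum rate per unit transmit power.
energy_efficiency = sum_rate / 10.0
```

Giving the larger power share to the far user is the usual NOMA convention, since that user has the weaker channel and cannot cancel the other user's signal.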

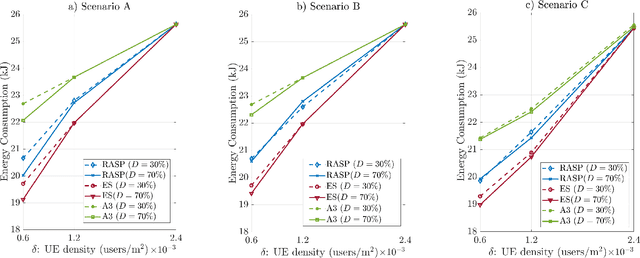

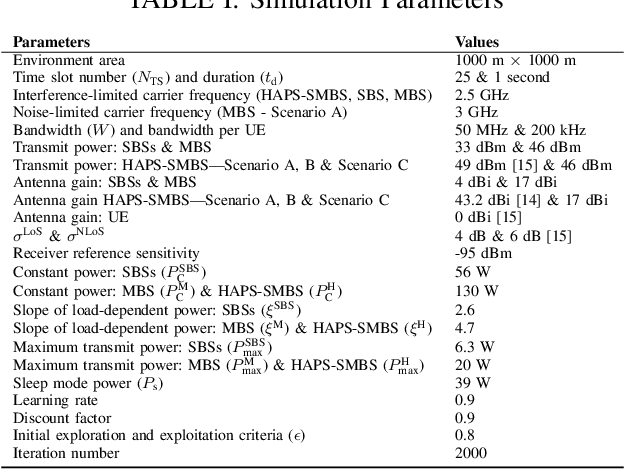

Cell Switching in HAPS-Aided Networking: How the Obscurity of Traffic Loads Affects the Decision

May 01, 2024

Abstract:This study aims to introduce the cell load estimation problem of cell switching approaches in cellular networks, presented specifically for a high-altitude platform station (HAPS)-assisted network. The problem arises from the fact that the traffic loads of sleeping base stations for the next time slot cannot be perfectly known, but they can rather be estimated, and any estimation error could result in divergence from the optimal decision, which subsequently affects the energy efficiency performance. The traffic loads of the sleeping base stations for the next time slot are required because the switching decisions are made proactively in the current time slot. Two different Q-learning algorithms are developed; one is full-scale, focusing solely on the performance, while the other one is lightweight and addresses the computational cost. Results confirm that the estimation error is capable of changing cell switching decisions, which yields performance divergence compared to no-error scenarios. Moreover, the developed Q-learning algorithms perform well since an insignificant difference (i.e., 0.3%) is observed between them and the optimum algorithm.

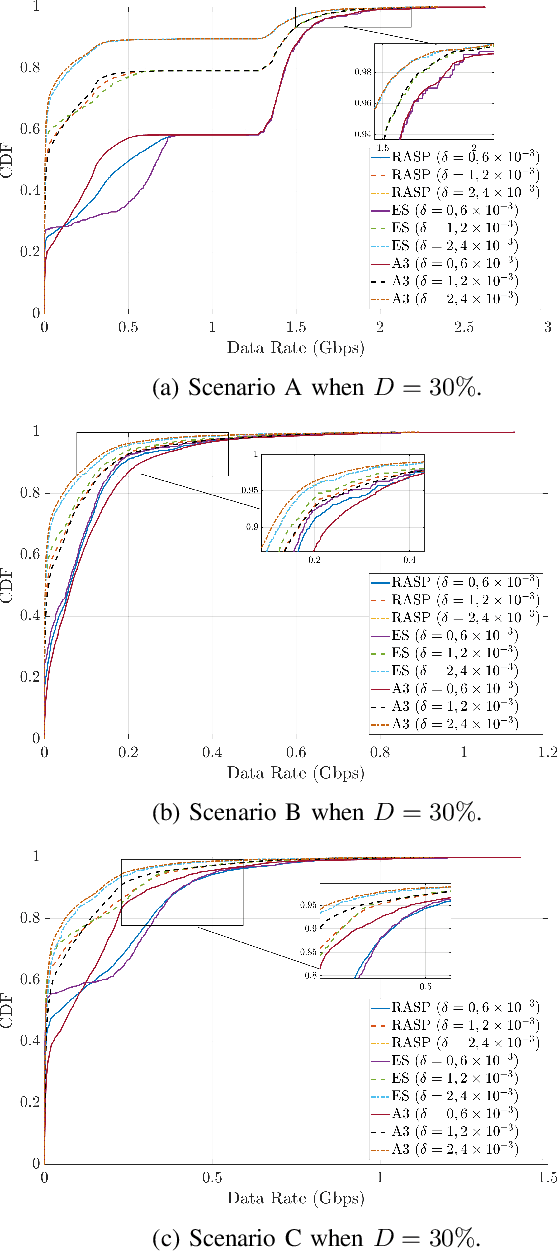

A Lightweight Machine Learning Approach for Delay-Aware Cell-Switching in 6G HAPS Networks

Feb 20, 2024

Abstract:This study investigates the integration of a high altitude platform station (HAPS), a non-terrestrial network (NTN) node, into the cell-switching paradigm for energy saving. By doing so, the sustainability and ubiquitous connectivity targets can be achieved. Besides, a delay-aware approach is also adopted, where the delay profiles of users are respected in such a way that we attempt to meet the latency requirements of users with a best-effort strategy. To this end, a novel, simple, and lightweight Q-learning algorithm is designed to address the cell-switching optimization problem. During the simulation campaigns, different interference scenarios and delay situations between base stations are examined in terms of energy consumption and quality-of-service (QoS), and the results confirm the efficacy of the proposed Q-learning algorithm.
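For readers unfamiliar with Q-learning, the following minimal sketch shows the generic tabular update rule and an epsilon-greedy action choice. It is not the algorithm designed in the paper: the state and action encodings (for example, a discretized load level as the state and an on/off choice for a small cell as the action), the reward, and the hyperparameters are all assumptions for illustration.

```python
import random


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[next_state])
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
    return q[state][action]


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy selection: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(q[state]))
    return max(range(len(q[state])), key=lambda a: q[state][a])


# Hypothetical toy table: 2 states (low/high load), 2 actions (cell off/on).
q_table = [[0.0, 0.0], [1.0, 2.0]]
updated = q_update(q_table, state=0, action=1, reward=1.0, next_state=1)
greedy = choose_action(q_table, state=1, epsilon=0.0, rng=random.Random(0))
```

In a cell-switching setting the reward would typically combine energy savings with a penalty for violated delay or QoS requirements, but the exact reward design here is left open because the abstract does not specify it.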

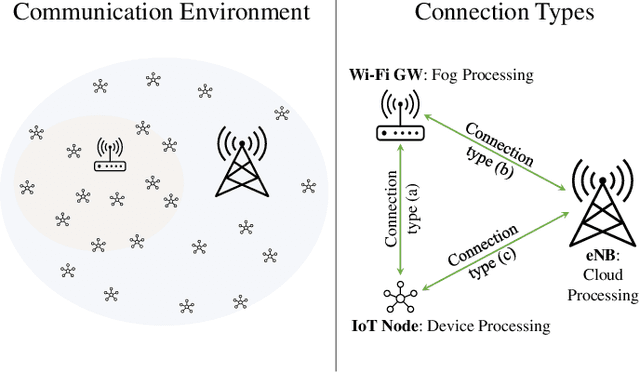

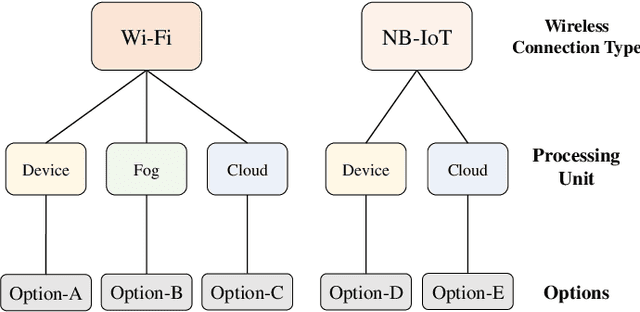

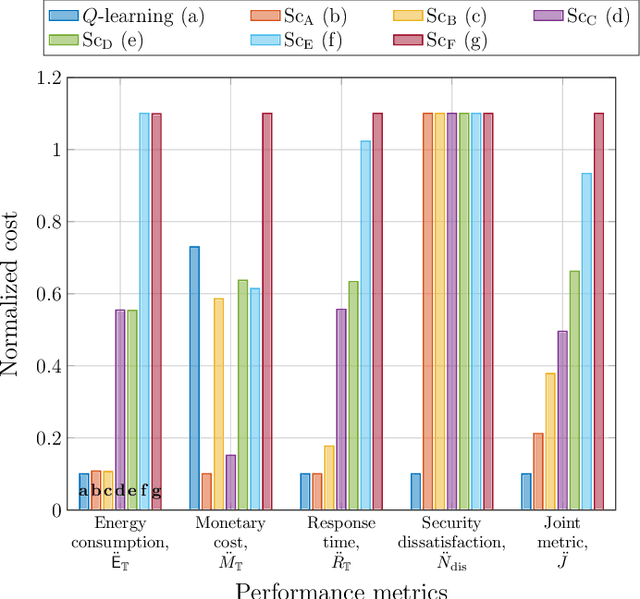

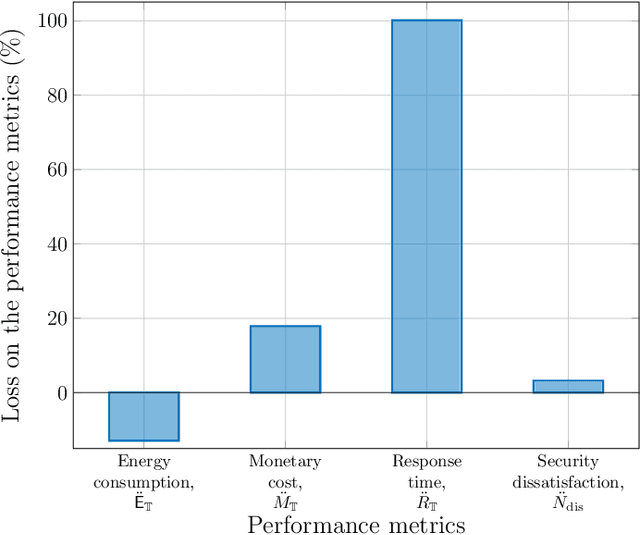

Context-Aware Wireless Connectivity and Processing Unit Optimization for IoT Networks

Apr 30, 2020

Abstract:A novel approach is presented in this work for context-aware connectivity and processing optimization of Internet of things (IoT) networks. Different from the state-of-the-art approaches, the proposed approach simultaneously selects the best connectivity and processing unit (e.g., device, fog, and cloud) along with the percentage of data to be offloaded by jointly optimizing energy consumption, response-time, security, and monetary cost. The proposed scheme employs a reinforcement learning algorithm, and manages to achieve significant gains compared to deterministic solutions. In particular, the requirements of IoT devices in terms of response-time and security are taken as inputs along with the remaining battery level of the devices, and the developed algorithm returns an optimized policy. The results obtained show that only our method is able to meet the holistic multi-objective optimisation criteria, although the benchmark approaches may achieve better results on a particular metric at the cost of failing to reach the other targets. Thus, the proposed approach is a device-centric and context-aware solution that accounts for the monetary and battery constraints.
