FedTrees: A Novel Computation-Communication Efficient Federated Learning Framework Investigated in Smart Grids

Sep 30, 2022

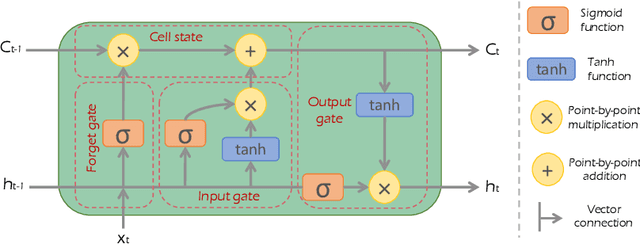

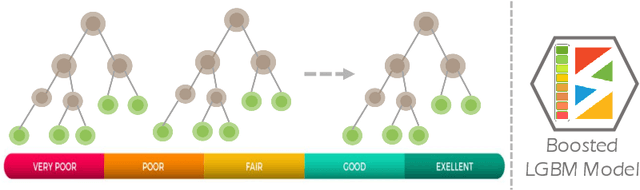

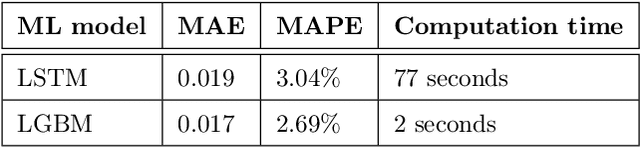

Smart energy performance monitoring and optimisation at the supplier and consumer levels is essential to realising smart cities. A more sustainable energy management plan depends on more accurate energy forecasting. Next-generation smart meters can measure, record, and report energy consumption data, which can then be used to train machine learning (ML) models that predict energy needs. However, sharing fine-grained energy data and performing centralised learning may compromise users' privacy and leave them vulnerable to several attacks. This study addresses the issue with federated learning (FL), an emerging technique that trains ML models at the user level, where the data resides. We introduce FedTrees, a new, lightweight FL framework that benefits from the outstanding features of ensemble learning. Furthermore, we develop a delta-based early stopping algorithm that monitors FL training and stops it when it no longer needs to continue. Simulation results demonstrate that FedTrees outperforms the widely used federated averaging (FedAvg) framework and the baseline Persistence model in providing accurate energy forecasting patterns, while taking only 2% of the computation time and 13% of the communication rounds compared to FedAvg, saving considerable computation and communication resources.
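The abstract does not spell out the delta-based early stopping rule, so the following is only a minimal sketch of one common way such a rule can work, not the authors' algorithm. It assumes a per-round validation loss is available, and the names `delta_early_stop`, `min_delta`, and `patience` are hypothetical: training stops once the loss has failed to improve on the best value by more than `min_delta` for `patience` consecutive rounds.

```python
from typing import Iterable, Optional


def delta_early_stop(
    losses: Iterable[float],
    min_delta: float = 1e-3,  # assumed threshold for a "meaningful" improvement
    patience: int = 3,        # assumed number of stale rounds tolerated
) -> Optional[int]:
    """Return the 1-based round at which training would stop, or None.

    Illustrative only: a generic delta/patience rule, not the rule
    defined in the FedTrees paper.
    """
    best = float("inf")  # lowest loss seen so far
    stale = 0            # consecutive rounds without meaningful improvement
    for round_idx, loss in enumerate(losses, start=1):
        if best - loss > min_delta:
            # The loss improved by more than the delta: keep training.
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return round_idx
    return None  # the stopping criterion was never met


if __name__ == "__main__":
    # Hypothetical per-round validation losses, for illustration only.
    history = [1.0, 0.8, 0.7, 0.6995, 0.6993, 0.6992, 0.5]
    print(delta_early_stop(history))
```

In a federated setting, a check of this kind would typically run on the server after each aggregation round, so that stopping early saves both client computation and the communication of further rounds.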
