Adrian Sandru

Feature-level augmentation to improve robustness of deep neural networks to affine transformations

Feb 15, 2022

Abstract:Recent studies revealed that convolutional neural networks do not generalize well to small image transformations, e.g. rotations by a few degrees or translations of a few pixels. To improve the robustness to such transformations, we propose to introduce data augmentation at intermediate layers of the neural architecture, in addition to the common data augmentation applied on the input images. By introducing small perturbations to activation maps (features) at various levels, we develop the capacity of the neural network to cope with such transformations. We conduct experiments on three image classification benchmarks (Tiny ImageNet, Caltech-256 and Food-101), considering two different convolutional architectures (ResNet-18 and DenseNet-121). When compared with two state-of-the-art stabilization methods, the empirical results show that our approach consistently attains the best trade-off between accuracy and mean flip rate.

A realistic approach to generate masked faces applied on two novel masked face recognition data sets

Sep 03, 2021

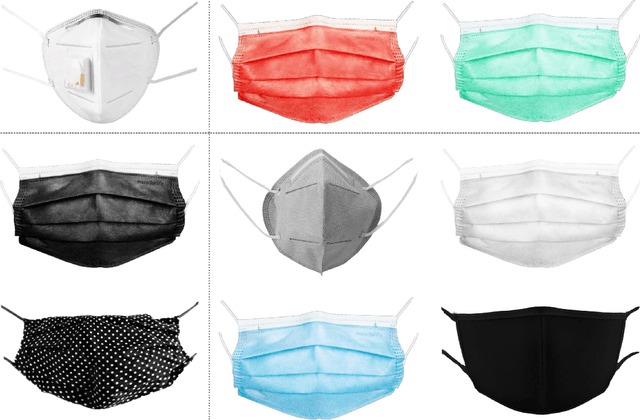

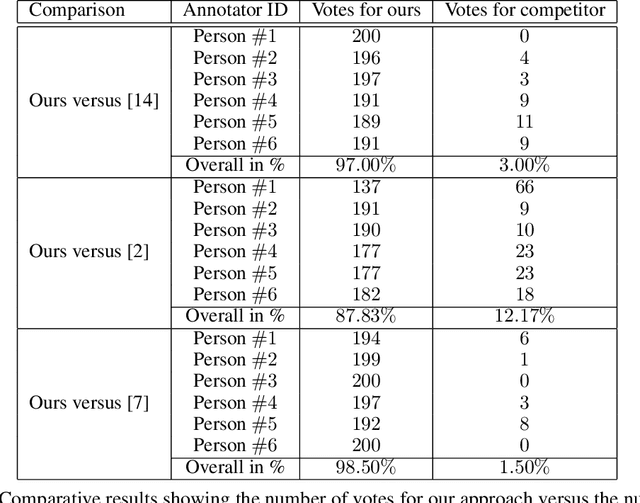

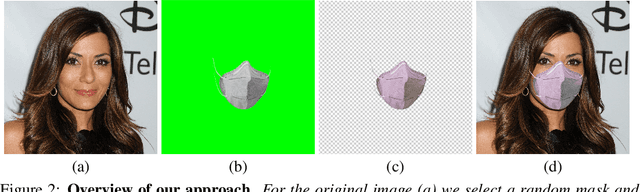

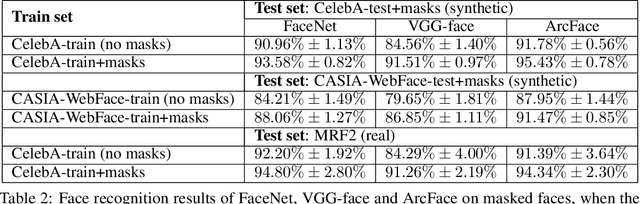

Abstract:The COVID-19 pandemic raises the problem of adapting face recognition systems to the new reality, where people may wear surgical masks to cover their noses and mouths. Traditional data sets (e.g., CelebA, CASIA-WebFace) used for training these systems were released before the pandemic, so they now seem unsuited due to the lack of examples of people wearing masks. We propose a method for enhancing data sets containing faces without masks by creating synthetic masks and overlaying them on faces in the original images. Our method relies on Spark AR Studio, a developer program made by Facebook that is used to create Instagram face filters. In our approach, we use 9 masks of different colors, shapes and fabrics. We employ our method to generate a number of 445,446 (90%) samples of masks for the CASIA-WebFace data set and 196,254 (96.8%) masks for the CelebA data set, releasing the mask images at https://github.com/securifai/masked_faces. We show that our method produces significantly more realistic training examples of masks overlaid on faces by asking volunteers to qualitatively compare it to other methods or data sets designed for the same task. We also demonstrate the usefulness of our method by evaluating state-of-the-art face recognition systems (FaceNet, VGG-face, ArcFace) trained on the enhanced data sets and showing that they outperform equivalent systems trained on the original data sets (containing faces without masks), when the test benchmark contains masked faces.

SuPEr-SAM: Using the Supervision Signal from a Pose Estimator to Train a Spatial Attention Module for Personal Protective Equipment Recognition

Sep 25, 2020

Abstract:We propose a deep learning method to automatically detect personal protective equipment (PPE), such as helmets, surgical masks, reflective vests, boots and so on, in images of people. Typical approaches for PPE detection based on deep learning are (i) to train an object detector for items such as those listed above or (ii) to train a person detector and a classifier that takes the bounding boxes predicted by the detector and discriminates between people wearing and people not wearing the corresponding PPE items. We propose a novel and accurate approach that uses three components: a person detector, a body pose estimator and a classifier. Our novelty consists in using the pose estimator only at training time, to improve the prediction performance of the classifier. We modify the neural architecture of the classifier by adding a spatial attention mechanism, which is trained using supervision signal from the pose estimator. In this way, the classifier learns to focus on PPE items, using knowledge from the pose estimator with almost no computational overhead during inference.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge