Adam Bignold

Broad-persistent Advice for Interactive Reinforcement Learning Scenarios

Oct 11, 2022

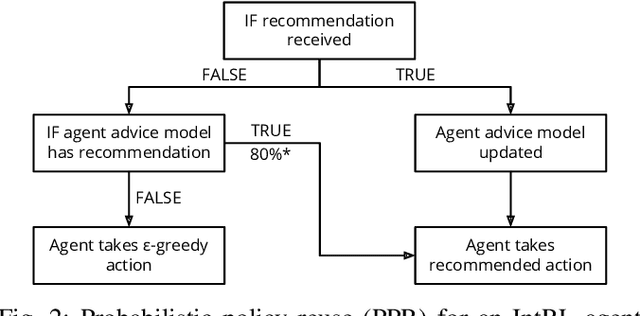

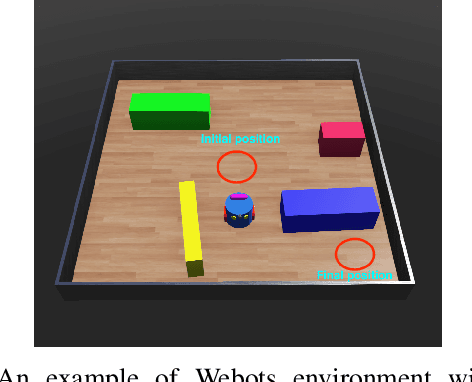

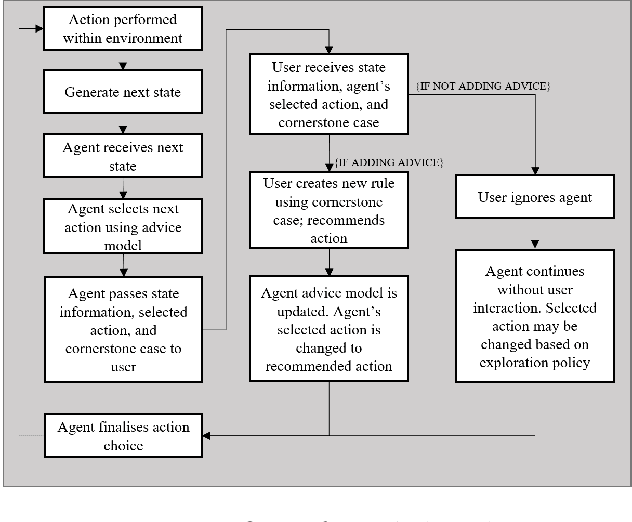

Abstract: The use of interactive advice in reinforcement learning scenarios speeds up the learning process for autonomous agents. Current interactive reinforcement learning research has been limited to real-time interactions that offer user advice relevant to the current state only. Moreover, the information provided by each interaction is not retained and is instead discarded by the agent after a single use. In this paper, we present a method for retaining and reusing provided knowledge, allowing trainers to give general advice relevant to more than just the current state. The results show that the use of broad-persistent advice substantially improves the performance of the agent while reducing the number of interactions required from the trainer.

Persistent Rule-based Interactive Reinforcement Learning

Feb 04, 2021

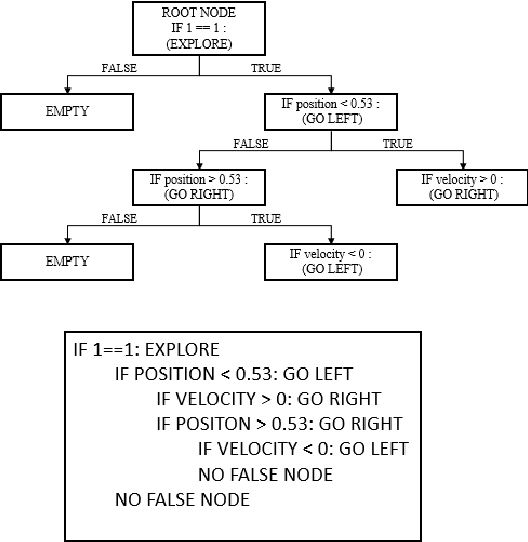

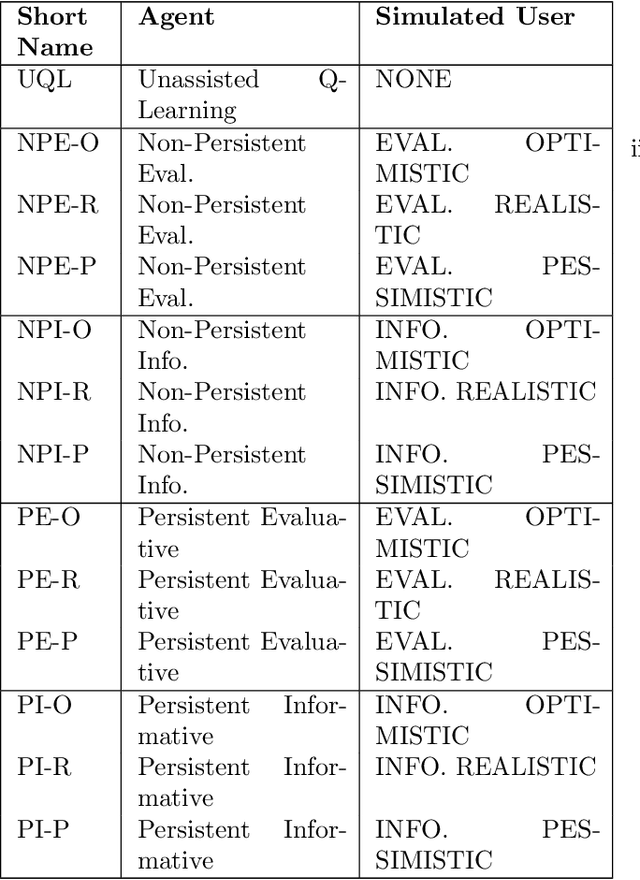

Abstract: Interactive reinforcement learning speeds up the learning process in autonomous agents by including a human trainer who provides extra information to the agent in real time. Current interactive reinforcement learning research has been limited to interactions that offer advice relevant to the current state only. Additionally, the information provided by each interaction is not retained and is instead discarded by the agent after a single use. In this work, we propose a persistent rule-based interactive reinforcement learning approach, i.e., a method for retaining and reusing provided knowledge, allowing trainers to give general advice relevant to more than just the current state. Our experimental results show that persistent advice substantially improves the performance of the agent while reducing the number of interactions required from the trainer. Moreover, rule-based advice has a performance impact similar to that of state-based advice, but with a substantially reduced interaction count.
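The idea of retaining advice and reusing it, either per state or as a general rule, can be illustrated with a minimal sketch. This is not the authors' implementation: the class `PersistentAdvisor`, the function `select_action`, the grid-world state encoding, and the choice to always follow recalled advice are all assumptions made for illustration.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

State = Tuple[int, int]  # hypothetical grid-world state (x, y)
Action = str


class PersistentAdvisor:
    """Hypothetical store that retains trainer advice for later reuse."""

    def __init__(self) -> None:
        self.state_advice: Dict[State, Action] = {}
        self.rules: List[Tuple[Callable[[State], bool], Action]] = []
        self.interactions = 0  # number of times the trainer was involved

    def advise_state(self, state: State, action: Action) -> None:
        # State-based advice: applies to exactly one state.
        self.state_advice[state] = action
        self.interactions += 1

    def advise_rule(self, condition: Callable[[State], bool], action: Action) -> None:
        # Rule-based advice: applies to every state matching the condition.
        self.rules.append((condition, action))
        self.interactions += 1

    def recall(self, state: State) -> Optional[Action]:
        # Prefer advice given for this exact state, then fall back to rules.
        if state in self.state_advice:
            return self.state_advice[state]
        for condition, action in self.rules:
            if condition(state):
                return action
        return None


def select_action(
    state: State,
    q_values: Dict[Tuple[State, Action], float],
    advisor: PersistentAdvisor,
    actions: List[Action],
    epsilon: float = 0.1,
) -> Action:
    # Assumption: recalled advice is always followed; otherwise epsilon-greedy.
    advised = advisor.recall(state)
    if advised is not None:
        return advised
    if random.random() < epsilon:
        return random.choice(actions)
    return max(actions, key=lambda a: q_values.get((state, a), 0.0))
```

In this sketch a single call to `advise_rule` covers every state that satisfies the condition, which is one way to picture why rule-based advice could need fewer trainer interactions than state-based advice.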

Human Engagement Providing Evaluative and Informative Advice for Interactive Reinforcement Learning

Sep 21, 2020

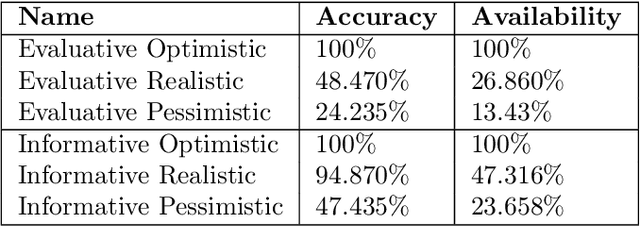

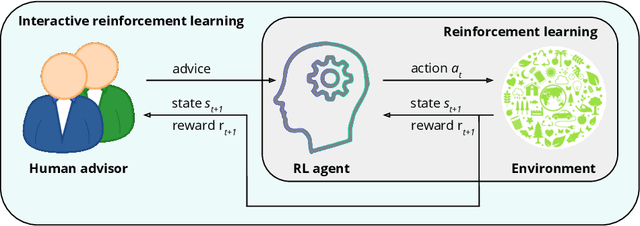

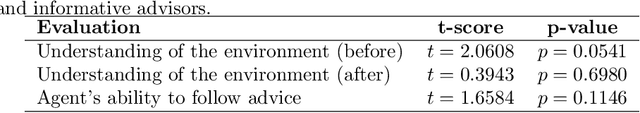

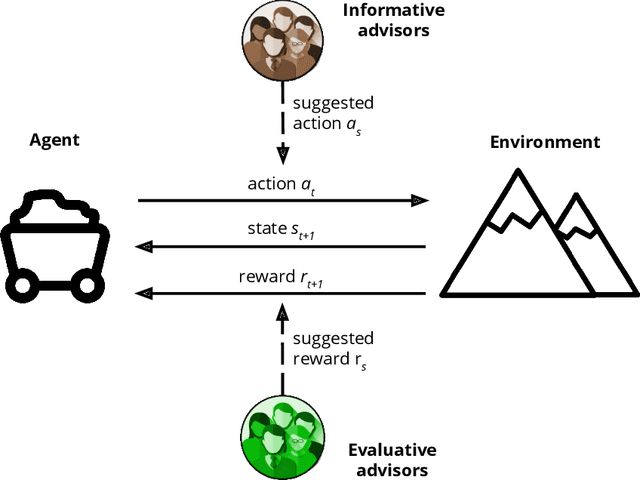

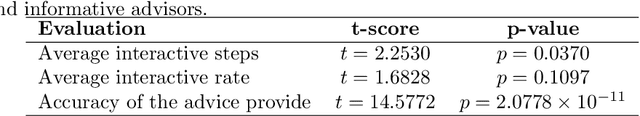

Abstract: Reinforcement learning is an approach used by intelligent agents to autonomously learn new skills. Although reinforcement learning has been demonstrated to be an effective learning approach in several different contexts, a common drawback is the time needed to satisfactorily learn a task, especially in large state-action spaces. To address this issue, interactive reinforcement learning proposes the use of externally sourced information to speed up the learning process. Up to now, different information sources have been used to give advice to the learner agent, among them human-sourced advice. When interacting with a learner agent, humans may provide either evaluative or informative advice. From the agent's perspective, these styles of interaction are commonly referred to as reward-shaping and policy-shaping, respectively. Evaluative advice requires the human to provide feedback on the prior action performed, while informative advice requires the human to advise on the best action to select for a given situation. Prior research has focused on the effect of human-sourced advice on the interactive reinforcement learning process, specifically aiming to improve the learning speed of the agent while reducing the engagement required from the human. This work presents an experimental setup for a human trial designed to compare the methods people use to deliver advice in terms of human engagement. The results show that users giving informative advice to the learner agents provide more accurate advice, are willing to assist the learner agent for a longer time, and provide more advice per episode. Additionally, self-evaluation from participants using the informative approach indicates that they perceive the agent's ability to follow the advice as higher and, therefore, feel their own advice to be more accurate than do participants providing evaluative advice.
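The distinction between evaluative advice (reward-shaping) and informative advice (policy-shaping) can be sketched with a tabular Q-learning update. The code below is an illustrative assumption rather than the setup used in the human trial: the function names, the additive form of the shaped reward, and the rule that a human suggestion always overrides the greedy choice are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

QTable = Dict[Tuple[str, str], float]


def q_update(
    q: QTable,
    state: str,
    action: str,
    reward: float,
    next_state: str,
    actions: List[str],
    alpha: float = 0.5,
    gamma: float = 0.9,
) -> None:
    # Standard tabular Q-learning update.
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)


def shaped_reward(env_reward: float, human_feedback: Optional[float]) -> float:
    # Evaluative advice (assumed additive): the human rates the prior action,
    # and that rating is added to the environment reward.
    return env_reward + (human_feedback or 0.0)


def choose_action(
    q: QTable,
    state: str,
    actions: List[str],
    human_suggestion: Optional[str] = None,
) -> str:
    # Informative advice (assumed to override): the human names the action
    # to take in this state; otherwise the agent acts greedily.
    if human_suggestion is not None:
        return human_suggestion
    return max(actions, key=lambda a: q.get((state, a), 0.0))
```

In this sketch evaluative advice arrives after an action and changes what the agent learns from it, whereas informative advice arrives before an action and changes which action is taken.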

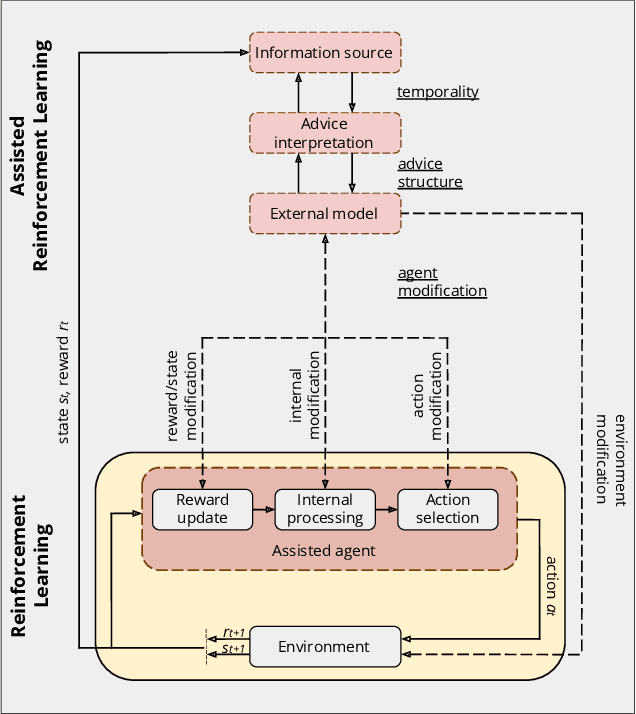

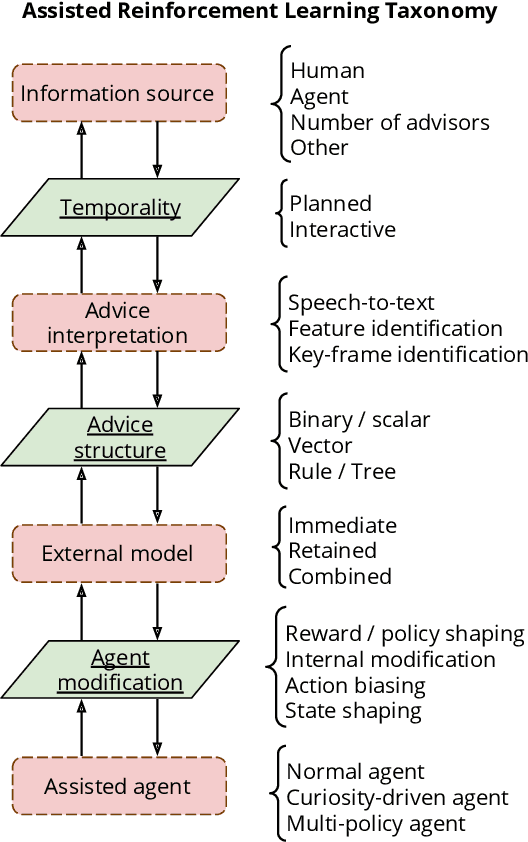

A Conceptual Framework for Externally-influenced Agents: An Assisted Reinforcement Learning Review

Jul 03, 2020

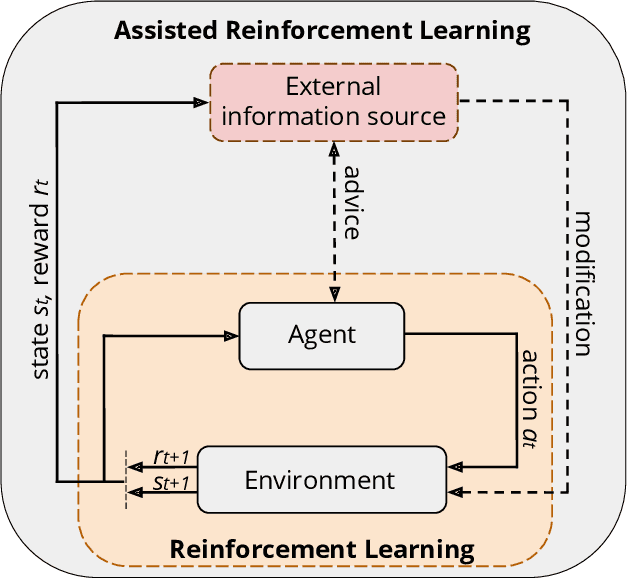

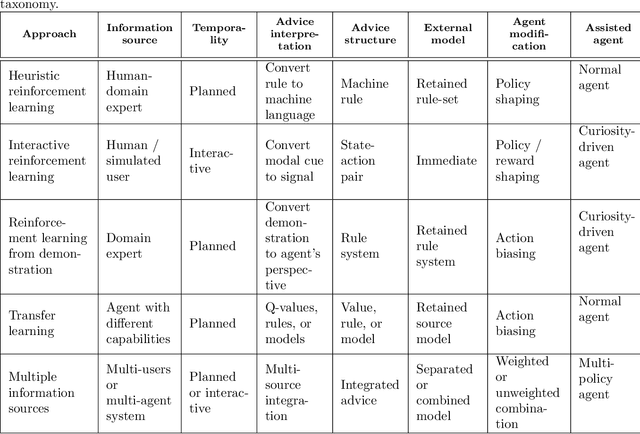

Abstract: A long-term goal of reinforcement learning agents is to be able to perform tasks in complex real-world scenarios. The use of external information is one way of scaling agents to more complex problems. However, there is a general lack of collaboration or interoperability between different approaches using external information. In this work, we propose a conceptual framework and taxonomy for assisted reinforcement learning, aimed at fostering such collaboration by classifying and comparing various methods that use external information in the learning process. The proposed taxonomy details the relationship between the external information source and the learner agent, highlighting the process of information decomposition, structure, and retention, and how the information can be used to influence agent learning. In addition to reviewing state-of-the-art methods, we identify current streams of reinforcement learning that use external information to improve the agent's performance and its decision-making process. These include heuristic reinforcement learning, interactive reinforcement learning, learning from demonstration, transfer learning, and learning from multiple sources, among others. These streams of reinforcement learning operate with the shared objective of scaffolding the learner agent. Lastly, we discuss further possibilities for future work in the field of assisted reinforcement learning systems.