Hung Son Nguyen

Speeding Up Recommender Systems Using Association Rules

Nov 16, 2022

Abstract: Recommender systems are considered one of the most rapidly growing branches of Artificial Intelligence, and the demand for more efficient techniques to generate recommendations has become urgent. Many recommendations become useless if there is a delay in generating and showing them to the user. Therefore, we focus on improving the speed of recommender systems without impacting their accuracy. In this paper, we propose a novel recommender system based on Factorization Machines and Association Rules (FMAR). We introduce an approach that generates association rules using two algorithms: (i) apriori and (ii) frequent pattern (FP) growth. These association rules are used to reduce the number of items passed to the factorization machines recommendation model. We show that FMAR significantly decreases the number of new items for which the recommender system has to predict and hence decreases the time required to generate recommendations. While building the FMAR tool, we concentrate on striking a balance between prediction time and the accuracy of the generated recommendations, to ensure that accuracy is not significantly impacted compared with using factorization machines without association rules.
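The candidate-reduction idea in this abstract can be illustrated with a minimal Python sketch. It is not the paper's implementation: instead of apriori or FP-growth it mines only single-item rules A -> B by direct pair counting, and the factorization machine is replaced by an arbitrary `score` callable. All names (`mine_pair_rules`, `candidate_items`, `recommend`), the thresholds, and the toy baskets are hypothetical.

```python
from collections import Counter
from itertools import combinations


def mine_pair_rules(baskets, min_support=0.3, min_confidence=0.5):
    """Mine single-item rules A -> B by pair counting.

    Simplified stand-in for apriori / FP-growth (assumption: only
    one-item antecedents and consequents are considered).
    Returns {antecedent: set of consequents}.
    """
    n = len(baskets)
    item_counts = Counter()
    pair_counts = Counter()
    for basket in baskets:
        items = set(basket)
        item_counts.update(items)
        pair_counts.update(combinations(sorted(items), 2))

    rules = {}
    for (a, b), count in pair_counts.items():
        if count / n < min_support:
            continue  # the pair is not frequent enough
        for ante, cons in ((a, b), (b, a)):
            if count / item_counts[ante] >= min_confidence:
                rules.setdefault(ante, set()).add(cons)
    return rules


def candidate_items(history, rules):
    """Unseen items that some rule links to the user's history."""
    seen = set(history)
    candidates = set()
    for item in seen:
        candidates |= rules.get(item, set())
    return candidates - seen


def recommend(history, rules, score, top_k=2):
    """Score only the shortlisted items; `score` stands in for the FM model."""
    shortlist = candidate_items(history, rules)
    return sorted(shortlist, key=lambda item: (-score(item), item))[:top_k]


# Toy data (hypothetical): five baskets over five distinct items.
baskets = [
    ["bread", "butter", "jam"],
    ["bread", "butter"],
    ["bread", "milk"],
    ["milk", "cereal"],
    ["bread", "butter", "milk"],
]
rules = mine_pair_rules(baskets)
shortlist = candidate_items(["bread"], rules)
```

With these toy baskets, a user who has only seen `bread` gets a shortlist of two items rather than all four unseen ones, so the expensive model is called on fewer candidates. The speed versus accuracy trade-off the abstract mentions corresponds here to the choice of `min_support` and `min_confidence`: stricter thresholds shrink the shortlist further but may drop relevant items.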

Broad-persistent Advice for Interactive Reinforcement Learning Scenarios

Oct 11, 2022

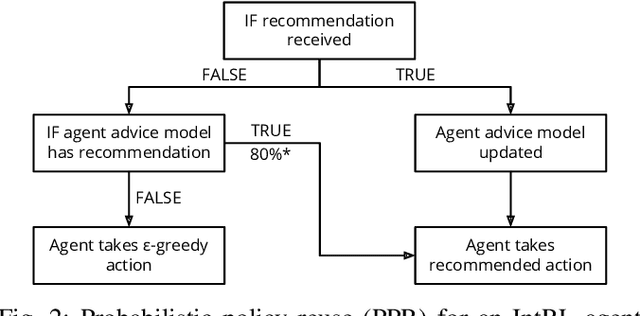

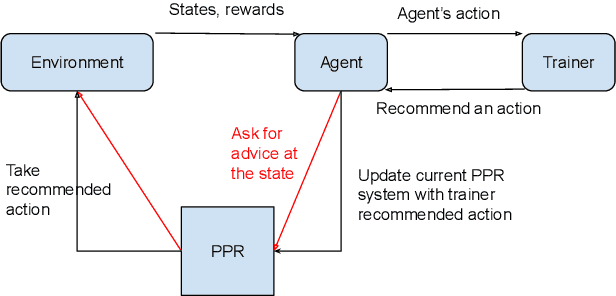

Abstract: The use of interactive advice in reinforcement learning scenarios speeds up the learning process for autonomous agents. Current interactive reinforcement learning research has been limited to real-time interactions that offer user advice relevant to the current state only. Moreover, the information provided by each interaction is not retained; the agent discards it after a single use. In this paper, we present a method for retaining and reusing provided knowledge, allowing trainers to give general advice relevant to more than just the current state. The results show that the use of broad-persistent advice substantially improves the performance of the agent while reducing the number of interactions required of the trainer.

A Broad-persistent Advising Approach for Deep Interactive Reinforcement Learning in Robotic Environments

Oct 15, 2021

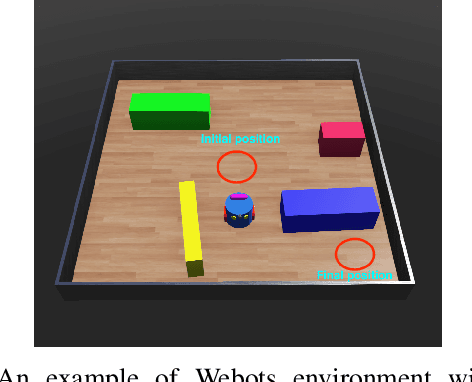

Abstract: Deep Reinforcement Learning (DeepRL) methods have been widely used in robotics to learn about the environment and acquire behaviors autonomously. Deep Interactive Reinforcement Learning (DeepIRL) adds interactive feedback from an external trainer or expert, who gives advice to help learners choose actions and so speeds up the learning process. However, current research has been limited to interactions that offer actionable advice for only the current state of the agent. Additionally, the agent discards the information after a single use, so the same advice process must be repeated when the state is revisited. In this paper, we present Broad-persistent Advising (BPA), an approach that retains and reuses the processed information. It not only helps trainers give more general advice relevant to similar states instead of only the current state, but also allows the agent to speed up the learning process. We test the proposed approach in two continuous robotic scenarios, namely a cart-pole balancing task and a simulated robot navigation task. The results show that the performance of the agent using BPA improves while maintaining the number of interactions required of the trainer, compared with the DeepIRL approach.
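The idea of retaining advice and reusing it in similar states can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the BPA method itself: states are grouped by rounding each coordinate into fixed-width bins (the abstract does not say how similar states are identified), remembered advice is always followed, and the names `PersistentAdvice`, `choose_action`, `bin_width`, and the toy trainer are hypothetical.

```python
class PersistentAdvice:
    """Stores trainer advice per group of similar continuous states."""

    def __init__(self, bin_width=0.5):
        # Assumption: states in the same fixed-width bin count as "similar".
        self.bin_width = bin_width
        self._advice = {}

    def _key(self, state):
        # Map a continuous state to a discrete bin index per coordinate.
        return tuple(round(x / self.bin_width) for x in state)

    def advise(self, state, action):
        """Retain the advised action for every state in the same bin."""
        self._advice[self._key(state)] = action

    def recall(self, state):
        """Return retained advice for this state's bin, or None."""
        return self._advice.get(self._key(state))


def choose_action(state, memory, ask_trainer, policy):
    """Prefer retained advice, then fresh advice, then the agent's own policy."""
    remembered = memory.recall(state)
    if remembered is not None:
        return remembered  # reuse: no new interaction with the trainer
    advice = ask_trainer(state)
    if advice is not None:
        memory.advise(state, advice)  # persist for later visits
        return advice
    return policy(state)


# Toy usage: count how often the (hypothetical) trainer is consulted.
calls = []


def trainer(state):
    calls.append(state)
    return "left"


memory = PersistentAdvice(bin_width=0.5)
first = choose_action((0.1, 1.0), memory, trainer, policy=lambda s: "right")
second = choose_action((0.2, 0.9), memory, trainer, policy=lambda s: "right")
```

In this toy run the second state falls into the same bin as the first, so the stored advice is reused and the trainer is consulted only once. A real agent would likely need a softer reuse rule, since always following stored advice leaves no room to recover from advice that generalizes badly.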
