Abhinav Prakash

Sample-Efficient Language Model for Hinglish Conversational AI

Apr 27, 2025

Abstract: This paper presents our process for developing a sample-efficient language model for a conversational Hinglish chatbot. Hinglish, a code-mixed language that combines Hindi and English, presents a unique computational challenge due to inconsistent spelling, a lack of standardization, and the limited quality of available conversational data. This work evaluates multiple pre-trained cross-lingual language models, including Gemma3-4B and Qwen2.5-7B, and employs fine-tuning techniques to improve performance on Hinglish conversational tasks. The proposed approach integrates synthetically generated dialogues with insights from existing Hinglish datasets to address data scarcity. Experimental results demonstrate that models with fewer parameters, when appropriately fine-tuned on high-quality code-mixed data, can achieve competitive performance for Hinglish conversation generation while maintaining computational efficiency.

LLM-driven Constrained Copy Generation through Iterative Refinement

Apr 14, 2025

Abstract: Crafting a marketing message (copy), or copywriting, is a challenging generation task, as the copy must adhere to various constraints. Copy creation is inherently iterative for humans, starting with an initial draft followed by successive refinements. However, manual copy creation is time-consuming and expensive, resulting in only a few copies for each use case. This limitation restricts our ability to personalize content to customers. In contrast to the manual approach, LLMs can generate copies quickly, but, as with human drafts, the generated content does not consistently meet all the constraints on the first attempt. While recent studies have shown promise in improving constrained generation through iterative refinement, they have primarily addressed tasks with only a few simple constraints. Consequently, the effectiveness of iterative refinement for tasks such as copy generation, which involves many intricate constraints, remains unclear. To address this gap, we propose an LLM-based end-to-end framework for scalable copy generation using iterative refinement. To the best of our knowledge, this is the first study to address multiple challenging constraints simultaneously in copy generation. Examples of these constraints include length, topics, keywords, preferred lexical ordering, and tone of voice. We demonstrate the performance of our framework by creating copies for e-commerce banners for three different use cases of varying complexity. Our results show that iterative refinement increases the copy success rate by 16.25% to 35.91% across use cases. Furthermore, the copies generated using our approach outperformed manually created content in multiple pilot studies using a multi-armed bandit framework. The winning copy improved the click-through rate by 38.5% to 45.21%.
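
The draft, check, and refine loop that this abstract describes can be illustrated with a minimal sketch. It is not the authors' framework: the checker functions, the `refine` helper, and the `toy_generator` stand-in for an LLM call are all hypothetical names introduced here, and only two of the listed constraint types (length and keywords) are covered.

```python
from typing import Callable, Dict, List

# A checker returns "" when the constraint is met, otherwise a feedback message.
Checker = Callable[[str], str]


def max_length(limit: int) -> Checker:
    """Constraint: the copy must not exceed `limit` characters."""
    def check(copy: str) -> str:
        return "" if len(copy) <= limit else f"Shorten to at most {limit} characters."
    return check


def must_include(keyword: str) -> Checker:
    """Constraint: the copy must mention `keyword` (case-insensitive)."""
    def check(copy: str) -> str:
        return "" if keyword.lower() in copy.lower() else f"Include the keyword '{keyword}'."
    return check


def refine(generate: Callable[[List[str]], str],
           checkers: List[Checker],
           max_rounds: int = 3) -> Dict[str, object]:
    """Regenerate the copy until every checker passes or rounds run out."""
    feedback: List[str] = []
    copy = ""
    for round_no in range(1, max_rounds + 1):
        # The generator sees the feedback from the previous round (empty at first).
        copy = generate(feedback)
        feedback = [msg for msg in (check(copy) for check in checkers) if msg]
        if not feedback:
            return {"copy": copy, "rounds": round_no, "ok": True}
    return {"copy": copy, "rounds": max_rounds, "ok": False}


def toy_generator(feedback: List[str]) -> str:
    """Hypothetical stand-in for an LLM call; a real system would prompt a model."""
    if not feedback:
        return "Discover our brand-new collection of summer essentials today"
    return "Summer sale: fresh picks"


result = refine(toy_generator, [max_length(30), must_include("sale")])
```

In this toy run the first draft fails both checks, the feedback is passed back to the generator, and the second draft passes. A real deployment would replace `toy_generator` with a model call that folds the feedback messages into the prompt.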

Chaining text-to-image and large language model: A novel approach for generating personalized e-commerce banners

Feb 28, 2024

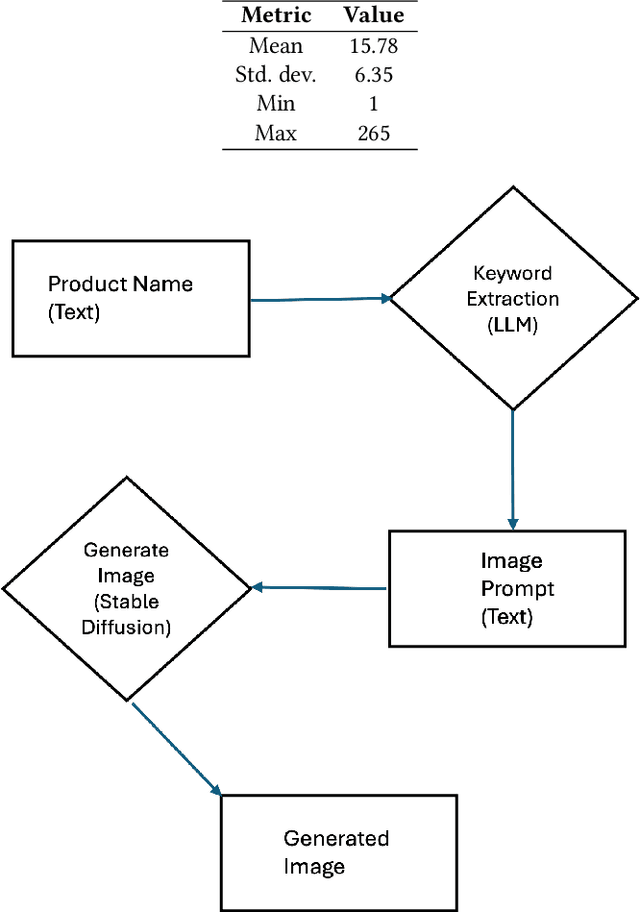

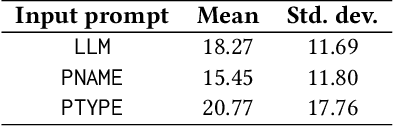

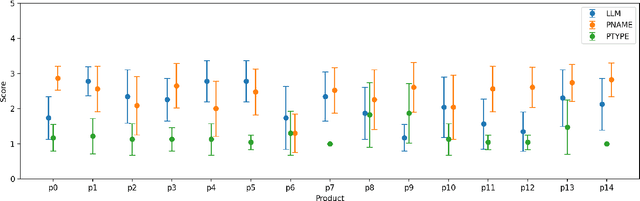

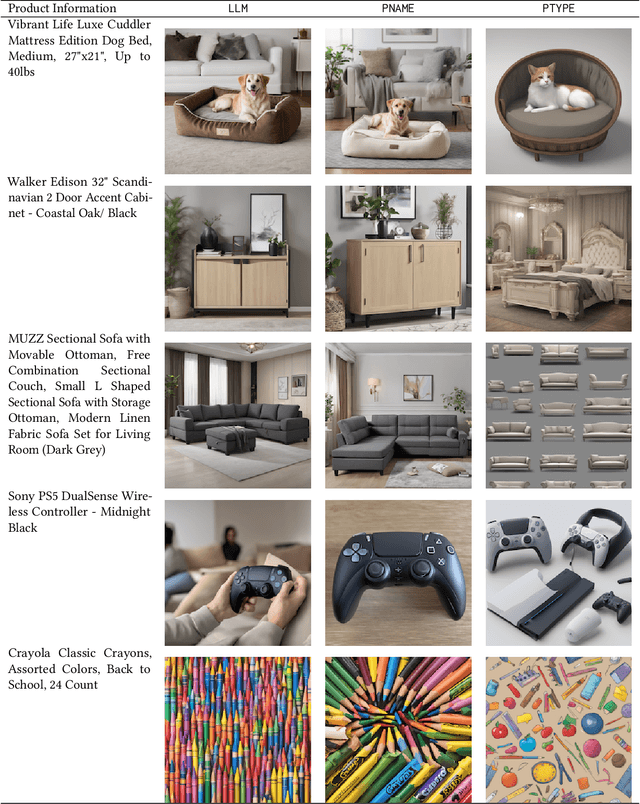

Abstract: Text-to-image models such as Stable Diffusion have opened a plethora of opportunities for generating art. Recent literature has surveyed the use of text-to-image models for enhancing the work of many creative artists. Many e-commerce platforms employ a manual process to generate banners, which is time-consuming and scales poorly. In this work, we demonstrate the use of text-to-image models for generating personalized web banners with dynamic content for online shoppers based on their interactions. The novelty of this approach lies in converting users' interaction data to meaningful prompts without human intervention. To this end, we utilize a large language model (LLM) to systematically extract a tuple of attributes from item meta-information. The attributes are then passed to a text-to-image model via prompt engineering to generate images for the banner. Our results show that the proposed approach can create high-quality personalized banners for users.
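
As a rough illustration of the second stage described above, where an attribute tuple is turned into a text-to-image prompt, consider the sketch below. The attribute fields (`product`, `color`, `style`), the prompt template, and the function name are assumptions made for this example; the abstract does not specify which attributes are extracted or how the prompt is worded, and the LLM extraction step itself is not shown.

```python
from typing import NamedTuple


class ItemAttributes(NamedTuple):
    """Hypothetical attribute tuple; the real fields are not given in the abstract."""
    product: str
    color: str
    style: str


def build_banner_prompt(attrs: ItemAttributes) -> str:
    """Assemble a text-to-image prompt from the attributes, skipping empty fields."""
    parts = [p for p in (attrs.color, attrs.style, attrs.product) if p]
    subject = " ".join(parts)
    return f"A web banner image featuring {subject}, clean background, high quality"


prompt = build_banner_prompt(ItemAttributes("running shoes", "red", "sporty"))
```

The resulting string would then be passed to whichever text-to-image model is in use.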

The temporal overfitting problem with applications in wind power curve modeling

Dec 02, 2020

Abstract: This paper is concerned with a nonparametric regression problem in which the independence assumption of the input variables and the residuals is no longer valid. Using existing model selection methods, like cross validation, the presence of temporal autocorrelation in the input variables and the error terms leads to model overfitting. This phenomenon is referred to as temporal overfitting, which causes loss of performance while predicting responses for a time domain different from the training time domain. We propose a new method to tackle the temporal overfitting problem. Our nonparametric model is partitioned into two parts: a time-invariant component and a time-varying component, each of which is modeled through a Gaussian process regression. The key to our inference is a thinning-based strategy, an idea borrowed from Markov chain Monte Carlo sampling, to estimate the two components, respectively. Our specific application in this paper targets power curve modeling in wind energy. In our numerical studies, we extensively compare our proposed method with both existing power curve models and available ideas for handling temporal overfitting. Our approach yields significant improvement in prediction both in and outside the time domain covered by the training data.
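
The thinning idea mentioned above can be sketched in a few lines. In MCMC, thinning keeps every k-th draw so that retained samples are less correlated; applied to a time series, the same idea spreads consecutive observations across separate subsets. The function name and the thinning number used here are illustrative assumptions, and the sketch does not reproduce how the paper fits its Gaussian process components on the thinned data.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def thin(series: Sequence[T], thinning_number: int) -> List[List[T]]:
    """Split a time-ordered series into `thinning_number` interleaved subsets.

    Subset i holds the points at positions i, i + k, i + 2k, ... so that
    neighbours within a subset are k time steps apart in the original series.
    """
    if thinning_number < 1:
        raise ValueError("thinning_number must be at least 1")
    return [list(series[i::thinning_number]) for i in range(thinning_number)]


# Ten time-ordered observations split into three thinned subsets.
subsets = thin(list(range(10)), 3)
```

Here `subsets` is `[[0, 3, 6, 9], [1, 4, 7], [2, 5, 8]]`: every observation is retained, but adjacent time points never share a subset.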
