Unsupervised Learning Facial Parameter Regressor for Action Unit Intensity Estimation via Differentiable Renderer

Paper and Code

Aug 20, 2020

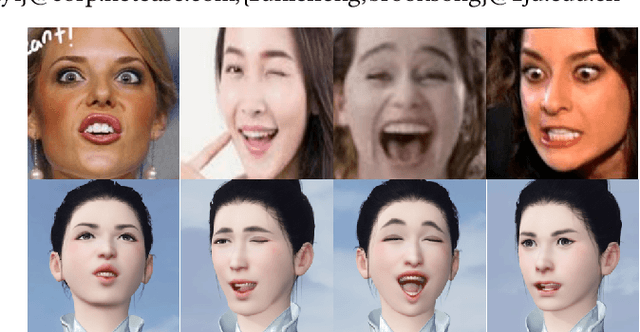

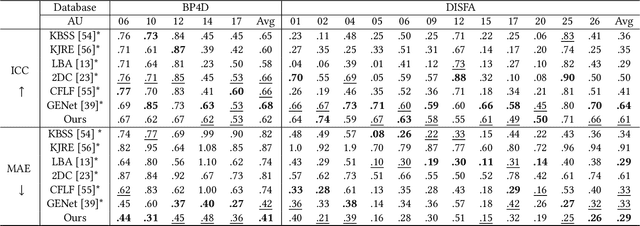

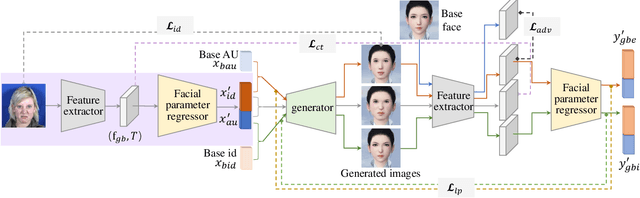

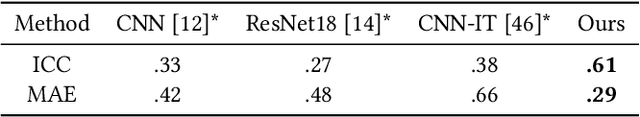

Facial action unit (AU) intensity is an index to describe all visually discernible facial movements. Most existing methods learn intensity estimator with limited AU data, while they lack generalization ability out of the dataset. In this paper, we present a framework to predict the facial parameters (including identity parameters and AU parameters) based on a bone-driven face model (BDFM) under different views. The proposed framework consists of a feature extractor, a generator, and a facial parameter regressor. The regressor can fit the physical meaning parameters of the BDFM from a single face image with the help of the generator, which maps the facial parameters to the game-face images as a differentiable renderer. Besides, identity loss, loopback loss, and adversarial loss can improve the regressive results. Quantitative evaluations are performed on two public databases BP4D and DISFA, which demonstrates that the proposed method can achieve comparable or better performance than the state-of-the-art methods. What's more, the qualitative results also demonstrate the validity of our method in the wild.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge