UNIQ: Uniform Noise Injection for Non-Uniform Quantization of Neural Networks

Paper and Code

Oct 02, 2018

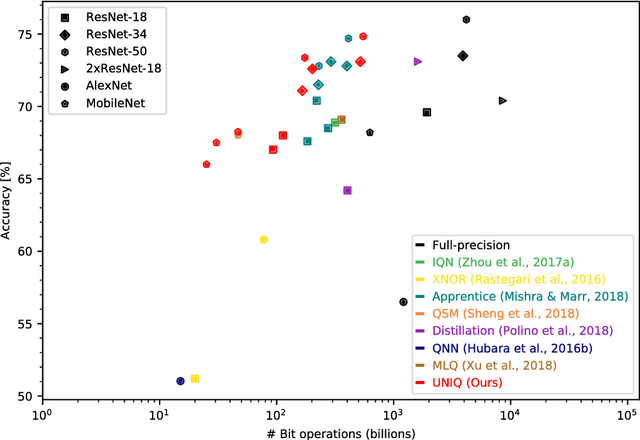

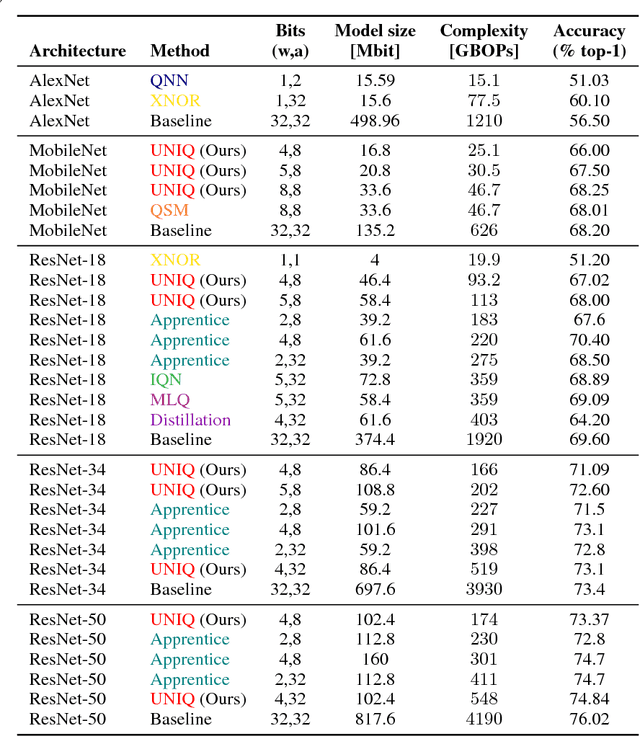

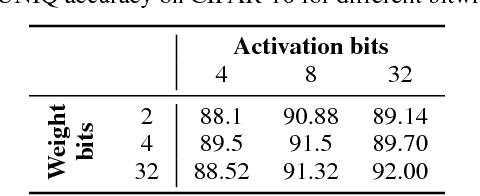

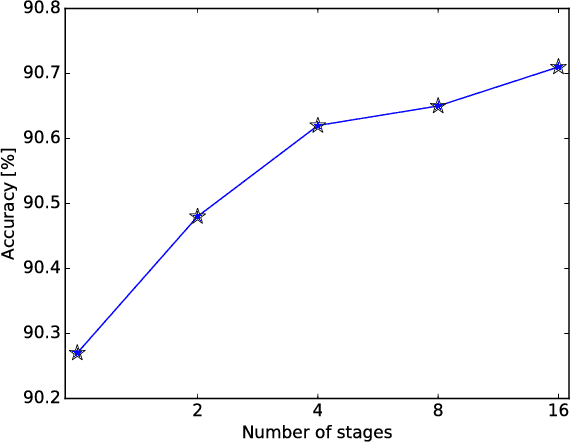

We present a novel method for neural network quantization that emulates a non-uniform $k$-quantile quantizer, which adapts to the distribution of the quantized parameters. Our approach provides a novel alternative to the existing uniform quantization techniques for neural networks. We suggest to compare the results as a function of the bit-operations (BOPS) performed, assuming a look-up table availability for the non-uniform case. In this setup, we show the advantages of our strategy in the low computational budget regime. While the proposed solution is harder to implement in hardware, we believe it sets a basis for new alternatives to neural networks quantization.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge