Unified Group Fairness on Federated Learning


Nov 09, 2021

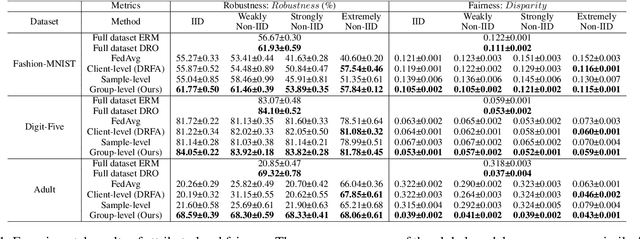

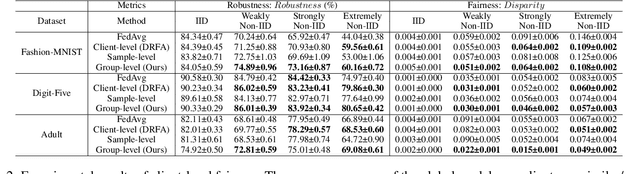

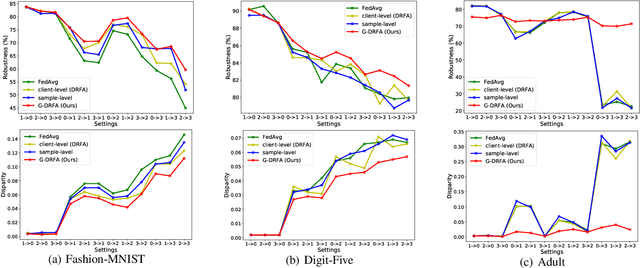

Federated learning (FL) has emerged as an important machine learning paradigm in which a global model is trained on the private data of distributed clients. However, because of distribution shift, most existing FL algorithms cannot guarantee fair performance across different clients or different groups of samples. Recent research focuses on achieving fairness among clients but ignores fairness across groups formed by sensitive attribute(s) (e.g., gender and/or race), which is important in real applications. To bridge this gap, we formulate the goal of unified group fairness in FL: learning a fair global model that performs similarly across groups. To achieve unified group fairness for arbitrary sensitive attribute(s), we propose a novel FL algorithm, Group Distributionally Robust Federated Averaging (G-DRFA), which mitigates distribution shift across groups and comes with a theoretical analysis of its convergence rate. Specifically, we treat the performance of the federated global model on each group as an objective and employ distributionally robust techniques to maximize the performance of the worst-performing group over an uncertainty set via group reweighting. We validate G-DRFA under various distribution shift settings, and the results show that it outperforms existing fair federated learning algorithms on unified group fairness.
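The group reweighting idea can be illustrated with a minimal sketch. It is not the G-DRFA algorithm itself: the abstract does not give the update rule, so this example assumes a generic exponentiated-gradient ascent step on group weights constrained to the probability simplex, a common choice in group distributionally robust optimization. The function names `update_group_weights` and `robust_loss`, the step size, and the per-group loss values are all hypothetical.

```python
import math


def update_group_weights(weights, group_losses, step_size=0.5):
    """One exponentiated-gradient ascent step on the probability simplex.

    Assumed update rule (not taken from the paper): groups with a higher
    loss get a larger multiplicative boost, then weights are renormalized.
    """
    scaled = [w * math.exp(step_size * loss)
              for w, loss in zip(weights, group_losses)]
    total = sum(scaled)
    return [s / total for s in scaled]


def robust_loss(weights, group_losses):
    """Weighted sum of per-group losses that the global model would minimize."""
    return sum(w * loss for w, loss in zip(weights, group_losses))


# Hypothetical per-group losses of a global model (e.g., three groups
# defined by a sensitive attribute). They are held fixed here; in real
# training they would change as the model is updated.
group_losses = [0.2, 0.9, 0.4]

# Start from uniform group weights.
weights = [1.0 / len(group_losses)] * len(group_losses)
uniform_loss = robust_loss(weights, group_losses)

for _ in range(10):
    weights = update_group_weights(weights, group_losses)

reweighted_loss = robust_loss(weights, group_losses)
worst_group = max(range(len(weights)), key=lambda g: weights[g])
```

With the losses held fixed, the weight mass shifts toward the worst-performing group, so the reweighted objective approaches that group's loss. In an actual federated setting this step would alternate with local model updates on the clients, so that the model is trained against the currently hardest group mixture.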
