Underreporting of errors in NLG output, and what to do about it


Aug 08, 2021

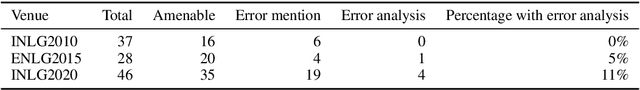

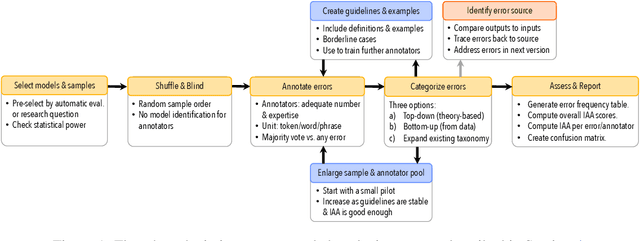

We observe severe under-reporting of the different kinds of errors that Natural Language Generation systems make. This is a problem, because mistakes are an important indicator of where systems still need to be improved. If authors only report overall performance metrics, the research community is left in the dark about the specific weaknesses exhibited by "state-of-the-art" research. In addition to quantifying the extent of error under-reporting, this position paper provides recommendations for error identification, analysis, and reporting.

* Prefinal version, accepted for publication in the Proceedings of the 14th International Conference on Natural Language Generation (INLG 2021, Aberdeen). Comments welcome.
