TreeSegNet: Adaptive Tree CNNs for Subdecimeter Aerial Image Segmentation


Aug 25, 2018

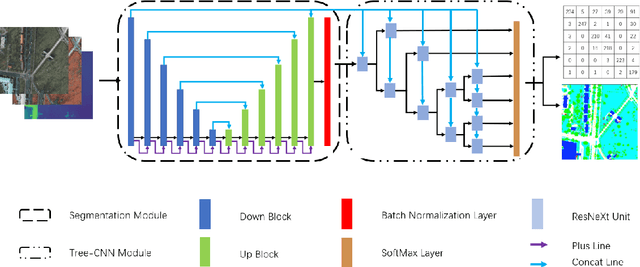

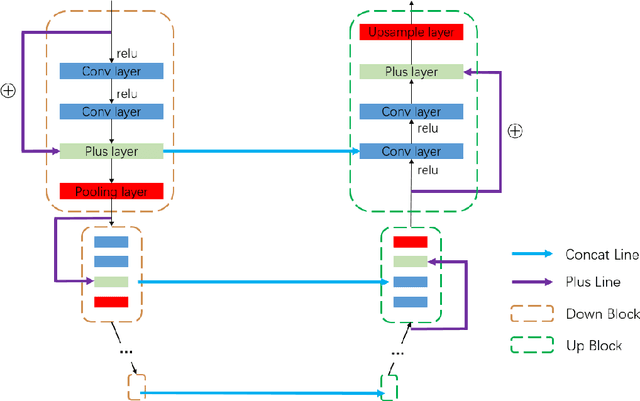

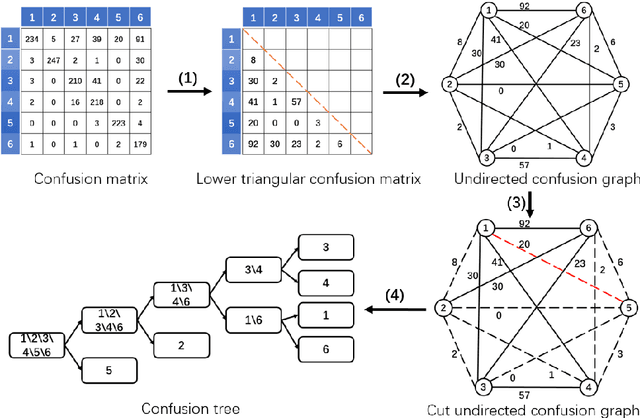

In subdecimeter aerial imagery segmentation, fine-grained semantic segmentation results are usually difficult to obtain because of complex remote sensing content and optical conditions. Recently, convolutional neural networks (CNNs) have shown outstanding performance on this task. Although many deep neural network structures and techniques have been applied to improve accuracy, few have focused on better differentiating easily confused classes. In this paper, we propose TreeSegNet, which adopts an adaptive network to increase the pixelwise classification rate. Specifically, based on the DeepUNet infrastructure, a Tree-CNN block in which each node is a ResNeXt unit is constructed adaptively according to the confusion matrix and the proposed TreeCutting algorithm. By passing feature maps through concatenating connections, the Tree-CNN block fuses multiscale features and learns the best weights for the model. In experiments on the ISPRS 2D semantic labeling Potsdam dataset, TreeSegNet obtains better results than other published state-of-the-art methods. Detailed comparison and analysis show that the improvement brought by the adaptive Tree-CNN block is significant.
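To make the idea of building a tree from a confusion matrix more concrete, here is a minimal Python sketch. It is not the TreeCutting algorithm, which the abstract does not specify. It substitutes a simple greedy agglomerative grouping that merges the most-confused classes first, so they end up under a common node. The function name `confusion_tree`, the symmetric confusion score, and the numbers in the example matrix are all assumptions made for illustration; only the class names are taken from the Potsdam label set.

```python
import numpy as np


def confusion_tree(conf, labels):
    """Greedily merge the most-confused groups into a nested-tuple binary tree.

    Hypothetical helper for illustration only; NOT the paper's TreeCutting algorithm.
    conf[i][j] counts pixels of true class i predicted as class j.
    """
    conf = np.asarray(conf, dtype=float)
    # Row-normalise: fraction of each true class assigned to each predicted class.
    rates = conf / conf.sum(axis=1, keepdims=True)
    # Symmetric score: how often classes i and j are mistaken for each other.
    sym = rates + rates.T

    # Each group is (subtree, list of member class indices).
    groups = [(label, [i]) for i, label in enumerate(labels)]
    while len(groups) > 1:
        best_score, best_pair = -1.0, None
        for a in range(len(groups)):
            for b in range(a + 1, len(groups)):
                # Average confusion between the members of the two groups.
                score = sym[np.ix_(groups[a][1], groups[b][1])].mean()
                if score > best_score:
                    best_score, best_pair = score, (a, b)
        a, b = best_pair
        merged = ((groups[a][0], groups[b][0]), groups[a][1] + groups[b][1])
        groups = [g for k, g in enumerate(groups) if k not in (a, b)] + [merged]
    return groups[0][0]


# Made-up confusion counts for four Potsdam-style classes (illustrative only).
labels = ["impervious", "building", "low_vegetation", "tree"]
example_conf = [
    [90, 2, 1, 7],
    [3, 95, 1, 1],
    [1, 1, 80, 18],
    [2, 1, 15, 82],
]
tree = confusion_tree(example_conf, labels)
print(tree)
```

In this toy matrix, `low_vegetation` and `tree` are the most frequently confused pair, so they are merged first and share the deepest node. In a Tree-CNN-style block, each node of such a tree could then host its own unit dedicated to separating the classes beneath it.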
