Transfer Learning in General Lensless Imaging through Scattering Media

Paper and Code

Dec 28, 2019

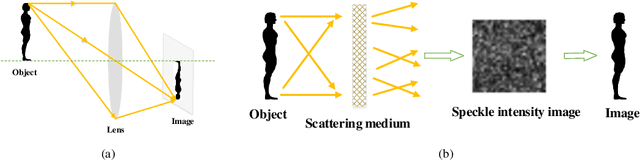

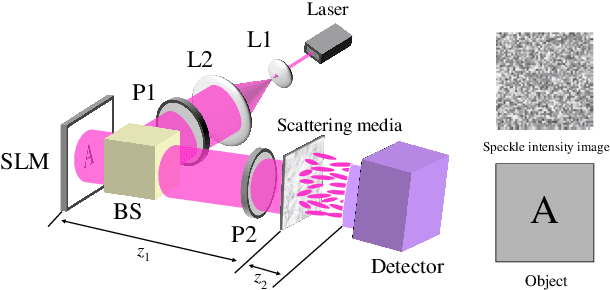

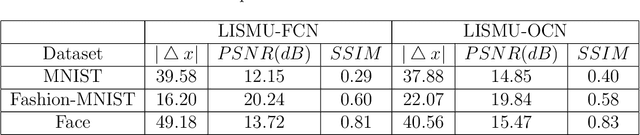

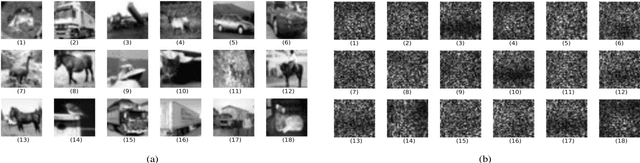

Recently, deep neural networks (DNNs) have been successfully introduced to the field of lensless imaging through scattering media. By solving an inverse problem in computational imaging, DNNs can overcome several shortcomings of conventional methods for lensless imaging through scattering media, namely high cost, poor quality, complex control, and poor robustness to interference. However, training requires collecting a large number of samples from various datasets, and a DNN trained on one dataset generally performs poorly when recovering images from another dataset. The underlying reason is that lensless imaging through scattering media is a high-dimensional regression problem for which an analytical solution is difficult to obtain. In this work, transfer learning is proposed to address this issue. Our main idea is to train a DNN on a relatively complex dataset using a large number of training samples and then fine-tune the last few layers using very few samples from other datasets. Because fine-tuning avoids the thousands of samples required to train from scratch, transfer learning alleviates the problem of costly data acquisition. Specifically, considering the difference in sample sizes and the similarity among datasets, we propose two DNN architectures, namely LISMU-FCN and LISMU-OCN, and a balance loss function designed to balance smoothness and sharpness. LISMU-FCN, with far fewer parameters, can achieve imaging across similar datasets, while LISMU-OCN can achieve imaging across significantly different datasets. In addition, we establish a set of simulation algorithms that closely approximate the real experiment, which is of practical value for research on lensless scattering imaging. In summary, this work provides a new solution for lensless imaging through scattering media using transfer learning in DNNs.
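The fine-tuning idea described in the abstract (keep the early layers from the source dataset fixed and update only the last layers on a handful of target samples) can be illustrated with a minimal sketch. This is not the LISMU-FCN or LISMU-OCN architecture and it does not use the paper's balance loss: the toy two-layer model, the weights, the squared-error loss, and every name below (`FROZEN`, `features`, `fine_tune_head`, and so on) are assumptions made purely for illustration.

```python
# Toy illustration of fine-tuning only the last layer (hypothetical model,
# not the paper's networks or loss function).

# "Early layers", assumed to be pretrained on a source dataset and kept frozen.
FROZEN = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# "Last layer" as it came out of pretraining (source task: y = x0 + x1).
HEAD_PRETRAINED = [1.0, 1.0, 0.0]


def features(x, w_frozen):
    """Frozen feature extractor: a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_frozen]


def predict(x, w_frozen, w_head):
    """Full model: frozen features followed by a linear head."""
    h = features(x, w_frozen)
    return sum(w * hi for w, hi in zip(w_head, h))


def mse(samples, w_frozen, w_head):
    """Mean squared error of the model on (input, target) pairs."""
    errors = [(predict(x, w_frozen, w_head) - y) ** 2 for x, y in samples]
    return sum(errors) / len(errors)


def fine_tune_head(samples, w_frozen, w_head, lr=0.1, epochs=500):
    """Per-sample gradient descent on squared error; only the head is updated."""
    w_head = list(w_head)  # copy so the pretrained head is left untouched
    for _ in range(epochs):
        for x, y in samples:
            h = features(x, w_frozen)
            err = sum(w * hi for w, hi in zip(w_head, h)) - y
            w_head = [w - lr * err * hi for w, hi in zip(w_head, h)]
    return w_head


# A very small target dataset (target task: y = 2*x0 - x1).
inputs = [(0.1, 0.9), (0.8, 0.2), (0.5, 0.5), (0.3, 0.7), (0.9, 0.4)]
target_samples = [(x, 2.0 * x[0] - x[1]) for x in inputs]

frozen_before = [row[:] for row in FROZEN]
error_before = mse(target_samples, FROZEN, HEAD_PRETRAINED)
head_tuned = fine_tune_head(target_samples, FROZEN, HEAD_PRETRAINED)
error_after = mse(target_samples, FROZEN, head_tuned)
```

In this sketch only three head weights are fitted from five target samples, while the feature extractor is reused unchanged. That mirrors, in a heavily simplified form, why fine-tuning the last layers needs far fewer samples than training the whole network from scratch.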
