Towards Robust RGB-D Human Mesh Recovery


Nov 18, 2019

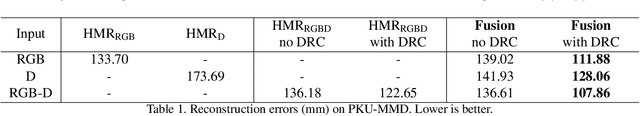

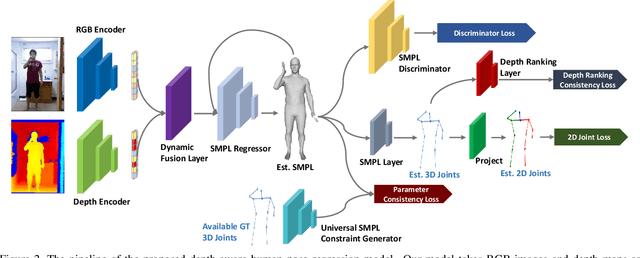

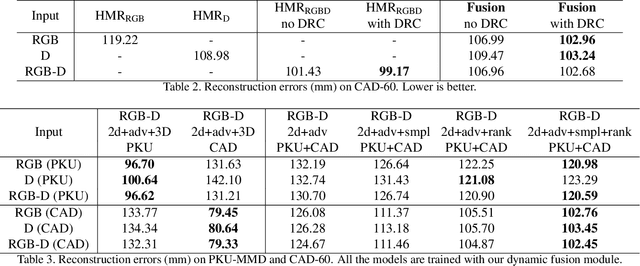

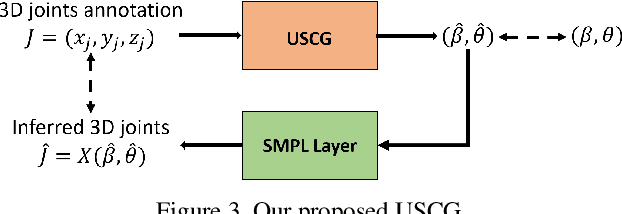

We consider the problem of human pose estimation. While much recent work has focused on the RGB domain, these techniques are inherently under-constrained, since many 3D configurations can explain the same 2D projection. To address this ambiguity, we propose a new method that uses RGB-D data to estimate a parametric human mesh model. Our key innovations are (a) a new dynamic data fusion module that facilitates learning from a combination of RGB-only and RGB-D datasets, (b) a new constraint generator module that provides SMPL supervisory signals when explicit SMPL annotations are not available, and (c) a new depth ranking learning objective, all of which enable principled model training with RGB-D data. We conduct extensive experiments on a variety of RGB-D datasets to demonstrate the efficacy of the method.
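The ambiguity that motivates the use of depth can be illustrated with a minimal sketch. It is not taken from the paper: the pinhole camera model, the `project` and `scale` helpers, the unit focal length, and the example joint coordinates are all assumptions chosen for illustration. Under perspective projection, any two 3D points on the same camera ray land on the same 2D location, so an RGB-only observation cannot tell them apart, whereas a depth reading can.

```python
def project(point, focal=1.0):
    """Pinhole projection of a camera-space point (x, y, z) to 2D.

    Hypothetical helper: the focal length and the absence of a principal
    point offset are simplifying assumptions, not the paper's camera model.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (focal * x / z, focal * y / z)


def scale(point, s):
    """Move a point along its camera ray by scaling all coordinates."""
    return tuple(s * c for c in point)


# Two candidate 3D positions for the same joint (illustrative values, metres).
wrist_near = (0.5, -0.25, 2.0)
wrist_far = scale(wrist_near, 2.0)  # same ray, twice as far from the camera

uv_near = project(wrist_near)
uv_far = project(wrist_far)

# Both candidates project to the same 2D location ...
same_pixel = uv_near == uv_far

# ... but their depth values differ, which is what a depth channel observes.
depth_gap = wrist_far[2] - wrist_near[2]

print("2D projection (near):", uv_near)
print("2D projection (far): ", uv_far)
print("identical in 2D:", same_pixel, "| depth difference:", depth_gap)
```

In this toy setting the two hypotheses are indistinguishable from the 2D projection alone, while their depths differ by two metres. That gap is the kind of information an RGB-D input makes available.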
