Three-dimensional propagation and time-reversal of fluorescence images


Jan 31, 2019

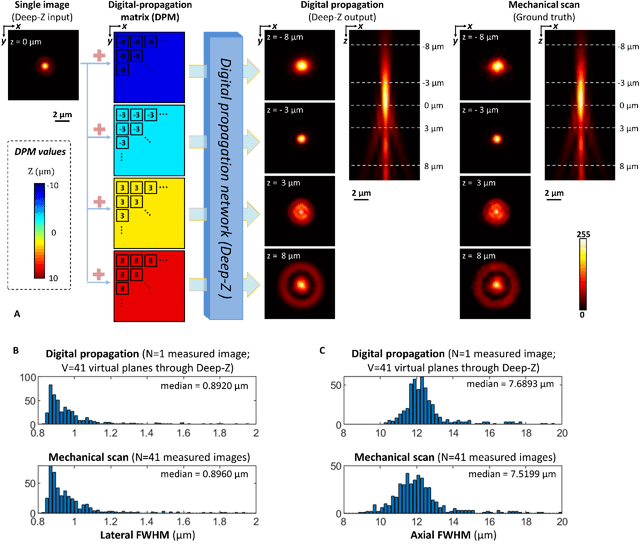

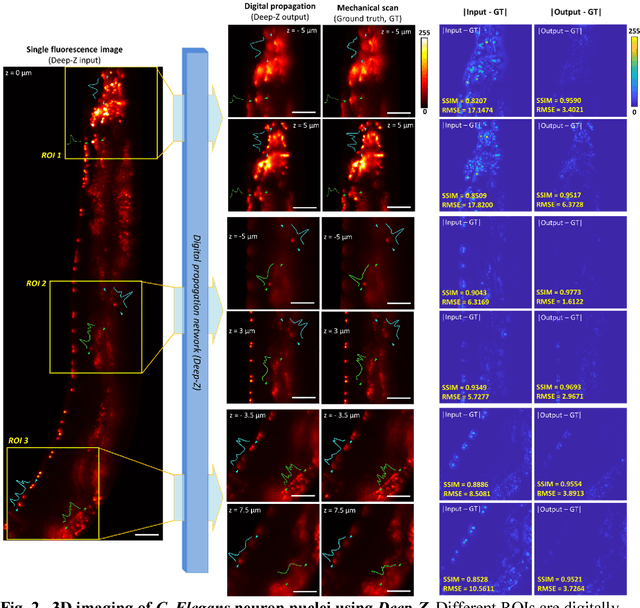

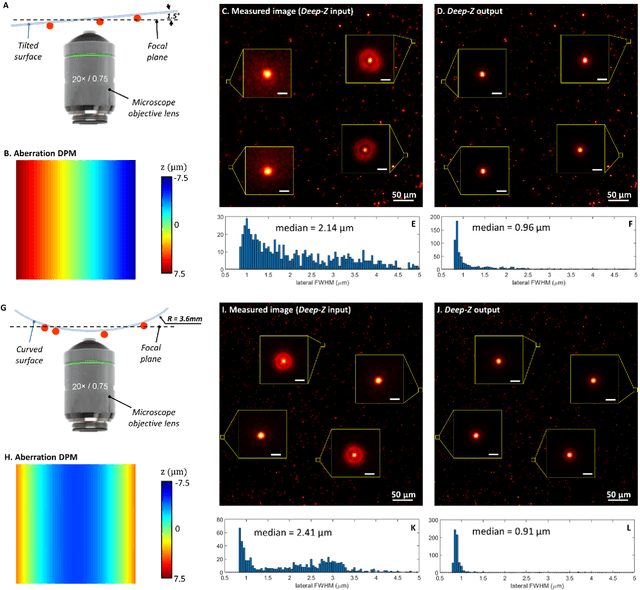

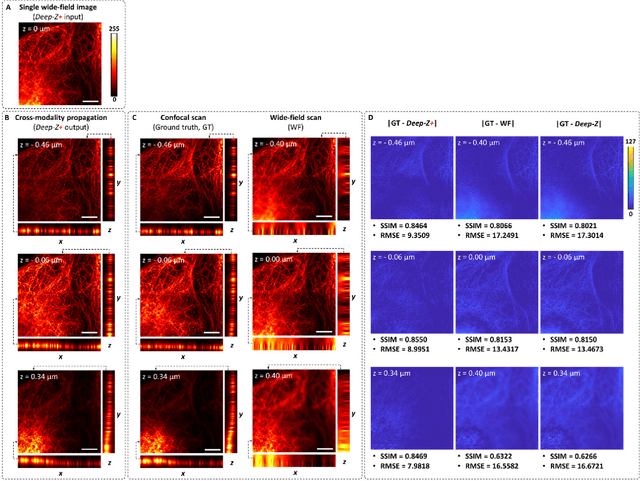

Unlike holography, fluorescence microscopy lacks an image propagation and time-reversal framework, which necessitates scanning of fluorescent objects to obtain 3D images. We demonstrate that a neural network can inherently learn the physical laws governing fluorescence wave propagation and time-reversal to enable 3D imaging of fluorescent samples using a single 2D image, without mechanical scanning, additional hardware, or a trade-off of resolution or speed. Using this data-driven framework, we increased the depth-of-field of a microscope by 20-fold, imaged Caenorhabditis elegans neurons in 3D using a single fluorescence image, and digitally propagated fluorescence images onto user-defined 3D surfaces, also correcting various aberrations. Furthermore, this learning-based approach cross-connects different imaging modalities, permitting 3D propagation of a wide-field fluorescence image to match confocal microscopy images acquired at different sample planes.
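The abstract does not say how a user-defined 3D surface is handed to the network. One plausible encoding, offered here purely as an assumption, is a per-pixel map of target axial distances that is paired with the 2D fluorescence image as a second input channel. The minimal sketch below builds such a map for a tilted plane and pairs it with an image. The function names (`tilted_plane_surface`, `build_network_input`), the micrometre units, and the two-channel layout are all hypothetical, and no trained network is included.

```python
from typing import List, Tuple

Image = List[List[float]]


def tilted_plane_surface(
    height: int,
    width: int,
    z_center_um: float,
    tilt_x_um_per_px: float,
    tilt_y_um_per_px: float,
) -> Image:
    """Return a per-pixel axial distance map (hypothetical units: micrometres).

    The surface is a plane passing through z_center_um at the image centre,
    with a constant slope along each image axis.
    """
    cy, cx = (height - 1) / 2, (width - 1) / 2
    return [
        [
            z_center_um
            + tilt_y_um_per_px * (r - cy)
            + tilt_x_um_per_px * (c - cx)
            for c in range(width)
        ]
        for r in range(height)
    ]


def build_network_input(
    image: Image, surface: Image
) -> List[List[Tuple[float, float]]]:
    """Pair each pixel intensity with its target axial distance.

    Assumed layout: (H, W, 2), i.e. channel 0 is the fluorescence intensity
    and channel 1 is the distance to propagate that pixel to.
    """
    if len(image) != len(surface) or any(
        len(img_row) != len(surf_row) for img_row, surf_row in zip(image, surface)
    ):
        raise ValueError("image and surface must have the same shape")
    return [
        [(i, z) for i, z in zip(img_row, surf_row)]
        for img_row, surf_row in zip(image, surface)
    ]
```

Under this assumed encoding, a surface with zero tilt would ask for the whole image to be refocused to a single depth, while a non-zero tilt or an arbitrary map would describe a user-defined surface like those mentioned in the abstract.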
