The Shallow End: Empowering Shallower Deep-Convolutional Networks through Auxiliary Outputs

Paper and Code

Apr 23, 2017

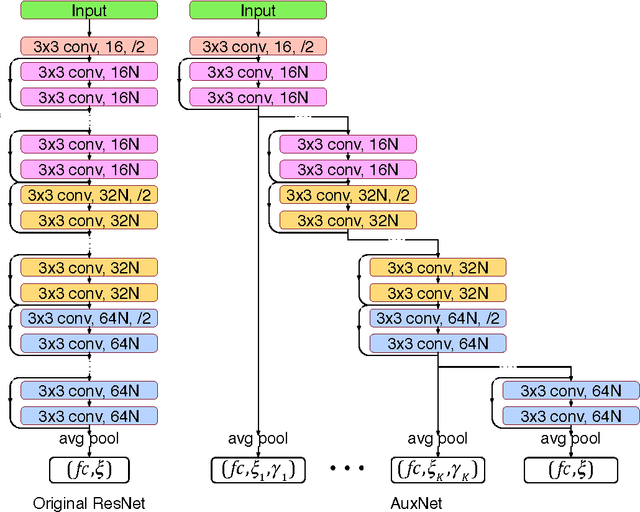

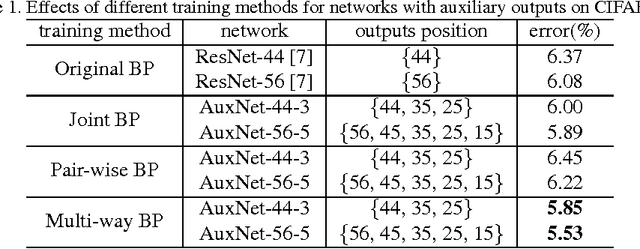

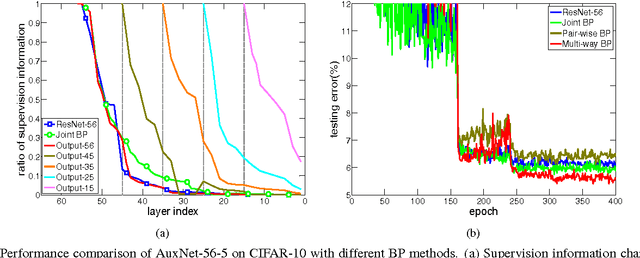

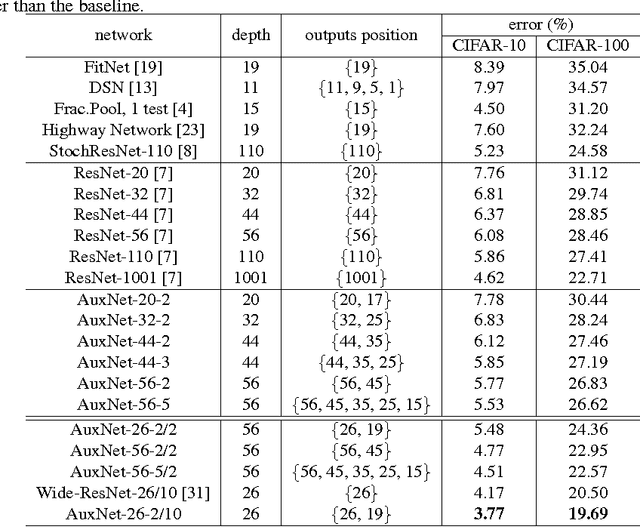

Depth is one of the key factors behind the success of convolutional neural networks (CNNs), and the vanishing gradient problem has been largely addressed by architectures such as ResNet. However, when a network becomes very deep, the supervision information from the loss function still vanishes along the long backpropagation path, especially for the shallow layers. Intermediate layers therefore receive less supervision information, which makes the model redundant and prone to over-fitting. To address this, we propose a model called AuxNet, which introduces auxiliary outputs at intermediate layers. Unlike existing approaches, we propose a multi-path training method that propagates not only gradients but also sufficient supervision information from multiple auxiliary outputs. The proposed AuxNet with the multi-path training method gives rise to more compact networks that outperform their very deep equivalents (i.e. ResNet). For example, AuxNet with 44 layers performs better than the ResNet equivalent with 110 layers on several benchmark data sets, namely CIFAR-10, CIFAR-100 and SVHN.
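To make the idea of auxiliary outputs concrete, the following is a minimal sketch of how a training loss might combine the final output with outputs attached to intermediate layers. It assumes a plain weighted sum of cross-entropy terms, as in generic deep supervision; the abstract does not spell out the multi-path training method, so this is not the AuxNet procedure itself. The function names and the `aux_weight` parameter are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy of a single example, computed from raw logits."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def combined_loss(final_logits, aux_logits_list, label, aux_weight=0.3):
    """Loss of the final output plus weighted losses of the auxiliary outputs.

    aux_weight is a hypothetical hyperparameter (an assumption, not a value
    from the paper) that scales each auxiliary term.
    """
    loss = softmax_cross_entropy(final_logits, label)
    for aux_logits in aux_logits_list:
        # Each auxiliary output is scored against the same label, so the
        # intermediate layers feeding it receive a direct supervision signal.
        loss += aux_weight * softmax_cross_entropy(aux_logits, label)
    return loss


if __name__ == "__main__":
    final = [2.0, 0.5, -1.0]          # logits of the final classifier
    aux = [[1.0, 0.8, 0.1],           # logits of a shallow auxiliary output
           [1.5, 0.2, -0.5]]          # logits of a deeper auxiliary output
    print(combined_loss(final, aux, label=0))
```

In this sketch, setting `aux_weight` to zero recovers ordinary single-output training, which makes the contribution of the auxiliary terms easy to isolate.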
