Spikingformer: Spike-driven Residual Learning for Transformer-based Spiking Neural Network

Apr 24, 2023

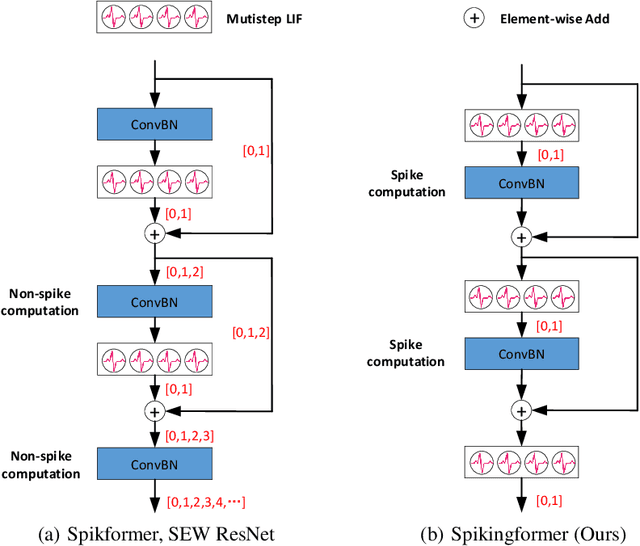

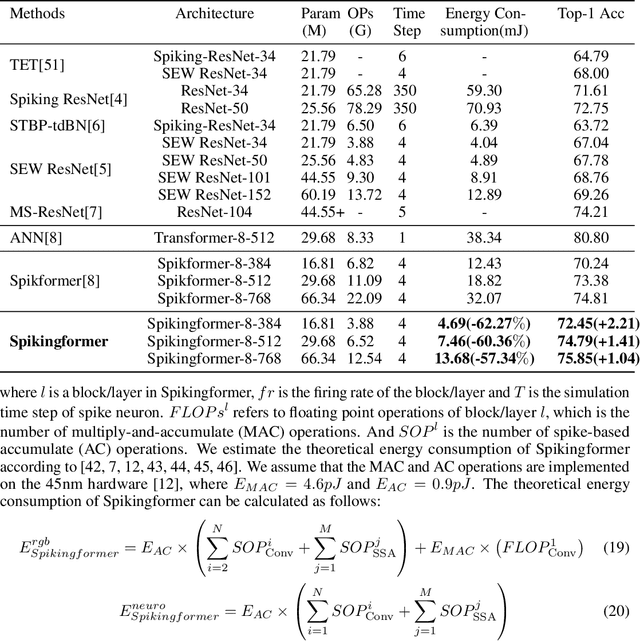

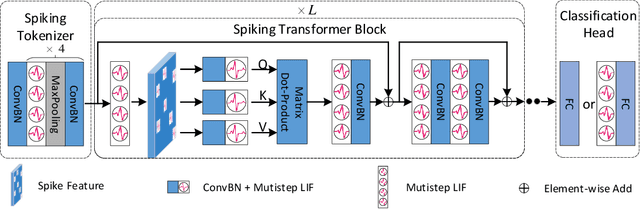

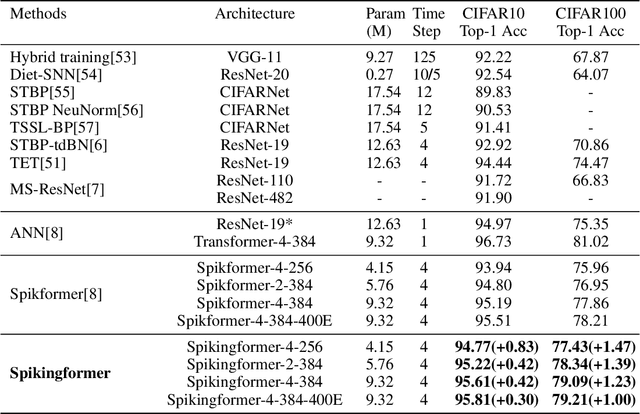

Spiking neural networks (SNNs) offer a promising energy-efficient alternative to artificial neural networks, due to their event-driven spiking computation. However, state-of-the-art deep SNNs (including Spikformer and SEW ResNet) suffer from non-spike computations (integer-float multiplications) caused by the structure of their residual connections. These non-spike computations increase SNNs' power consumption and make them unsuitable for deployment on mainstream neuromorphic hardware, which only supports spike operations. In this paper, we propose a hardware-friendly spike-driven residual learning architecture for SNNs to avoid non-spike computations. Based on this residual design, we develop Spikingformer, a pure transformer-based spiking neural network. We evaluate Spikingformer on the ImageNet, CIFAR10, CIFAR100, CIFAR10-DVS and DVS128 Gesture datasets, and demonstrate that Spikingformer, as a novel advanced backbone, outperforms the state-of-the-art among directly trained pure SNNs (74.79$\%$ top-1 accuracy on ImageNet, +1.41$\%$ compared with Spikformer). Furthermore, our experiments verify that Spikingformer effectively avoids non-spike computations and reduces energy consumption by 60.34$\%$ compared with Spikformer on ImageNet. To the best of our knowledge, this is the first time that a pure event-driven transformer-based SNN has been developed.
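The abstract does not spell out how a residual connection produces integer-float multiplications, so the following is only a toy sketch under stated assumptions, not the authors' implementation. It assumes that a residual which adds two spike tensors can yield values greater than 1, which a subsequent weight layer would then have to multiply by floating-point weights, and that applying the spiking nonlinearity after the addition (before any weights) keeps the input binary. The function names, the threshold of 1.0, and the input values are all hypothetical.

```python
def heaviside(values, threshold=1.0):
    """Emit a spike (1) wherever the input reaches the threshold, else 0."""
    return [1 if v >= threshold else 0 for v in values]


def is_binary(values):
    """True if every entry is a valid spike value (0 or 1)."""
    return all(v in (0, 1) for v in values)


def add_after_spiking(branch_input, shortcut_input):
    # Assumed problematic pattern: both paths spike first, then are summed.
    # The sum of two spikes can be 2, which is no longer a spike.
    branch_spikes = heaviside(branch_input)
    shortcut_spikes = heaviside(shortcut_input)
    return [a + b for a, b in zip(branch_spikes, shortcut_spikes)]


def spike_after_adding(branch_input, shortcut_input):
    # Assumed spike-driven pattern: sum the real-valued paths, then spike,
    # so whatever weight layer comes next only ever sees 0 or 1.
    summed = [a + b for a, b in zip(branch_input, shortcut_input)]
    return heaviside(summed)


# Hypothetical real-valued activations on the two residual paths.
branch = [1.5, 0.2, 1.1, 0.0]
shortcut = [1.2, 0.3, 0.1, 1.4]

non_spike = add_after_spiking(branch, shortcut)
spike_driven = spike_after_adding(branch, shortcut)

print(non_spike, is_binary(non_spike))
print(spike_driven, is_binary(spike_driven))
```

With binary inputs, a weight layer reduces to accumulating the weights of the active inputs, which is the kind of operation the abstract says neuromorphic hardware supports; an input of 2 would instead require a genuine multiplication.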
