Semantic Segmentation of 3D LiDAR Data in Dynamic Scene Using Semi-supervised Learning


Sep 03, 2018

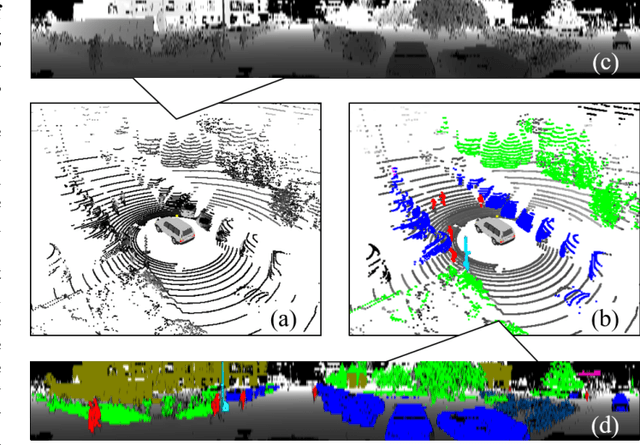

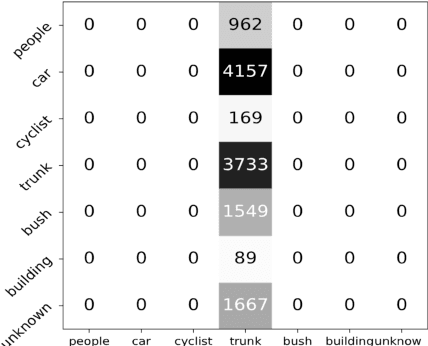

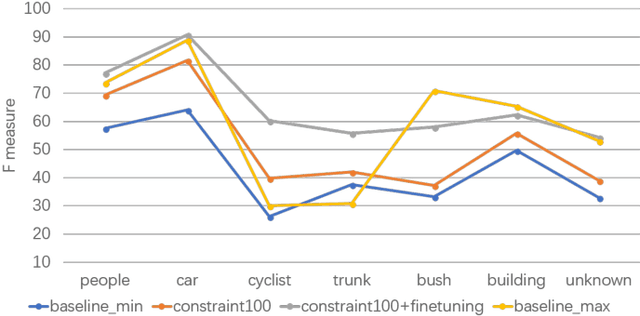

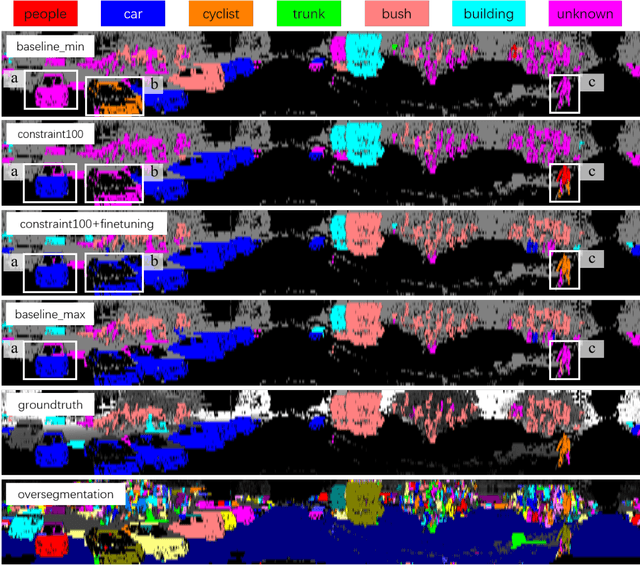

This work studies the semantic segmentation of 3D LiDAR data in dynamic scenes for autonomous driving applications. A semantic segmentation system for 3D LiDAR data is developed, comprising range image segmentation, sample generation, inter-frame data association, track-level annotation and semi-supervised learning. To reduce the need for large numbers of fine annotations, a CNN-based classifier is trained on both supervised samples with manually labeled object classes and pairwise constraints, where a data sample is composed of a segment as the foreground and neighborhood points as the background. A special loss function is designed to account for both annotations and constraints, where the constraint data are encouraged to be assigned to the same semantic class. A dataset containing 1838 frames of LiDAR data, 39934 pairwise constraints and 57927 human annotations is developed. The performance of the method is examined extensively. Qualitative and quantitative experiments show that combining a few annotations with a large amount of constraint data significantly enhances effectiveness and scene adaptability, resulting in greater than 10% improvement
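The abstract does not give the form of the loss, so the following is only a minimal sketch of how a loss combining annotations and pairwise constraints might look, not the authors' formulation. It assumes a cross-entropy term on the labeled samples plus a constraint term equal to the negative log-probability that both samples of a pair receive the same class. The function names, the `weight` parameter and the choice of constraint term are all hypothetical.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the class axis.
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def semi_supervised_loss(logits_labeled, labels, logits_a, logits_b,
                         weight=1.0, eps=1e-12):
    """Hypothetical combined loss (an assumption, not the paper's definition).

    logits_labeled: (N, C) classifier outputs for manually labeled samples
    labels:         (N,)   integer class labels
    logits_a/b:     (M, C) outputs for the two samples of each pairwise constraint
    weight:         assumed trade-off between the two terms
    """
    # Supervised term: mean cross-entropy on the annotated samples.
    p = softmax(logits_labeled)
    supervised = -np.log(p[np.arange(len(labels)), labels] + eps).mean()

    # Constraint term: probability that both samples of a pair fall in the
    # same class, summed over classes; penalized through its negative log.
    pa, pb = softmax(logits_a), softmax(logits_b)
    same_class = (pa * pb).sum(axis=1)
    constraint = -np.log(same_class + eps).mean()

    return supervised + weight * constraint
```

Under this sketch, a pair whose predictions agree contributes a small constraint term, while a pair predicted as different classes is penalized even though neither sample carries a manual label.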
