Seeing the Unseen: Visual Common Sense for Semantic Placement


Jan 15, 2024

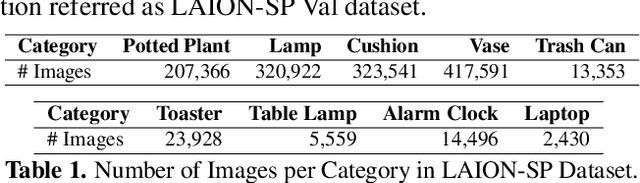

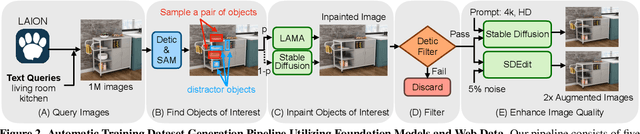

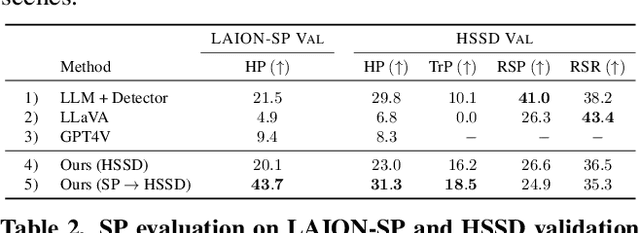

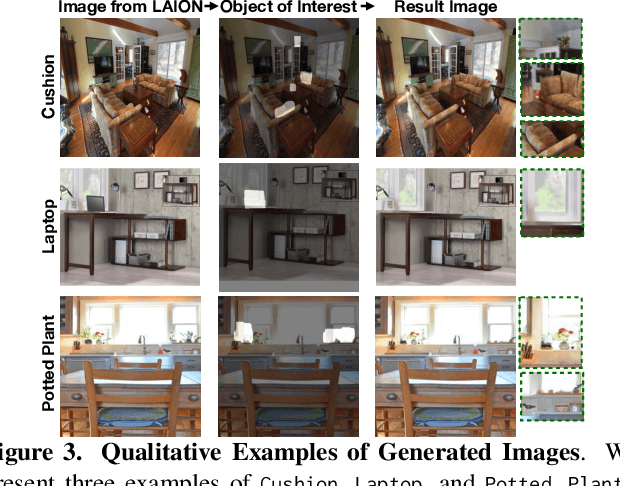

Computer vision tasks typically involve describing what is present in an image (e.g. classification, detection, segmentation, and captioning). We study a visual common sense task that requires understanding what is not present. Specifically, given an image (e.g. of a living room) and the name of an object ("cushion"), a vision system is asked to predict semantically meaningful regions (masks or bounding boxes) in the image where that object could be placed or is likely to be placed by humans (e.g. on the sofa). We call this task Semantic Placement (SP) and believe that such common-sense visual understanding is critical for assistive robots (tidying a house) and AR devices (automatically rendering an object in the user's space). Studying the invisible is hard. Datasets for image description are typically constructed by curating relevant images and asking humans to annotate the contents of the image; neither of those two steps is straightforward for objects not present in the image. We overcome this challenge by operating in the opposite direction: we start with an image of an object in context from the web, and then remove that object from the image via inpainting. This automated pipeline converts unstructured web data into a dataset comprising pairs of images with and without the object. Using this pipeline, we collect a novel dataset with ${\sim}1.3$M images across $9$ object categories, and train an SP prediction model called CLIP-UNet. CLIP-UNet outperforms existing VLMs and baselines that combine semantic priors with object detectors on real-world and simulated images. In our user studies, we find that the SP masks predicted by CLIP-UNet are favored $43.7\%$ and $31.3\%$ of the time over the $4$ SP baselines on real and simulated images, respectively. In addition, we demonstrate that leveraging SP mask predictions from CLIP-UNet enables downstream applications such as building tidying robots for indoor environments.
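The data construction idea described above (take an image that contains the object, erase the object, and keep its former location as the supervision signal) can be sketched in a few lines. This is only an illustrative toy under stated assumptions, not the authors' pipeline: the names `PlacementExample`, `box_to_mask`, `naive_inpaint`, and `make_example` are hypothetical, the image is a small grayscale grid, the object location is assumed to be given as a box, and `naive_inpaint` is a crude mean-fill stand-in for a real inpainting model.

```python
from dataclasses import dataclass
from typing import List, Tuple

Image = List[List[float]]        # toy grayscale image: rows of pixel values
Mask = List[List[int]]           # 1 where the object was, 0 elsewhere
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), end-exclusive


@dataclass
class PlacementExample:
    """One training pair (hypothetical structure, for illustration only)."""
    category: str         # object name used as the query, e.g. "cushion"
    image_without: Image  # model input: the scene with the object removed
    target_mask: Mask     # supervision: region the object originally occupied


def box_to_mask(height: int, width: int, box: Box) -> Mask:
    """Rasterize an object box into a binary mask."""
    top, left, bottom, right = box
    return [
        [1 if top <= r < bottom and left <= c < right else 0 for c in range(width)]
        for r in range(height)
    ]


def naive_inpaint(image: Image, mask: Mask) -> Image:
    """Stand-in for a real inpainting model (assumption): fill the masked
    pixels with the mean of the unmasked pixels."""
    kept = [
        image[r][c]
        for r in range(len(image))
        for c in range(len(image[0]))
        if not mask[r][c]
    ]
    fill = sum(kept) / len(kept) if kept else 0.0
    return [
        [fill if mask[r][c] else image[r][c] for c in range(len(image[0]))]
        for r in range(len(image))
    ]


def make_example(image: Image, category: str, box: Box) -> PlacementExample:
    """Turn an image that contains the object into a (without-object, mask) pair."""
    mask = box_to_mask(len(image), len(image[0]), box)
    return PlacementExample(category, naive_inpaint(image, mask), mask)


# Toy usage: a 4x4 "room" of value 50 with a bright 1x2 "cushion" of value 200.
image = [[50] * 4 for _ in range(4)]
image[1][1] = image[1][2] = 200
example = make_example(image, "cushion", (1, 1, 2, 3))
```

In this framing, a placement model would receive `image_without` together with `category` and be trained to predict `target_mask`. The original image with the object present is needed only to derive the mask.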
