SecureBoost: A Lossless Federated Learning Framework

Paper and Code

Jan 25, 2019

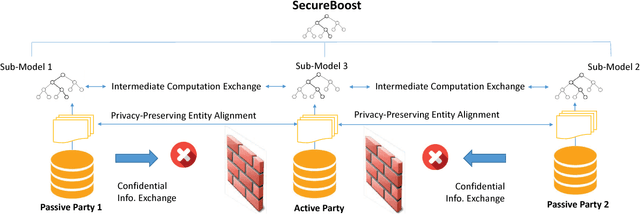

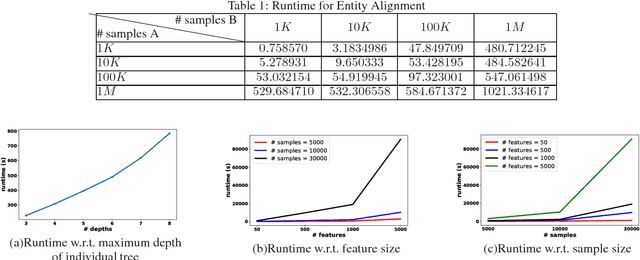

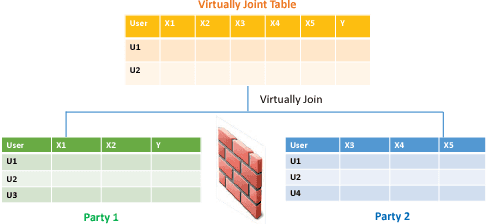

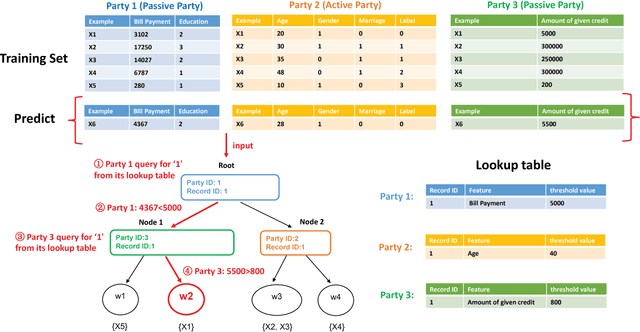

The protection of user privacy is an important concern in machine learning, as evidenced by the rollout of the General Data Protection Regulation (GDPR) in the European Union (EU) in May 2018. The GDPR is designed to give users more control over their personal data, which motivates us to explore machine learning frameworks that share data without violating user privacy. To meet this goal, in this paper, we propose a novel lossless privacy-preserving tree-boosting system known as SecureBoost in the setting of federated learning. This federated-learning system allows a learning process to be jointly conducted over multiple parties with partially common user samples but different feature sets, which corresponds to a vertically partitioned virtual data set. An advantage of SecureBoost is that it provides the same level of accuracy as the non-privacy-preserving approach while revealing no information about any private data provider. We theoretically prove that the SecureBoost framework is as accurate as other non-federated gradient tree-boosting algorithms that bring the data into one place. In addition, along with a proof of security, we discuss what would be required to make the protocols completely secure.
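The vertically partitioned setting and the "lossless" claim can be illustrated with a small sketch. It is not the paper's protocol: no encryption is performed, the party data and all function names are hypothetical, and the split score is the standard second-order gain used in gradient tree boosting. It assumes the party holding the labels shares per-sample gradients (which a real system would encrypt) and that the other party returns only aggregated sums for each candidate threshold on its own feature. Under these assumptions the split chosen is the same one found after bringing the data into one place.

```python
import math

# Hypothetical toy data. Party A holds the labels and one feature;
# party B holds a different feature for a partially overlapping set of users.
party_a = {"u1": {"age": 23, "label": 0}, "u2": {"age": 35, "label": 1},
           "u3": {"age": 47, "label": 1}, "u4": {"age": 52, "label": 0},
           "u5": {"age": 61, "label": 1}}
party_b = {"u2": {"income": 40}, "u3": {"income": 85}, "u4": {"income": 30},
           "u5": {"income": 90}, "u6": {"income": 55}}

# Only users known to both parties take part in training.
ids = sorted(set(party_a) & set(party_b))

def grad_hess(label, raw_score=0.0):
    """First and second derivative of the logistic loss at raw_score."""
    p = 1.0 / (1.0 + math.exp(-raw_score))
    return p - label, p * (1.0 - p)

def gain(g_left, h_left, g_all, h_all, lam=1.0):
    """Standard second-order split gain with L2 regularisation lam."""
    g_right, h_right = g_all - g_left, h_all - h_left
    return 0.5 * (g_left ** 2 / (h_left + lam)
                  + g_right ** 2 / (h_right + lam)
                  - g_all ** 2 / (h_all + lam))

# Party A computes gradients from its labels. In a real protocol these
# would be sent to party B in encrypted form; here they are plaintext.
g, h = {}, {}
for i in ids:
    g[i], h[i] = grad_hess(party_a[i]["label"])

def party_b_bucket_sums(g, h):
    """Party B: sums of g and h left of each threshold on its own feature."""
    thresholds = sorted({party_b[i]["income"] for i in ids})[:-1]
    sums = []
    for t in thresholds:
        left = [i for i in ids if party_b[i]["income"] <= t]
        sums.append((t, sum(g[i] for i in left), sum(h[i] for i in left)))
    return sums

def pick_best(sums, g_all, h_all):
    """Party A: score each candidate using only the aggregated sums."""
    best_gain, best_t = -math.inf, None
    for t, g_left, h_left in sums:
        score = gain(g_left, h_left, g_all, h_all)
        if score > best_gain:
            best_gain, best_t = score, t
    return best_gain, best_t

g_all, h_all = sum(g.values()), sum(h.values())
fed_gain, fed_threshold = pick_best(party_b_bucket_sums(g, h), g_all, h_all)

# Centralised baseline: join both tables and search the same feature directly.
joined = {i: {**party_a[i], **party_b[i]} for i in ids}
cen_gain, cen_threshold = -math.inf, None
for t in sorted({row["income"] for row in joined.values()})[:-1]:
    rows = [grad_hess(r["label"]) for r in joined.values() if r["income"] <= t]
    score = gain(sum(x for x, _ in rows), sum(y for _, y in rows), g_all, h_all)
    if score > cen_gain:
        cen_gain, cen_threshold = score, t

print(fed_threshold, cen_threshold)
```

In this sketch party B never sees the labels and party A never sees the income values, yet both searches select the same threshold because the gain depends only on sums of gradients.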
