Rotation Invariant Point Cloud Classification: Where Local Geometry Meets Global Topology

Nov 01, 2019

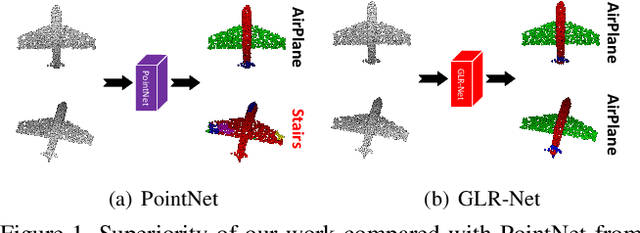

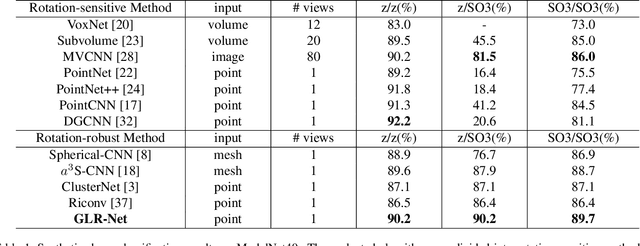

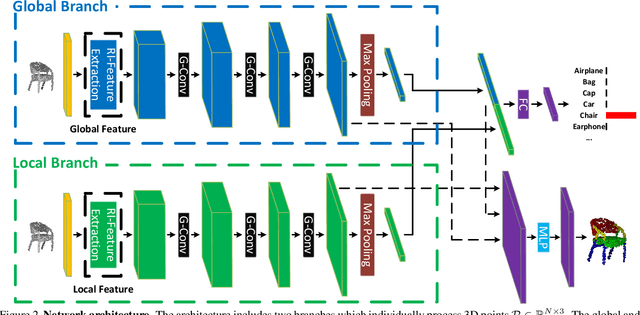

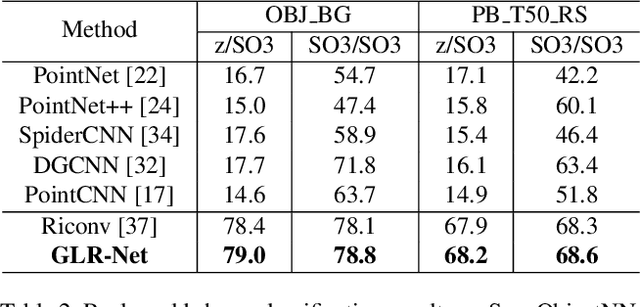

Point cloud analysis is a fundamental task in 3D computer vision and is attracting increasing research attention. Most previous works run experiments on synthetic datasets in which the data is well aligned. Real-world data, however, is often unaligned and subject to arbitrary SO(3) rotations. In this setting, most existing methods are ineffective because they are sensitive to coordinate changes. We therefore address rotation by presenting a combination of global and local representations that are invariant to rotation. We integrate this combination into a two-branch network in which high-dimensional features are extracted hierarchically. Compared with previous rotation-invariant works, the proposed representations effectively consider both global and local information. Extensive experiments demonstrate that our method achieves state-of-the-art performance on the rotation-augmented versions of ModelNet40, ShapeNet, and ScanObjectNN (a real-world dataset).
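To make the idea of a rotation-invariant representation concrete, here is a minimal NumPy sketch. It is not the representation proposed in the paper, which the abstract does not specify. It assumes two simple stand-ins: each point's distance to the centroid as a "global" cue, and its sorted distances to its `k` nearest neighbors as a "local" cue. The function names `random_rotation` and `invariant_features` are hypothetical and chosen only for illustration.

```python
import numpy as np


def random_rotation(rng):
    """Draw a random 3x3 rotation matrix (an element of SO(3))."""
    # QR of a Gaussian matrix yields an orthogonal Q.
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # normalize column signs
    if np.linalg.det(q) < 0:     # force determinant +1 (no reflection)
        q[:, 0] = -q[:, 0]
    return q


def invariant_features(points, k=4):
    """Illustrative features built only from distances.

    Assumed stand-ins, not the paper's method:
      global cue: distance of each point to the cloud centroid
      local cue:  sorted distances to the k nearest neighbors
    """
    centroid = points.mean(axis=0)
    global_feat = np.linalg.norm(points - centroid, axis=1)

    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    # Column 0 after sorting is the zero self-distance, so skip it.
    local_feat = np.sort(dists, axis=1)[:, 1:k + 1]

    return np.concatenate([global_feat[:, None], local_feat], axis=1)


rng = np.random.default_rng(0)
cloud = rng.normal(size=(128, 3))
rot = random_rotation(rng)
rotated = cloud @ rot.T  # apply the same rotation to every point

feats_a = invariant_features(cloud)
feats_b = invariant_features(rotated)

coords_changed = not np.allclose(cloud, rotated)
max_diff = np.abs(feats_a - feats_b).max()
print("coordinates changed:", coords_changed)
print("max feature difference:", max_diff)
```

Because rotations preserve Euclidean distances, the raw coordinates change while the distance-based features agree up to floating-point error. A network fed such features, rather than raw coordinates, would see the same input regardless of the object's orientation.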
