REO-VLM: Transforming VLM to Meet Regression Challenges in Earth Observation

Dec 21, 2024

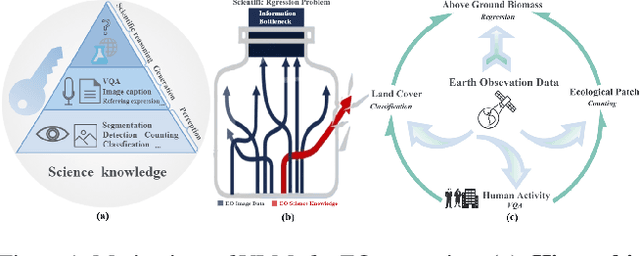

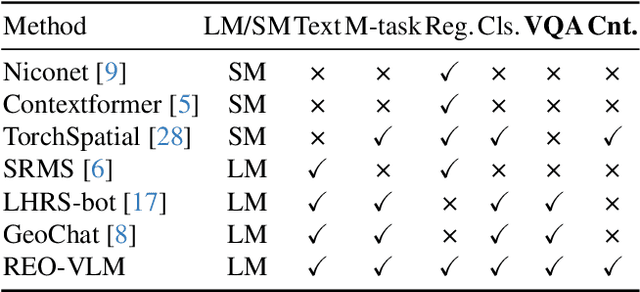

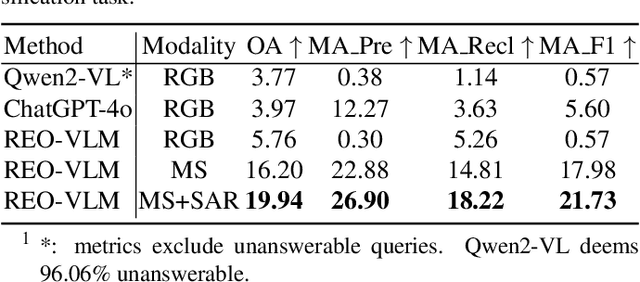

The rapid evolution of Vision Language Models (VLMs) has catalyzed significant advancements in artificial intelligence, expanding research across various disciplines, including Earth Observation (EO). While VLMs have enhanced image understanding and data processing within EO, their applications have predominantly focused on image content description. This limited focus overlooks their potential in geographic and scientific regression tasks, which are essential for diverse EO applications. To bridge this gap, this paper introduces a novel benchmark dataset, called **REO-Instruct**, to unify regression and generation tasks specifically for the EO domain. Comprising 1.6 million multimodal EO imagery and language pairs, this dataset is designed to support both biomass regression and image content interpretation tasks. Leveraging this dataset, we develop **REO-VLM**, a groundbreaking model that seamlessly integrates regression capabilities with traditional generative functions. By utilizing language-driven reasoning to incorporate scientific domain knowledge, REO-VLM goes beyond solely relying on EO imagery, enabling comprehensive interpretation of complex scientific attributes from EO data. This approach establishes new performance benchmarks and significantly enhances the capabilities of environmental monitoring and resource management.
