Predicting Distant Metastases in Soft-Tissue Sarcomas from PET-CT scans using Constrained Hierarchical Multi-Modality Feature Learning

Apr 23, 2021

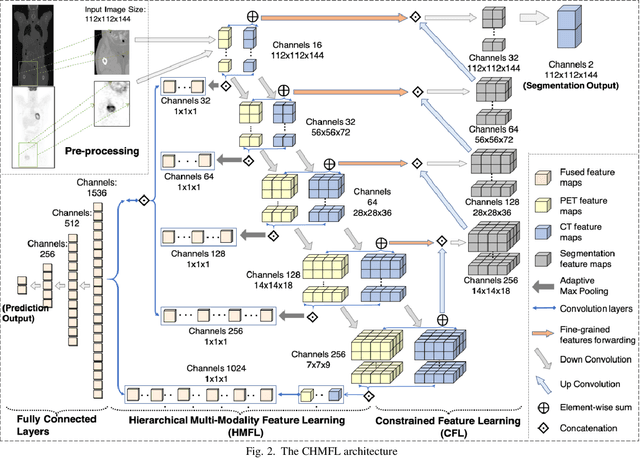

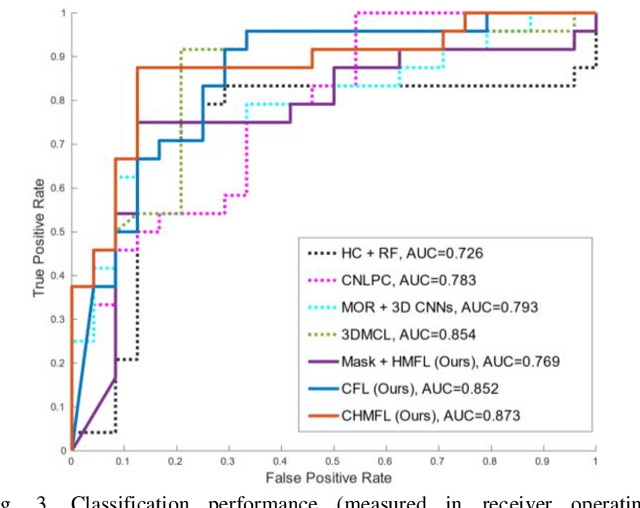

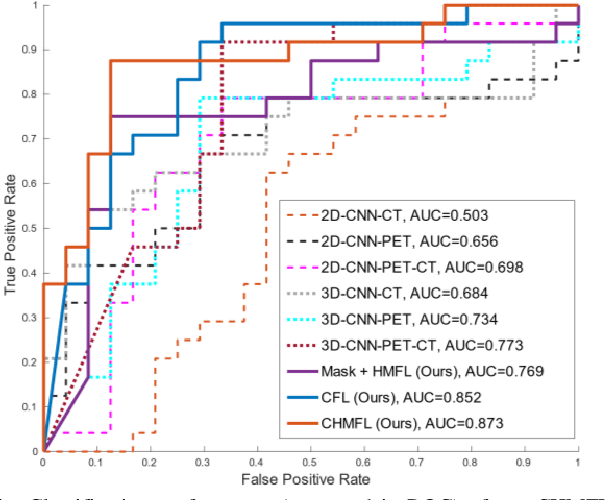

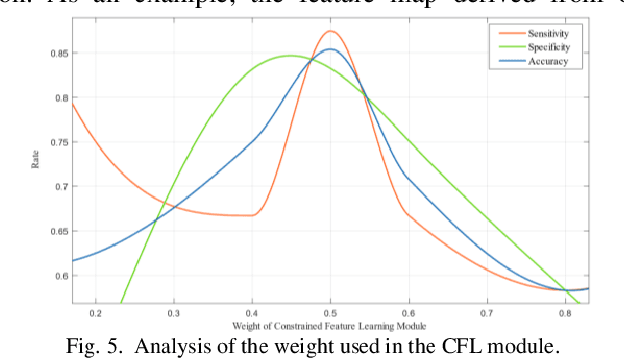

Distant metastases (DM) refer to the dissemination of tumors, usually beyond the organ in which the tumor originated. They are the leading cause of death in patients with soft-tissue sarcomas (STSs). Positron emission tomography-computed tomography (PET-CT) is regarded as the imaging modality of choice for the management of STSs. However, it is difficult to determine from imaging studies which STS patients will develop metastases. 'Radiomics' refers to the extraction and analysis of quantitative features from medical images, and it has been employed to help identify such tumors. The state of the art in radiomics is based on convolutional neural networks (CNNs). Most CNNs are designed for single-modality imaging data (CT or PET alone) and do not exploit the information embedded in PET-CT, which combines an anatomical and a functional imaging modality. Furthermore, most radiomic methods rely on manual input from imaging specialists for tumor delineation and for the definition and selection of radiomic features. This approach, however, may not scale to tumors with complex boundaries or to cases with multiple other sites of disease. We outline a new 3D CNN to help predict DM in STS patients from PET-CT data. The 3D CNN uses a constrained feature learning module and a hierarchical multi-modality feature learning module that leverages the complementary information from the two modalities to focus on semantically important regions. Our results on a public PET-CT dataset of STS patients show that multi-modal information improves the ability to identify those patients who develop DM. Furthermore, our method outperformed all other related state-of-the-art methods.
