One Neuron Saved Is One Neuron Earned: On Parametric Efficiency of Quadratic Networks

Paper and Code

Mar 11, 2023

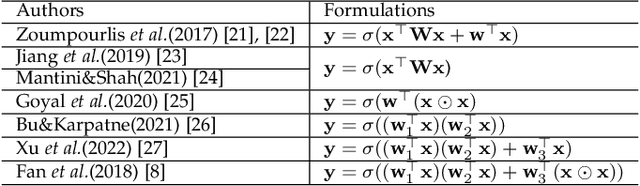

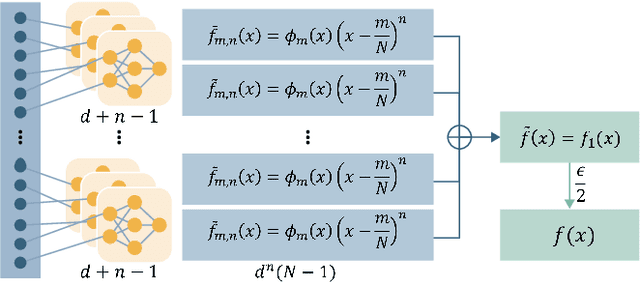

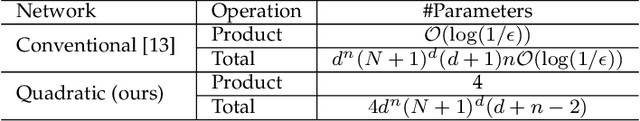

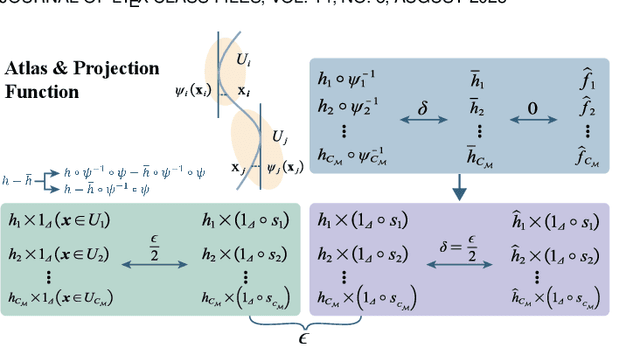

Inspired by neuronal diversity in the biological neural system, a plethora of studies proposed to design novel types of artificial neurons and introduce neuronal diversity into artificial neural networks. Recently proposed quadratic neuron, which replaces the inner-product operation in conventional neurons with a quadratic one, have achieved great success in many essential tasks. Despite the promising results of quadratic neurons, there is still an unresolved issue: \textit{Is the superior performance of quadratic networks simply due to the increased parameters or due to the intrinsic expressive capability?} Without clarifying this issue, the performance of quadratic networks is always suspicious. Additionally, resolving this issue is reduced to finding killer applications of quadratic networks. In this paper, with theoretical and empirical studies, we show that quadratic networks enjoy parametric efficiency, thereby confirming that the superior performance of quadratic networks is due to the intrinsic expressive capability. This intrinsic expressive ability comes from that quadratic neurons can easily represent nonlinear interaction, while it is hard for conventional neurons. Theoretically, we derive the approximation efficiency of the quadratic network over conventional ones in terms of real space and manifolds. Moreover, from the perspective of the Barron space, we demonstrate that there exists a functional space whose functions can be approximated by quadratic networks in a dimension-free error, but the approximation error of conventional networks is dependent on dimensions. Empirically, experimental results on synthetic data, classic benchmarks, and real-world applications show that quadratic models broadly enjoy parametric efficiency, and the gain of efficiency depends on the task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge