On the Fairness of Causal Algorithmic Recourse

Oct 14, 2020

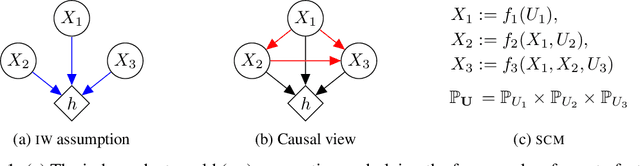

While many recent works have studied algorithmic fairness from the perspective of predictions, here we investigate the fairness of recourse actions recommended to individuals to recover from an unfavourable classification. To this end, we propose two new fairness criteria, at the group and individual level, which (unlike prior work on equalising the average distance from the decision boundary across protected groups) are based on a causal framework that explicitly models relationships between input features, thereby capturing the downstream effects of recourse actions performed in the physical world. We explore how our criteria relate to others, such as counterfactual fairness, and show that fairness of recourse is complementary to fairness of prediction. We then investigate how to enforce fair recourse in the training of the classifier. Finally, we discuss whether fairness violations in the data-generating process revealed by our criteria may be better addressed by societal interventions and structural changes to the system, as opposed to constraints on the classifier.
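The contrast between distance-based and causal recourse can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper or its code: a two-feature linear structural causal model (`X1 := U1`, `X2 := A * X1 + U2`), a linear classifier with hypothetical weights `W1`, `W2` and threshold `B`, recourse restricted to shifting `X1`, and a group-level gap computed as the absolute difference in average recourse cost among rejected individuals. It also assumes `W1 + W2 * A > 0`, so that increasing `X1` moves an individual towards approval.

```python
from statistics import mean

# Hypothetical toy setup (not from the paper): a linear SCM
#   X1 := U1,   X2 := A * X1 + U2
# and a linear classifier that approves when W1*x1 + W2*x2 >= B.
A, W1, W2, B = 1.0, 1.0, 1.0, 4.0


def gap_to_boundary(x1, x2):
    """Score deficit of an individual; zero if already approved."""
    return max(B - (W1 * x1 + W2 * x2), 0.0)


def independent_cost(x1, x2):
    """Smallest shift of X1 that reaches the boundary if X2 stays fixed."""
    return gap_to_boundary(x1, x2) / W1


def causal_cost(x1, x2):
    """Smallest shift of X1 when the shift also propagates to X2.

    Under the assumed SCM, intervening with X1 += d changes X2 by A * d,
    so the score rises by (W1 + W2 * A) * d instead of W1 * d.
    """
    return gap_to_boundary(x1, x2) / (W1 + W2 * A)


def group_recourse_gap(group_a, group_b, cost=causal_cost):
    """Absolute difference in mean recourse cost among rejected individuals.

    Each group must contain at least one rejected individual.
    """
    def average_cost(group):
        rejected = [(x1, x2) for x1, x2 in group if W1 * x1 + W2 * x2 < B]
        return mean([cost(x1, x2) for x1, x2 in rejected])

    return abs(average_cost(group_a) - average_cost(group_b))
```

In this toy setting, an individual at `(1.0, 1.0)` needs a shift of 2.0 in `X1` when features are treated as independent, but only 1.0 once the downstream effect on `X2` is counted. This is why a causal measure of recourse cost and a distance-to-boundary measure can disagree about how costly recourse is for a given group.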
