On the Convergence Theory of Meta Reinforcement Learning with Personalized Policies


Sep 21, 2022

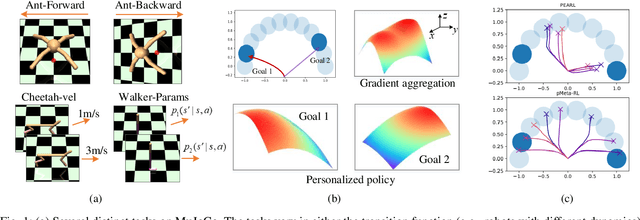

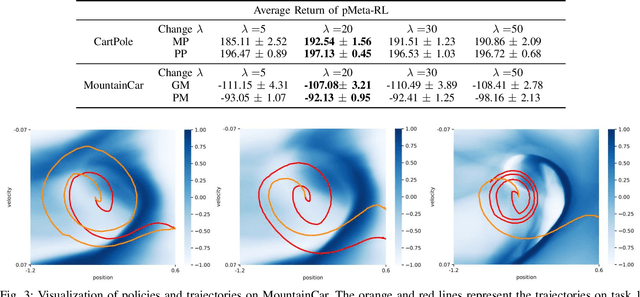

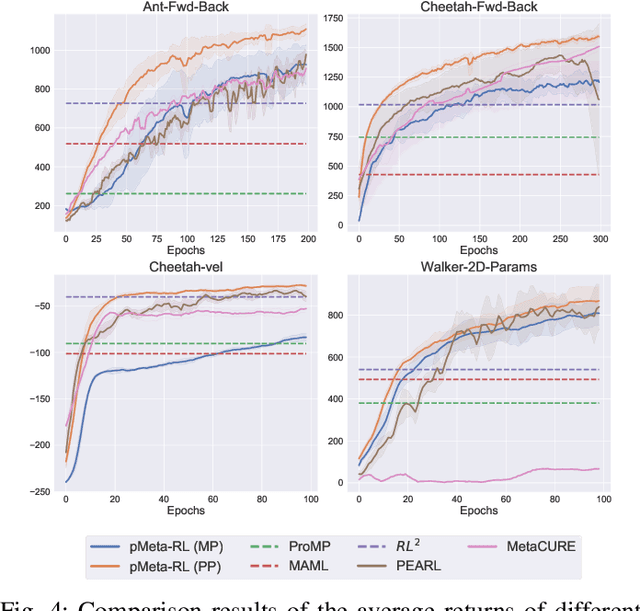

Modern meta-reinforcement learning (Meta-RL) methods are mainly based on model-agnostic meta-learning, which performs policy gradient steps across tasks to maximize policy performance. However, the gradient conflict problem remains poorly understood in Meta-RL and may degrade performance when the tasks are distinct. To tackle this challenge, this paper proposes a novel personalized Meta-RL (pMeta-RL) algorithm. It aggregates task-specific personalized policies to update a meta-policy shared by all tasks, while maintaining the personalized policies so that each maximizes the average return of its own task under the constraint of the meta-policy. We also provide a theoretical analysis in the tabular setting that demonstrates the convergence of the pMeta-RL algorithm. Moreover, we extend pMeta-RL to a deep network version based on soft actor-critic, making it suitable for continuous control tasks. Experimental results show that the proposed algorithms outperform previous Meta-RL algorithms on the Gym and MuJoCo suites.
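The abstract does not give the update rules, so the following is only a toy sketch of the general idea, not the paper's algorithm. It assumes one-step (bandit) tasks, tabular softmax policies, and a quadratic penalty `lam` that stands in for "the constraint of the meta-policy". The names `pmeta_sketch` and `expected_return_grad`, and all hyperparameters, are hypothetical.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - x.max())
    return z / z.sum()

def expected_return_grad(theta, rewards):
    # Exact gradient of sum_a pi(a) * r(a) for a softmax policy on a one-step task.
    pi = softmax(theta)
    return pi * (rewards - pi @ rewards)

def pmeta_sketch(task_rewards, lam=1.0, lr=0.5, meta_lr=0.5,
                 inner_steps=5, rounds=200):
    """Toy personalized meta-learning loop (illustrative assumption only).

    task_rewards: array of shape (n_tasks, n_actions), one reward vector per task.
    Returns the meta-policy logits and the per-task personalized logits.
    """
    n_tasks, n_actions = task_rewards.shape
    meta = np.zeros(n_actions)
    personal = np.zeros((n_tasks, n_actions))
    for _ in range(rounds):
        for i in range(n_tasks):
            for _ in range(inner_steps):
                # Ascend the task return while staying close to the meta-policy
                # (assumed quadratic proximity penalty, weight lam).
                g = expected_return_grad(personal[i], task_rewards[i])
                g -= lam * (personal[i] - meta)
                personal[i] += lr * g
        # Aggregate: move the meta-policy toward the mean personalized policy.
        meta += meta_lr * (personal.mean(axis=0) - meta)
    return meta, personal
```

With two tasks whose rewards conflict, for example `np.array([[1.0, 0.0], [0.0, 1.0]])`, each personalized policy in this sketch leans toward its own task's better action, while the shared meta-policy stays between them instead of being pulled back and forth by opposing gradients.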
