NNoculation: Broad Spectrum and Targeted Treatment of Backdoored DNNs

Paper and Code

Feb 19, 2020

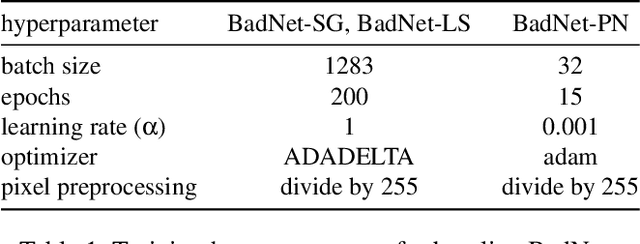

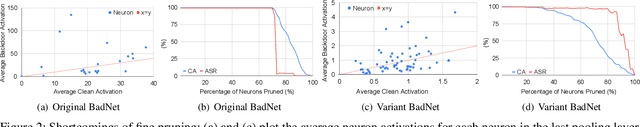

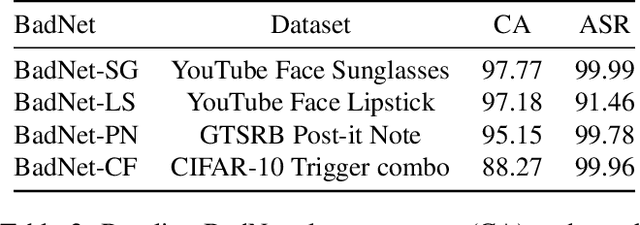

This paper proposes a novel two-stage defense (NNoculation) against backdoored neural networks (BadNets) that, unlike existing defenses, makes minimal assumptions about the shape, size, and location of backdoor triggers and about how the BadNet functions. In the pre-deployment stage, NNoculation retrains the network using "broad-spectrum" random perturbations of inputs drawn from a clean validation set to partially reduce the adversarial impact of a backdoor. In the post-deployment stage, NNoculation detects and quarantines backdoored test inputs by recording disagreements between the original network and the pre-deployment patched network. A CycleGAN is then trained to learn transformations between clean validation inputs and quarantined inputs; i.e., it learns to add triggers to clean validation images. This transformed set of backdoored validation images, along with their correct labels, is used to further retrain the BadNet, yielding our final defense. NNoculation outperforms the state-of-the-art defenses NeuralCleanse and Artificial Brain Stimulation (ABS), which we show are ineffective when the attacker circumvents their restrictive assumptions.
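The first two steps described above, random perturbation of clean validation inputs and quarantining by model disagreement, can be illustrated with a minimal NumPy sketch. Everything in it is an assumption made for illustration and is not taken from the paper's code: the Gaussian noise model, the function names `perturb` and `quarantine`, and the two toy classifiers standing in for the original BadNet and the pre-deployment patched network. The retraining and CycleGAN steps are omitted.

```python
import numpy as np


def perturb(images, noise_std, rng):
    """Add zero-mean Gaussian noise to images in [0, 1], then clip to range.

    Gaussian noise is an assumed stand-in for the "broad-spectrum"
    random perturbations mentioned in the abstract.
    """
    noisy = images + rng.normal(0.0, noise_std, size=images.shape)
    return np.clip(noisy, 0.0, 1.0)


def quarantine(predict_original, predict_patched, inputs):
    """Return a boolean mask of inputs on which the two models disagree."""
    return predict_original(inputs) != predict_patched(inputs)


# Hypothetical toy models: the "BadNet" outputs class 1 whenever the
# top-left pixel is saturated (a stand-in trigger); the "patched"
# network ignores that pixel and always outputs class 0.
def toy_badnet(x):
    return (x[:, 0, 0] > 0.9).astype(int)


def toy_patched(x):
    return np.zeros(len(x), dtype=int)


rng = np.random.default_rng(0)
clean = rng.random((4, 8, 8)) * 0.5      # pixel values stay below 0.5
noisy = perturb(clean, noise_std=0.1, rng=rng)

test_inputs = clean.copy()
test_inputs[:2, 0, 0] = 1.0              # stamp the trigger on two inputs

mask = quarantine(toy_badnet, toy_patched, test_inputs)
quarantined = test_inputs[mask]          # would feed the CycleGAN stage
```

In this toy setup only the two triggered inputs are quarantined. In practice the disagreement set could also contain clean inputs that the two networks simply classify differently, so it should be treated as a noisy sample of backdoored data.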
