Near-Optimal Fair Resource Allocation for Strategic Agents without Money: A Data-Driven Approach


Nov 18, 2023

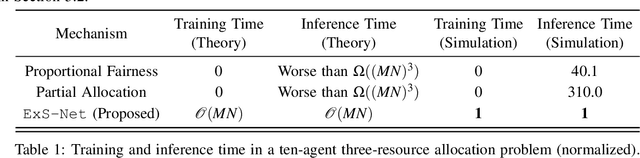

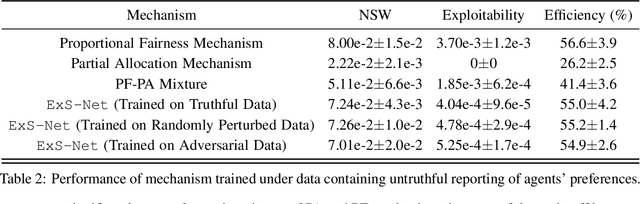

We study the learning-based design of fair allocation mechanisms for divisible resources, using proportional fairness (PF) as a benchmark. The learning setting departs significantly from the classic mechanism design literature in that fair mechanisms must be learned solely from data. In particular, we consider the challenging problem of learning one-shot allocation mechanisms, without the use of money, that incentivize strategic agents to report their valuations truthfully. It is well known that the mechanism that directly optimizes PF is not incentive compatible: agents can misreport their preferences to gain larger allocations. We introduce the notion of "exploitability" of a mechanism to measure the relative gain in utility from misreporting, and make the following contributions: (i) Using techniques inspired by the differentiable convex programming literature, we design a numerically efficient approach for computing the exploitability of the PF mechanism. This enables us to quantify the gap that must be bridged to approximate PF via incentive compatible mechanisms. (ii) We modify the PF mechanism to introduce a trade-off between fairness and exploitability. By controlling this trade-off using data, we show that the proposed mechanism, ExPF-Net, provides a strong approximation to the PF mechanism while maintaining low exploitability. This mechanism, however, comes with a high computational cost. (iii) To address the computational challenges, we propose another mechanism, ExS-Net, which is parameterized end-to-end by a neural network. ExS-Net achieves similar (slightly inferior) performance with significantly faster training and inference. (iv) Extensive numerical simulations demonstrate the robustness and efficacy of the proposed mechanisms.
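To make the notions of PF and exploitability concrete, here is a minimal sketch under stated assumptions. It is not the paper's method. It assumes linear (additive) utilities and one unit of each resource, neither of which the abstract specifies. It approximates the PF allocation with proportional-response dynamics, a standard iterative scheme swapped in here for the differentiable convex programming approach the abstract mentions. It measures exploitability for a single agent, on a single valuation profile, over a caller-supplied list of candidate misreports. The names `pf_allocation`, `utility`, and `exploitability` are hypothetical.

```python
def pf_allocation(values, iters=2000):
    """Approximate the PF allocation (maximize the sum of log utilities)
    with proportional-response dynamics. Assumes linear utilities, unit
    supply of each resource, and at least one positive value per agent."""
    n, m = len(values), len(values[0])
    # Every agent starts with a unit budget spread evenly over resources.
    bids = [[1.0 / m] * m for _ in range(n)]
    alloc = [[1.0 / n] * m for _ in range(n)]
    for _ in range(iters):
        # Price of a resource is the total amount bid on it.
        prices = [sum(bids[i][j] for i in range(n)) for j in range(m)]
        # Each agent receives a share proportional to its bid.
        alloc = [[bids[i][j] / prices[j] if prices[j] > 0 else 0.0
                  for j in range(m)] for i in range(n)]
        # Rebid in proportion to the utility each resource contributed.
        for i in range(n):
            u = sum(values[i][j] * alloc[i][j] for j in range(m))
            bids[i] = [values[i][j] * alloc[i][j] / u for j in range(m)]
    return alloc


def utility(v, x):
    """Linear utility of bundle x under valuation v."""
    return sum(vj * xj for vj, xj in zip(v, x))


def exploitability(values, agent, candidates):
    """Relative utility gain of `agent` from its best misreport among
    `candidates`, evaluated under its true valuation (assumed definition)."""
    truthful = utility(values[agent], pf_allocation(values)[agent])
    best = truthful  # reporting truthfully is always available
    for report in candidates:
        reported = [list(v) for v in values]
        reported[agent] = list(report)
        outcome = pf_allocation(reported)[agent]
        best = max(best, utility(values[agent], outcome))
    return (best - truthful) / truthful


# Two agents with opposite preferences over two resources.
values = [[2.0, 1.0], [1.0, 2.0]]
# Hypothetical misreport grid for agent 0: (w, 1 - w) for w = 0.05..0.95.
candidates = [[w / 20, 1 - w / 20] for w in range(1, 20)]
gain = exploitability(values, 0, candidates)
print(f"relative gain from misreporting: {gain:.3f}")
```

On this profile the truthful PF outcome gives each agent its preferred resource. Agent 0 can do strictly better by shading its report toward agent 1's preferences, so the printed gain is positive. A grid search like this scales poorly with the number of resources, which is one reason an efficient exploitability computation matters.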
