Multiple Object Tracking by Flowing and Fusing

Paper and Code

Jan 30, 2020

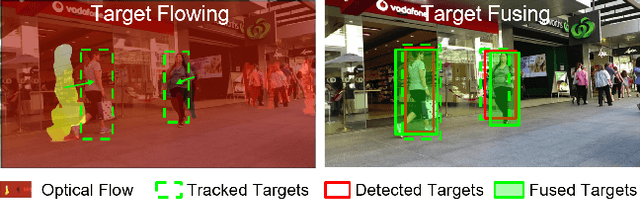

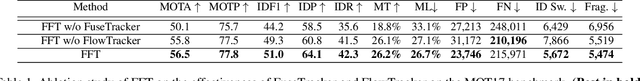

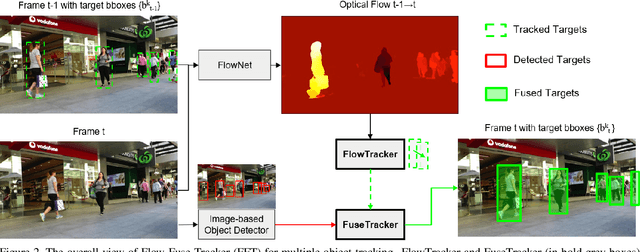

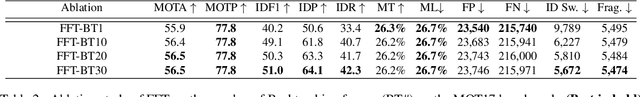

Most of Multiple Object Tracking (MOT) approaches compute individual target features for two subtasks: estimating target-wise motions and conducting pair-wise Re-Identification (Re-ID). Because of the indefinite number of targets among video frames, both subtasks are very difficult to scale up efficiently in end-to-end Deep Neural Networks (DNNs). In this paper, we design an end-to-end DNN tracking approach, Flow-Fuse-Tracker (FFT), that addresses the above issues with two efficient techniques: target flowing and target fusing. Specifically, in target flowing, a FlowTracker DNN module learns the indefinite number of target-wise motions jointly from pixel-level optical flows. In target fusing, a FuseTracker DNN module refines and fuses targets proposed by FlowTracker and frame-wise object detection, instead of trusting either of the two inaccurate sources of target proposal. Because FlowTracker can explore complex target-wise motion patterns and FuseTracker can refine and fuse targets from FlowTracker and detectors, our approach can achieve the state-of-the-art results on several MOT benchmarks. As an online MOT approach, FFT produced the top MOTA of 46.3 on the 2DMOT15, 56.5 on the MOT16, and 56.5 on the MOT17 tracking benchmarks, surpassing all the online and offline methods in existing publications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge