Model-Agnostic Few-Shot Open-Set Recognition

Paper and Code

Jun 18, 2022

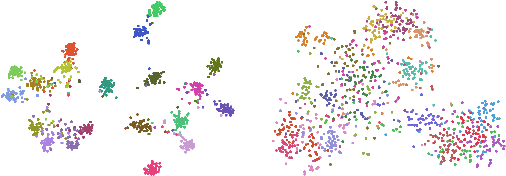

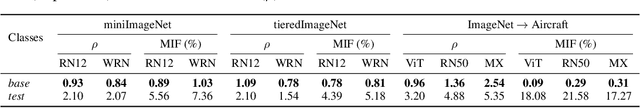

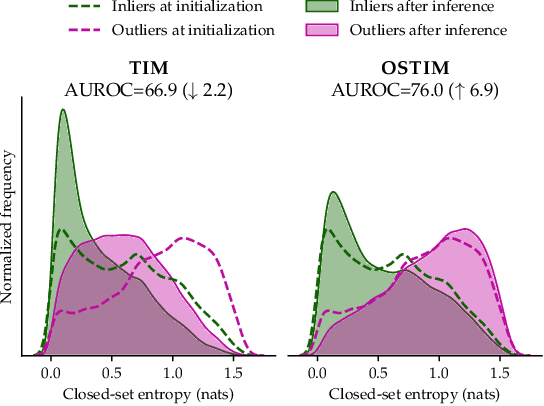

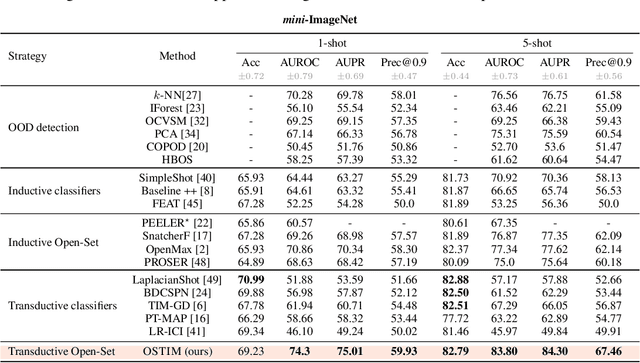

We tackle the Few-Shot Open-Set Recognition (FSOSR) problem, i.e. classifying instances among a set of classes for which we only have few labeled samples, while simultaneously detecting instances that do not belong to any known class. Departing from existing literature, we focus on developing model-agnostic inference methods that can be plugged into any existing model, regardless of its architecture or its training procedure. Through evaluating the embedding's quality of a variety of models, we quantify the intrinsic difficulty of model-agnostic FSOSR. Furthermore, a fair empirical evaluation suggests that the naive combination of a kNN detector and a prototypical classifier ranks before specialized or complex methods in the inductive setting of FSOSR. These observations motivated us to resort to transduction, as a popular and practical relaxation of standard few-shot learning problems. We introduce an Open Set Transductive Information Maximization method OSTIM, which hallucinates an outlier prototype while maximizing the mutual information between extracted features and assignments. Through extensive experiments spanning 5 datasets, we show that OSTIM surpasses both inductive and existing transductive methods in detecting open-set instances while competing with the strongest transductive methods in classifying closed-set instances. We further show that OSTIM's model agnosticity allows it to successfully leverage the strong expressive abilities of the latest architectures and training strategies without any hyperparameter modification, a promising sign that architectural advances to come will continue to positively impact OSTIM's performances.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge