MaxSup: Overcoming Representation Collapse in Label Smoothing

Paper and Code

Feb 18, 2025

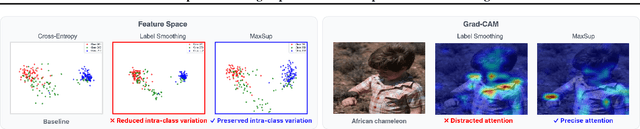

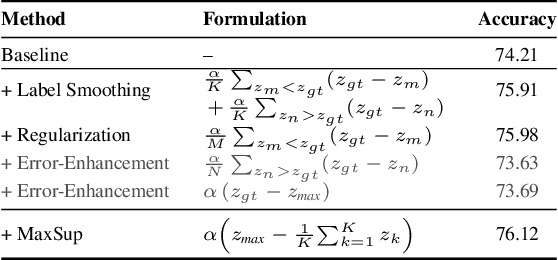

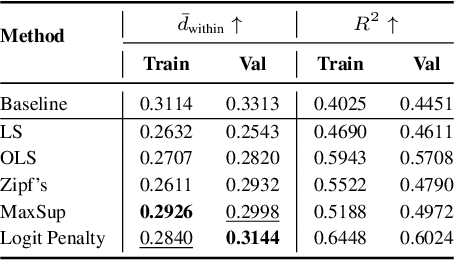

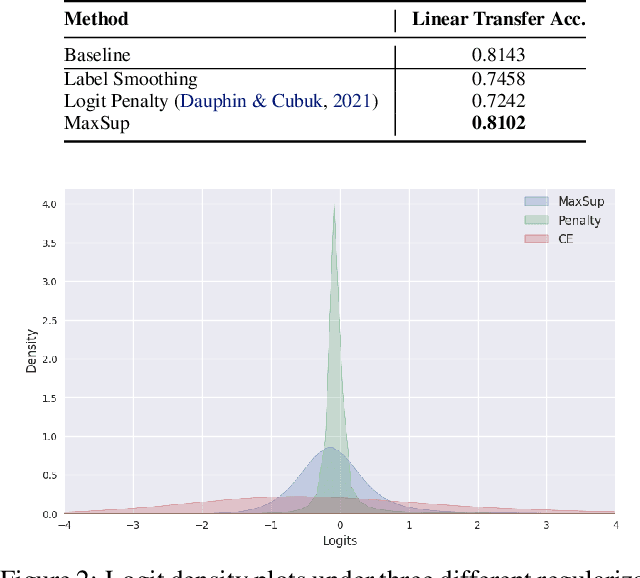

Label Smoothing (LS) is widely adopted to curb overconfidence in neural network predictions and enhance generalization. However, previous research shows that LS can force feature representations into excessively tight clusters, eroding intra-class distinctions. More recent findings suggest that LS also induces overconfidence in misclassifications, yet the precise mechanism remained unclear. In this work, we decompose the loss term introduced by LS, revealing two key components: (i) a regularization term that functions only when the prediction is correct, and (ii) an error-enhancement term that emerges under misclassifications. This latter term compels the model to reinforce incorrect predictions with exaggerated certainty, further collapsing the feature space. To address these issues, we propose Max Suppression (MaxSup), which uniformly applies the intended regularization to both correct and incorrect predictions by penalizing the top-1 logit instead of the ground-truth logit. Through feature analyses, we show that MaxSup restores intra-class variation and sharpens inter-class boundaries. Extensive experiments on image classification and downstream tasks confirm that MaxSup is a more robust alternative to LS. Code is available at: https://github.com/ZhouYuxuanYX/Maximum-Suppression-Regularization.
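The decomposition described above can be illustrated numerically. The following is a minimal NumPy sketch, not the authors' implementation (see the linked repository for that). It assumes the common smoothing convention in which the target is (1 - alpha) times the one-hot label plus alpha / K spread uniformly over K classes. Under that assumption, the smoothed cross-entropy equals the plain cross-entropy plus an extra term alpha * (z_gt - mean(z)), where z_gt is the ground-truth logit. The function names, the example logits, and the value of alpha are all illustrative. The MaxSup penalty is written as alpha * (z_max - mean(z)), following the abstract's description of penalizing the top-1 logit instead of the ground-truth logit.

```python
import numpy as np

def log_softmax(z):
    # Numerically stable log-softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def cross_entropy(z, y):
    # Plain cross-entropy with hard (one-hot) labels, per sample.
    return -log_softmax(z)[np.arange(len(y)), y]

def smoothed_cross_entropy(z, y, alpha):
    # Assumed convention: target = (1 - alpha) * one_hot + alpha / K.
    k = z.shape[-1]
    target = np.full_like(z, alpha / k)
    target[np.arange(len(y)), y] += 1.0 - alpha
    return -(target * log_softmax(z)).sum(axis=-1)

def ls_penalty(z, y, alpha):
    # Extra term implied by label smoothing: acts on the ground-truth logit.
    return alpha * (z[np.arange(len(y)), y] - z.mean(axis=-1))

def maxsup_penalty(z, alpha):
    # MaxSup-style term (sketch): acts on the top-1 logit instead.
    return alpha * (z.max(axis=-1) - z.mean(axis=-1))

# Illustrative logits: row 0 is classified correctly, row 1 is not.
logits = np.array([[3.0, 1.0, 0.5],   # true class 0, predicted class 0
                   [0.5, 2.5, 1.0]])  # true class 0, predicted class 1
labels = np.array([0, 0])
alpha = 0.1

ce = cross_entropy(logits, labels)
ls = ls_penalty(logits, labels, alpha)
ms = maxsup_penalty(logits, alpha)

# Smoothed CE decomposes exactly into CE plus the LS penalty.
print(np.allclose(smoothed_cross_entropy(logits, labels, alpha), ce + ls))
print("LS penalty:    ", ls)
print("MaxSup penalty:", ms)
```

For the correctly classified sample the two penalties coincide, because the ground-truth logit is also the largest logit. For the misclassified sample the LS term becomes negative: it no longer penalizes the (wrong) top-1 logit, and its gradient with respect to that logit is -alpha / K, so gradient descent on it raises the wrong logit. The MaxSup-style term stays positive and keeps penalizing the top-1 logit in both cases.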
