mAnI: Movie Amalgamation using Neural Imitation

Paper and Code

Aug 16, 2017

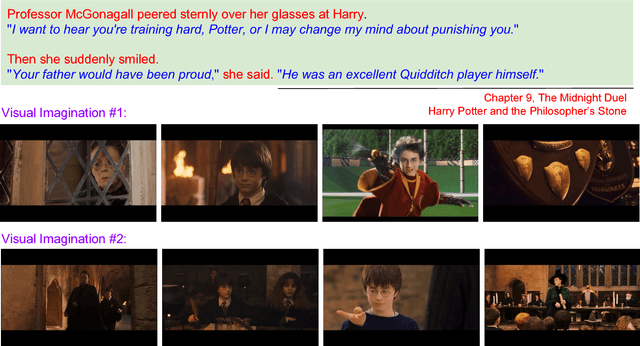

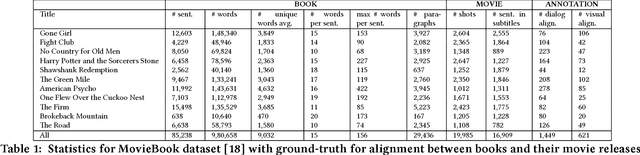

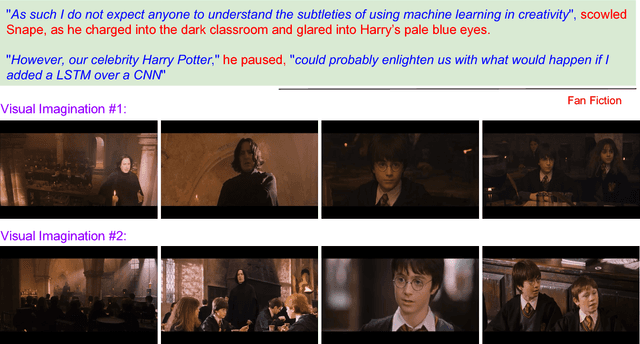

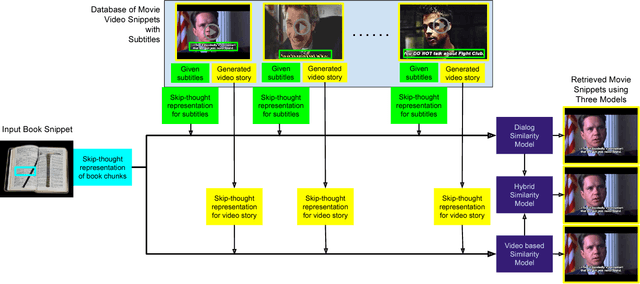

Cross-modal data retrieval has been the basis of various creative tasks performed by Artificial Intelligence (AI). One such highly challenging task for AI is to convert a book into its corresponding movie, which most of the creative film makers do as of today. In this research, we take the first step towards it by visualizing the content of a book using its corresponding movie visuals. Given a set of sentences from a book or even a fan-fiction written in the same universe, we employ deep learning models to visualize the input by stitching together relevant frames from the movie. We studied and compared three different types of setting to match the book with the movie content: (i) Dialog model: using only the dialog from the movie, (ii) Visual model: using only the visual content from the movie, and (iii) Hybrid model: using the dialog and the visual content from the movie. Experiments on the publicly available MovieBook dataset shows the effectiveness of the proposed models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge