Learning to Ask for Data-Efficient Event Argument Extraction


Oct 01, 2021

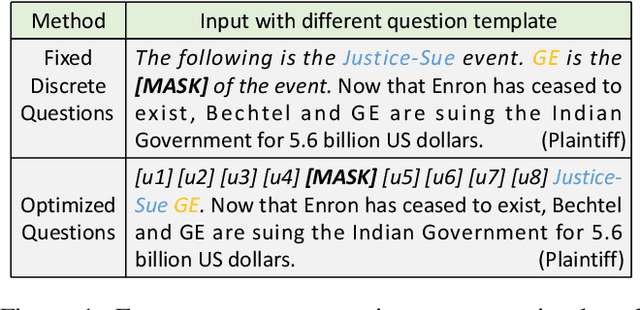

Event argument extraction (EAE) is an important information extraction task that aims to identify the arguments filling specific roles in an event. In this study, we cast EAE as a question-based cloze task and empirically analyze the performance of fixed, discrete-token templates. Because creating human-annotated question templates is often time-consuming and labor-intensive, we further propose a novel approach called "Learning to Ask," which learns optimized question templates for EAE without human annotations. Experiments on the ACE-2005 dataset demonstrate that our method, based on optimized questions, achieves state-of-the-art performance in both the few-shot and supervised settings.
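To make the question-based cloze formulation concrete, here is a minimal sketch that turns one event mention and one argument role into a cloze input with a single mask slot for the answer. It assumes hand-written templates in the spirit of the fixed, discrete-token setting. The `TEMPLATES` table, the `EventMention` type, the `build_cloze_input` helper, and the `[MASK]` token are hypothetical illustrations. They are not the paper's templates or code, and the learned templates of "Learning to Ask" are not modeled here.

```python
from dataclasses import dataclass

# Hypothetical mask token; a real masked LM defines its own (e.g. "[MASK]" for BERT-style models).
MASK = "[MASK]"

# Hypothetical hand-written question templates keyed by (event type, role).
# Role names are loosely modeled on ACE-2005 Attack roles; these are NOT the paper's templates.
TEMPLATES = {
    ("Attack", "Attacker"): "Who is the attacker in the event triggered by '{trigger}'?",
    ("Attack", "Target"): "What is the target in the event triggered by '{trigger}'?",
    ("Attack", "Place"): "Where does the event triggered by '{trigger}' take place?",
}


@dataclass
class EventMention:
    sentence: str    # the source sentence containing the event
    trigger: str     # the word that evokes the event
    event_type: str  # the event type label


def build_cloze_input(mention: EventMention, role: str) -> str:
    """Turn one (event mention, role) pair into a question-based cloze string."""
    question = TEMPLATES[(mention.event_type, role)].format(trigger=mention.trigger)
    # The model would be asked to fill the mask slot with the argument for this role.
    return f"{mention.sentence} {question} Answer: {MASK}"


if __name__ == "__main__":
    m = EventMention(
        sentence="Rebels attacked the convoy near Kabul.",
        trigger="attacked",
        event_type="Attack",
    )
    for role in ("Attacker", "Target", "Place"):
        print(role, "->", build_cloze_input(m, role))
```

In a full system, a masked language model would then score candidate fillers for the mask slot. That scoring step, and any template optimization, is omitted from this sketch.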

* work in progress
