Label-guided Learning for Text Classification

Paper and Code

Feb 25, 2020

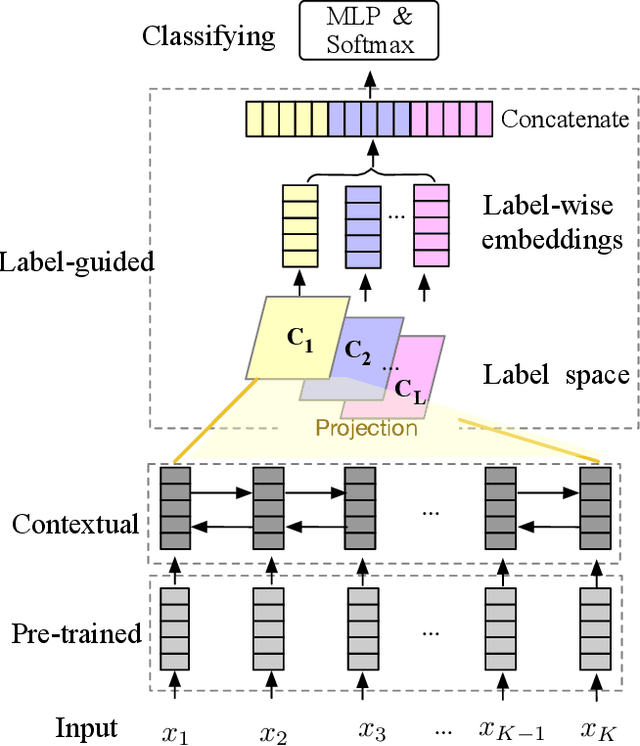

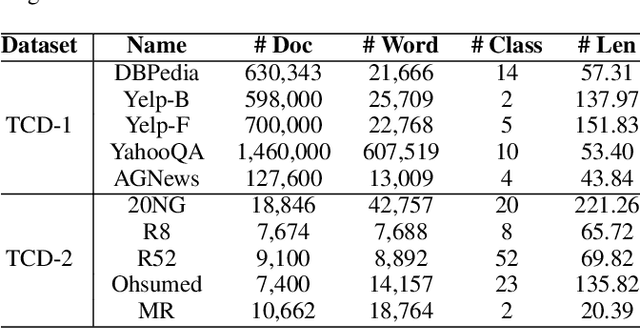

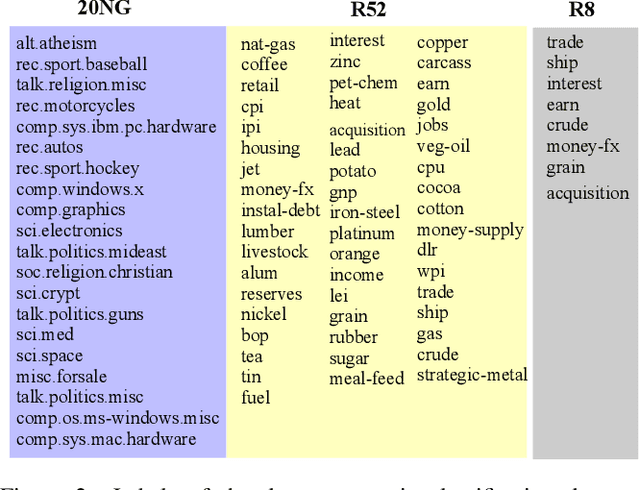

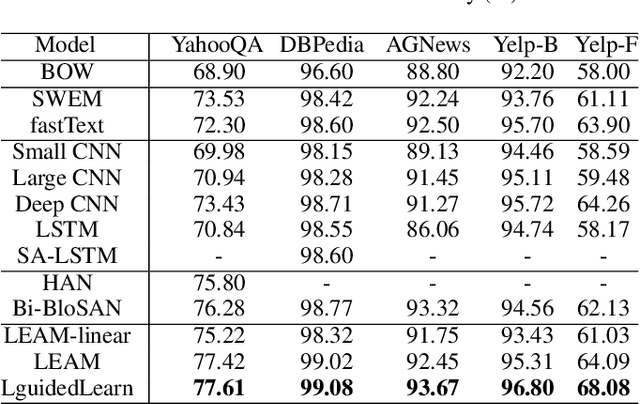

Text classification is one of the most important and fundamental tasks in natural language processing. Performance on this task depends mainly on text representation learning. Most existing learning frameworks focus on encoding local contextual information between words, and they typically neglect global clues, such as label information, when encoding text. In this study, we propose LguidedLearn, a label-guided learning framework for text representation and classification. Our method is novel yet simple: we only insert a label-guided encoding layer into commonly used text representation learning schemas. This label-guided layer performs label-based attentive encoding to map the universal text embedding (encoded by a contextual information learner) into different label spaces, resulting in label-wise embeddings. In our proposed framework, the label-guided layer can be easily and directly combined with a contextual encoding method to perform joint learning. Text information is thus encoded based on both local contextual information and global label clues, so the obtained text embeddings are more robust and discriminative for text classification. Extensive experiments on benchmark datasets illustrate the effectiveness of our proposed method.
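The label-based attentive encoding described above can be illustrated with a minimal NumPy sketch. The abstract does not specify the attention form, so everything here is an assumption made for illustration: dot-product scoring between label embeddings and contextual word states, a softmax over words, and the hypothetical names `label_guided_encode`, `word_states`, and `label_embeddings`. It is not the authors' implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def label_guided_encode(word_states, label_embeddings):
    """Map contextual word states into one embedding per label.

    word_states:      (n_words, d) output of some contextual encoder (assumed).
    label_embeddings: (n_labels, d) one vector per label (assumed, hypothetical).
    Returns label-wise embeddings (n_labels, d) and attention (n_labels, n_words).
    """
    # Assumption: dot-product compatibility between each label and each word.
    scores = label_embeddings @ word_states.T          # (n_labels, n_words)
    # Each label distributes attention over the words of the text.
    attention = softmax(scores, axis=1)                # rows sum to 1
    # Label-wise embedding: attention-weighted sum of word states.
    label_wise = attention @ word_states               # (n_labels, d)
    return label_wise, attention


# Toy input: 6 words, 3 labels, hidden size 8 (random stand-ins for real encodings).
rng = np.random.default_rng(0)
word_states = rng.normal(size=(6, 8))
label_embeddings = rng.normal(size=(3, 8))

label_wise, attention = label_guided_encode(word_states, label_embeddings)
print(label_wise.shape)  # (3, 8)
```

In this sketch each row of `label_wise` is the text as seen from one label's perspective; a classifier could then score each label from its own row, which is one plausible reading of "label-wise embeddings".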
