Industrial Scale Privacy Preserving Deep Neural Network

Paper and Code

Mar 12, 2020

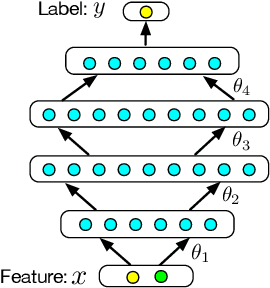

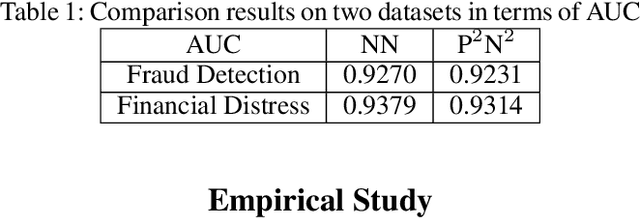

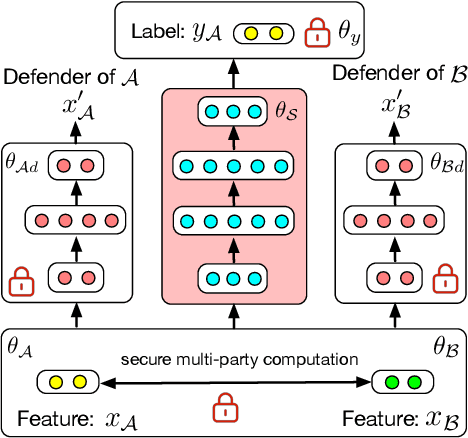

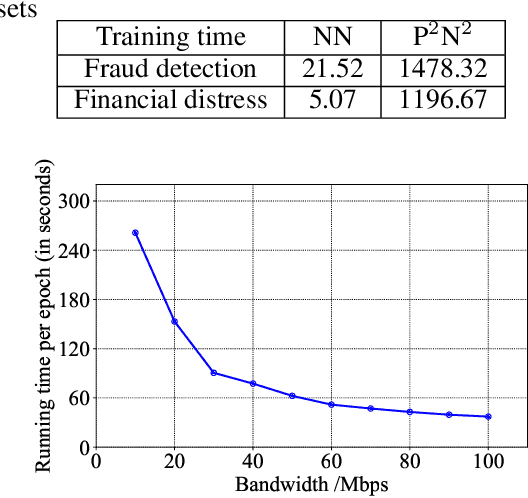

Deep Neural Network (DNN) has been showing great potential in kinds of real-world applications such as fraud detection and distress prediction. Meanwhile, data isolation has become a serious problem currently, i.e., different parties cannot share data with each other. To solve this issue, most research leverages cryptographic techniques to train secure DNN models for multi-parties without compromising their private data. Although such methods have strong security guarantee, they are difficult to scale to deep networks and large datasets due to its high communication and computation complexities. To solve the scalability of the existing secure Deep Neural Network (DNN) in data isolation scenarios, in this paper, we propose an industrial scale privacy preserving neural network learning paradigm, which is secure against semi-honest adversaries. Our main idea is to split the computation graph of DNN into two parts, i.e., the computations related to private data are performed by each party using cryptographic techniques, and the rest computations are done by a neutral server with high computation ability. We also present a defender mechanism for further privacy protection. We conduct experiments on real-world fraud detection dataset and financial distress prediction dataset, the encouraging results demonstrate the practicalness of our proposal.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge