HazeCLIP: Towards Language Guided Real-World Image Dehazing


Jul 18, 2024

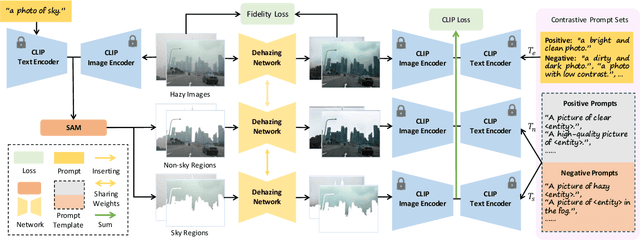

Existing methods have achieved remarkable performance in single image dehazing, particularly on synthetic datasets. However, they often struggle with real-world hazy images due to domain shift, which limits their practical applicability. This paper introduces HazeCLIP, a language-guided adaptation framework designed to enhance the real-world performance of pre-trained dehazing networks. Inspired by the ability of the Contrastive Language-Image Pre-training (CLIP) model to distinguish between hazy and clean images, we use it to evaluate dehazing results. Combined with a region-specific dehazing technique and tailored prompt sets, the CLIP model accurately identifies hazy areas, providing a high-quality, human-like prior that guides the fine-tuning of pre-trained networks. Extensive experiments demonstrate that HazeCLIP achieves state-of-the-art performance in real-world image dehazing, as evaluated by both visual quality and no-reference quality assessment. The code is available at https://github.com/Troivyn/HazeCLIP.
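The idea of using CLIP to tell hazy regions from clean ones can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes that image-region embeddings and the two prompt embeddings have already been produced by some CLIP-like encoder, and the function names (`haze_probability`, `region_haze_map`), the toy vectors, and the temperature value are all hypothetical choices made for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def haze_probability(image_emb, hazy_prompt_emb, clean_prompt_emb,
                     temperature=0.01):
    """Softmax over similarities to a 'hazy' and a 'clean' prompt.

    Returns the probability mass assigned to the 'hazy' prompt.
    The temperature is an assumed value, not taken from the paper.
    """
    s_hazy = cosine(image_emb, hazy_prompt_emb) / temperature
    s_clean = cosine(image_emb, clean_prompt_emb) / temperature
    m = max(s_hazy, s_clean)  # subtract the max for numerical stability
    e_hazy = math.exp(s_hazy - m)
    e_clean = math.exp(s_clean - m)
    return e_hazy / (e_hazy + e_clean)

def region_haze_map(region_embs, hazy_prompt_emb, clean_prompt_emb):
    """Score every region in a 2D grid of embeddings (hypothetical layout)."""
    return [
        [haze_probability(e, hazy_prompt_emb, clean_prompt_emb) for e in row]
        for row in region_embs
    ]

# Toy embeddings standing in for real encoder outputs.
hazy_prompt = [1.0, 0.0]
clean_prompt = [0.0, 1.0]
regions = [[[0.9, 0.1], [0.1, 0.9]]]
haze_map = region_haze_map(regions, hazy_prompt, clean_prompt)
```

In a fine-tuning setup of the kind the abstract describes, a per-region score like this could serve as a signal that penalizes outputs still judged hazy. How HazeCLIP actually builds its prompts and loss is described in the paper and repository, not in this sketch.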
