Graph-based Facial Affect Analysis: A Review of Methods, Applications and Challenges

Paper and Code

Apr 12, 2021

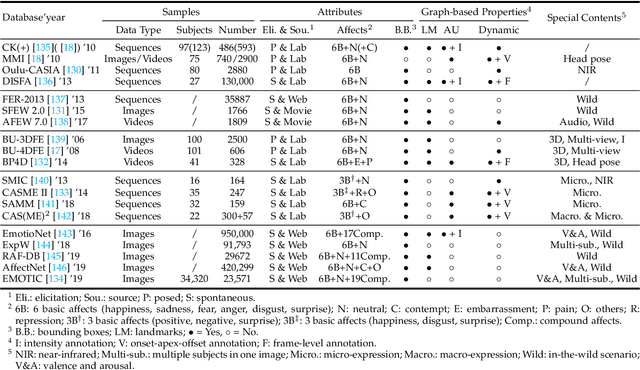

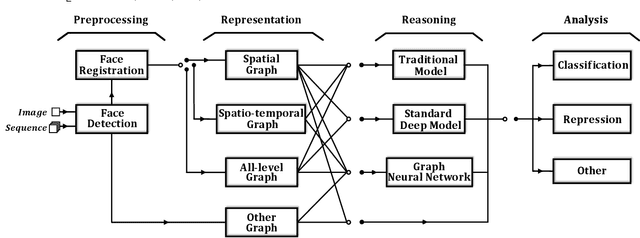

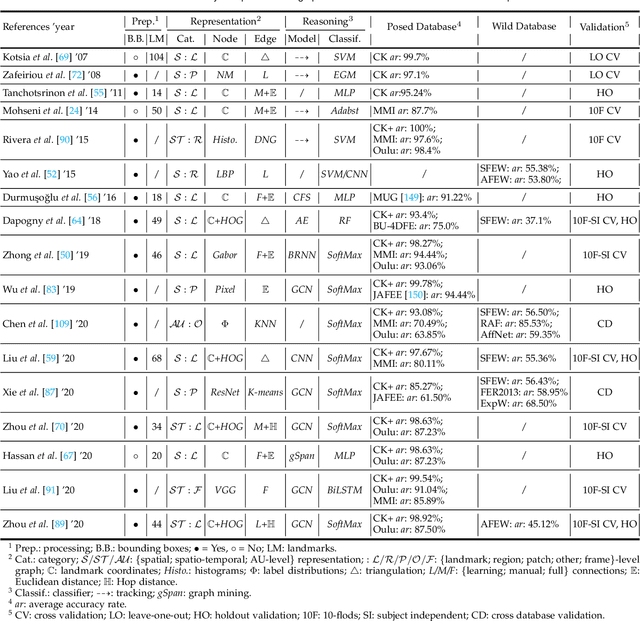

Facial affect analysis (FAA) using visual signals is a key step in human-computer interactions. Early methods mainly focus on extracting appearance and geometry features associated with human affects, while ignore the latent semantic information among individual facial changes, leading to limited performance and generalization. Recent trends attempt to establish a graph-based representation to model these semantic relationships and develop learning frameworks to leverage it for different FAA tasks. In this paper, we provide a comprehensive review of graph-based FAA, including the evolution of algorithms and their applications. First, we introduce the background knowledge of facial affect analysis, especially on the role of graph. We then discuss approaches that are widely used for graph-based affective representation in literatures and show a trend towards graph construction. For the relational reasoning in graph-based FAA, we categorize the existing studies according to their usage of traditional methods or deep models, with a special emphasis on latest graph neural networks. Experimental comparisons of the state-of-the-art on standard FAA problems are also summarized. Finally, we discuss the challenges and potential directions. As far as we know, this is the first survey of graph-based FAA methods, and our findings can serve as a reference point for future research in this field.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge