EvEval: A Comprehensive Evaluation of Event Semantics for Large Language Models

Paper and Code

May 24, 2023

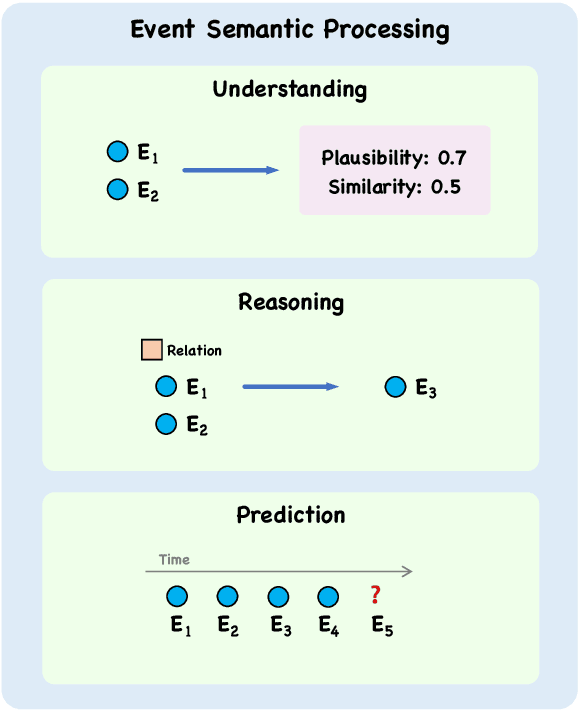

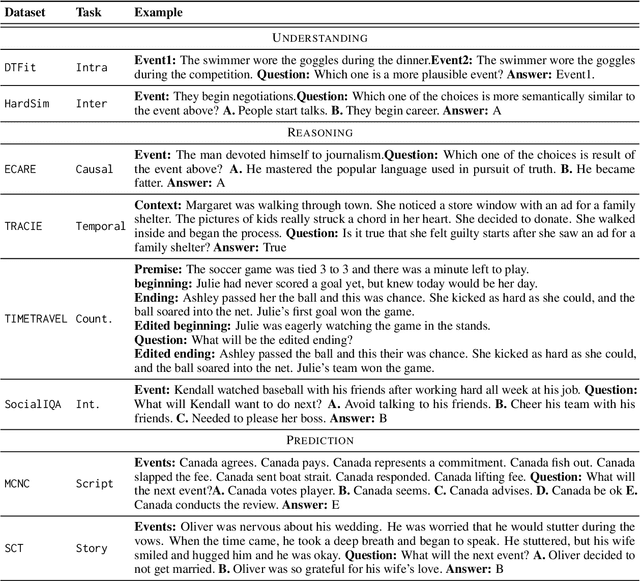

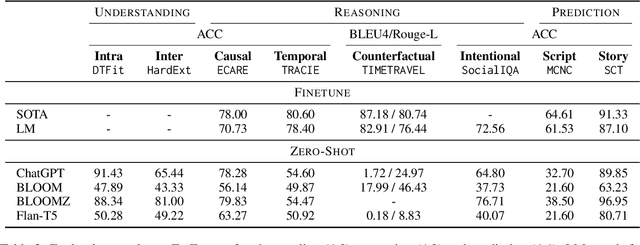

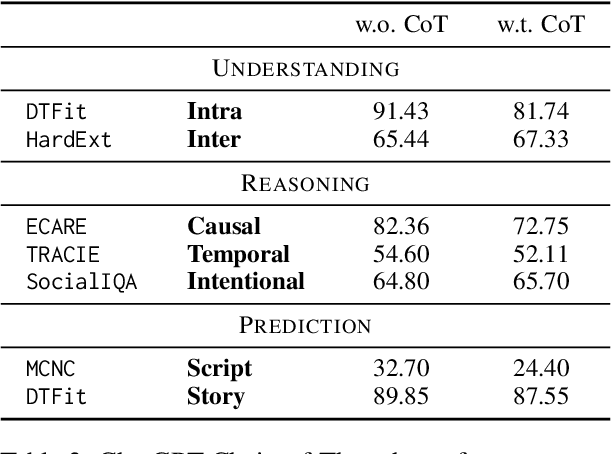

Events serve as fundamental units of occurrence within various contexts. The processing of event semantics in textual information forms the basis of numerous natural language processing (NLP) applications. Recent studies have begun leveraging large language models (LLMs) to address event semantic processing. However, the extent that LLMs can effectively tackle these challenges remains uncertain. Furthermore, the lack of a comprehensive evaluation framework for event semantic processing poses a significant challenge in evaluating these capabilities. In this paper, we propose an overarching framework for event semantic processing, encompassing understanding, reasoning, and prediction, along with their fine-grained aspects. To comprehensively evaluate the event semantic processing abilities of models, we introduce a novel benchmark called EVEVAL. We collect 8 datasets that cover all aspects of event semantic processing. Extensive experiments are conducted on EVEVAL, leading to several noteworthy findings based on the obtained results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge