Differential privacy for protecting patient data in speech disorder detection using deep learning

Sep 27, 2024

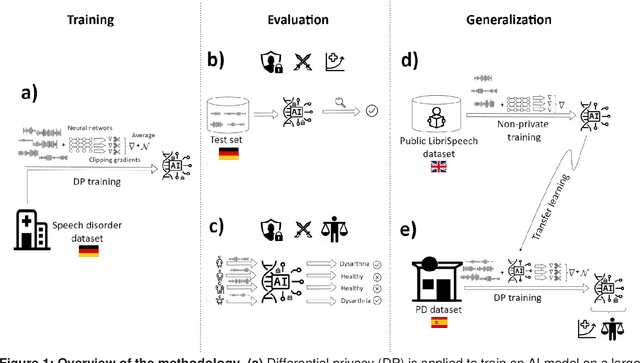

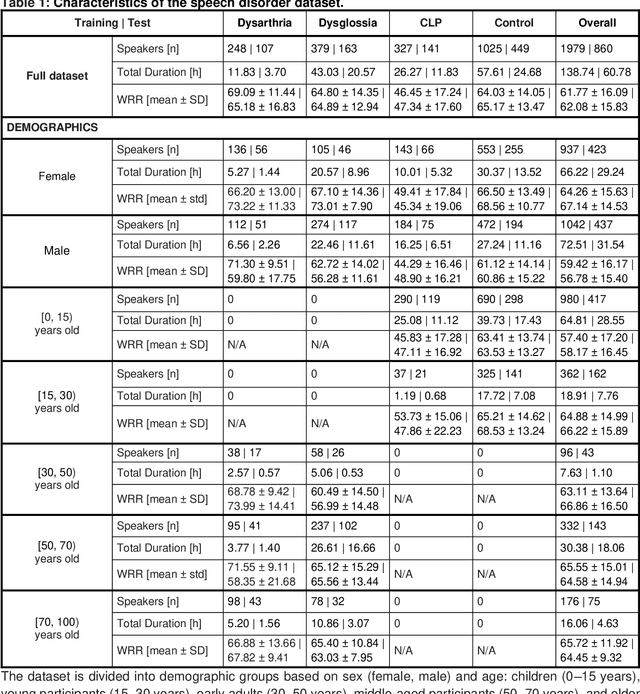

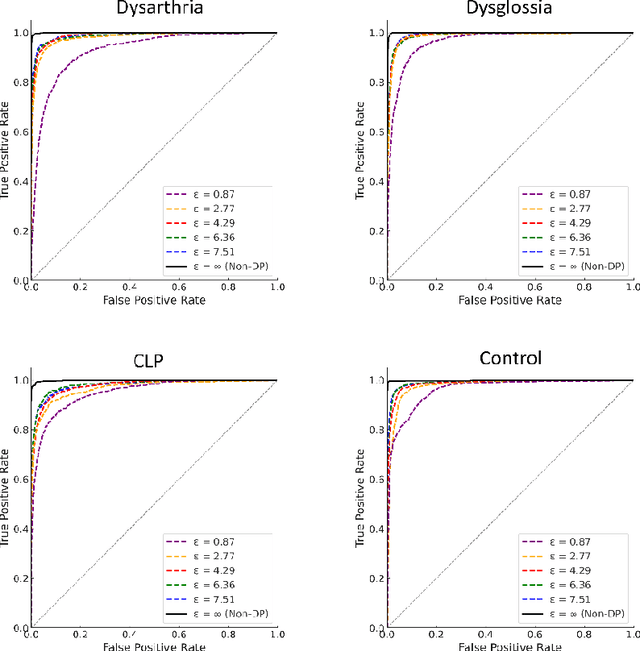

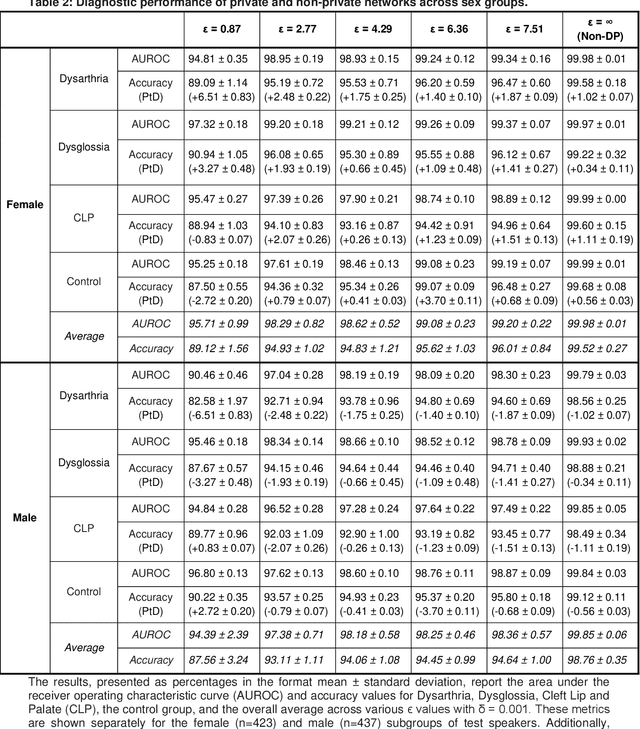

Speech pathologies impair communication abilities and quality of life. While deep learning-based models have shown potential in diagnosing these disorders, their reliance on sensitive patient data raises critical privacy concerns. Differential privacy (DP) has been explored in the medical imaging domain, but its application to pathological speech analysis remains largely unexplored even though the privacy concerns are equally critical. This study is the first to investigate the impact of DP on pathological speech data, focusing on the trade-offs between privacy, diagnostic accuracy, and fairness. Using a large, real-world dataset of 200 hours of recordings from 2,839 German-speaking participants, we observed a maximum accuracy reduction of 3.85% when training with DP at a privacy budget of $\epsilon = 7.51$. To generalize our findings, we validated our approach on a smaller dataset of Spanish-speaking Parkinson's disease patients, demonstrating that careful pretraining on large-scale task-specific datasets can maintain or even improve model accuracy under DP constraints. We also conducted a comprehensive fairness analysis, which revealed that reasonable privacy levels ($2 < \epsilon < 10$) do not introduce significant gender bias, though age-related disparities may require further attention. Our results suggest that DP can effectively balance privacy and utility in speech disorder detection, but they also highlight challenges unique to the speech domain, particularly regarding the privacy-fairness trade-off. This provides a foundation for future work to refine DP methodologies and address fairness across diverse patient groups in real-world deployments.
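The abstract does not say which DP training algorithm was used. As an illustration of what "training with DP" commonly involves, the following is a minimal sketch of one DP-SGD-style update (per-example gradient clipping plus Gaussian noise), assuming a plain logistic regression model in place of the paper's deep network. The function name `dp_sgd_step` and the parameters `clip_norm` and `noise_multiplier` are hypothetical and chosen for this example only. Converting a noise level into a privacy budget such as the reported 7.51 requires a separate privacy accountant, which is not shown here.

```python
import numpy as np


def dp_sgd_step(weights, features, labels, lr=0.1, clip_norm=1.0,
                noise_multiplier=1.0, rng=None):
    """One DP-SGD-style update for logistic regression (illustrative only)."""
    rng = np.random.default_rng() if rng is None else rng

    # Per-example gradients of the logistic loss: (sigmoid(x.w) - y) * x
    probs = 1.0 / (1.0 + np.exp(-(features @ weights)))
    per_example_grads = (probs - labels)[:, None] * features

    # Clip each example's gradient so its L2 norm is at most clip_norm.
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale

    # Sum the clipped gradients, add Gaussian noise whose standard deviation
    # is noise_multiplier * clip_norm, then average over the batch.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=weights.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / len(features)

    return weights - lr * noisy_mean
```

Clipping bounds how much any single recording can move the model, and the added noise masks whatever influence remains. A larger `noise_multiplier` generally corresponds to a smaller privacy budget (stronger privacy) at the cost of accuracy, which is the kind of trade-off the abstract quantifies.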
