Detection under Privileged Information

Mar 31, 2018

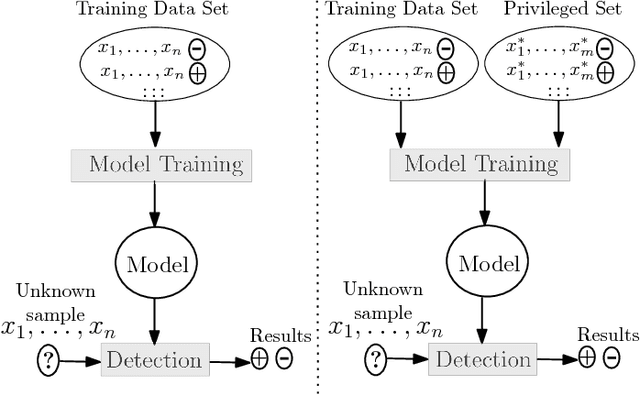

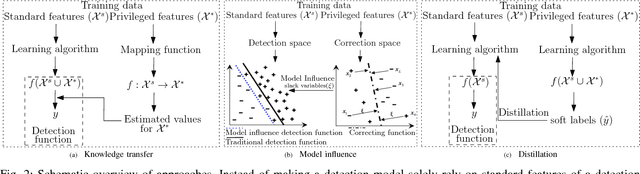

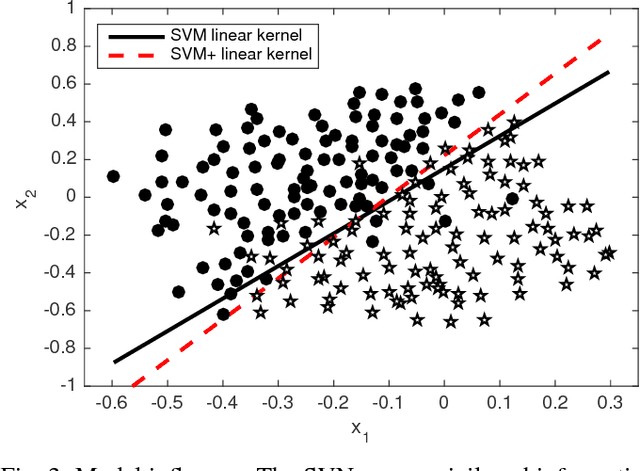

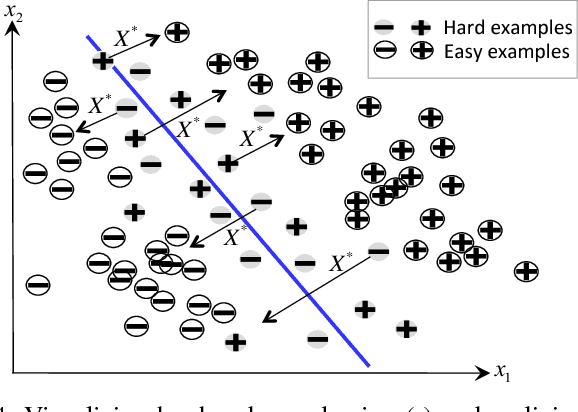

For well over a quarter century, detection systems have been driven by models learned from input features collected from real or simulated environments. At runtime, an artifact (e.g., a network event, potential malware sample, or suspicious email) is deemed malicious or non-malicious based on its similarity to the learned model. However, model training has historically been limited to those features that are also available at runtime. In this paper, we consider an alternative learning approach that trains models using "privileged" information (features available at training time but not at runtime) to improve the accuracy and resilience of detection systems. In particular, we adapt and extend recent advances in knowledge transfer, model influence, and distillation to enable the use of forensic or other data unavailable at runtime in a range of security domains. An empirical evaluation shows that privileged information increases precision and recall over a system with no privileged information: we observe up to a 7.7% relative decrease in detection error for fast-flux bot detection, 8.6% for malware traffic detection, 7.3% for malware classification, and 16.9% for face recognition. We also explore the limitations and applications of different privileged information techniques in detection systems. Such techniques give detection systems a new means to learn from data that would otherwise be unusable because it is unavailable at runtime.
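To make the idea of training with features that are absent at runtime more concrete, the following is a minimal sketch of one common distillation-style formulation. It is not the paper's implementation. It assumes a teacher trained on privileged features, whose temperature-softened outputs are blended with the true labels to train a student that sees only the standard features. The synthetic data, the logistic-regression models, and the names `make_data`, `distill`, `temperature`, and `imitation` are all illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logit(w, b, x):
    # Linear score of sample x under weights w and bias b.
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_logreg(X, targets, epochs=200, lr=0.1):
    """Fit logistic regression by batch gradient descent on cross-entropy.

    Targets may be hard labels (0/1) or soft probabilities in [0, 1].
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for x, t in zip(X, targets):
            err = sigmoid(logit(w, b, x)) - t
            for j in range(d):
                gw[j] += err * x[j]
            gb += err
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def distill(X_std, X_priv, y, temperature=2.0, imitation=0.5):
    """Train a teacher on privileged features, then a student on standard ones.

    temperature and imitation are hypothetical hyperparameters for this sketch.
    """
    # 1. The teacher sees the privileged features (training time only).
    teacher = train_logreg(X_priv, y)
    # 2. Soften the teacher's outputs with a temperature.
    soft = [sigmoid(logit(*teacher, x) / temperature) for x in X_priv]
    # 3. The student sees only standard features and fits a blend of
    #    the hard labels and the teacher's soft labels.
    targets = [(1 - imitation) * yi + imitation * si for yi, si in zip(y, soft)]
    student = train_logreg(X_std, targets)
    return teacher, student


def predict(model, x):
    # Hard decision: 1 (malicious) if the score is non-negative, else 0.
    return 1 if logit(*model, x) >= 0 else 0


def make_data(n, rng):
    # Synthetic assumption: the privileged feature is a cleaner view of the
    # class signal; the standard features are noisier, one is pure noise.
    X_std, X_priv, y = [], [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 1.0 if label else -1.0
        X_priv.append([center + rng.gauss(0, 0.3)])
        X_std.append([center + rng.gauss(0, 1.0), rng.gauss(0, 1.0)])
        y.append(label)
    return X_std, X_priv, y


if __name__ == "__main__":
    rng = random.Random(0)
    X_std, X_priv, y = make_data(200, rng)
    _, student = distill(X_std, X_priv, y)
    X_test, _, y_test = make_data(200, rng)  # privileged features unused here
    acc = sum(predict(student, x) == t for x, t in zip(X_test, y_test)) / len(y_test)
    print(f"student accuracy on standard features only: {acc:.3f}")
```

Note that the student is evaluated using standard features alone, which mirrors the runtime constraint described above. Whether the soft labels actually help depends on the data and on the choice of `temperature` and `imitation`; this toy setup makes no claim about the size of any improvement.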
