Communication Resources Constrained Hierarchical Federated Learning for End-to-End Autonomous Driving


Jun 28, 2023

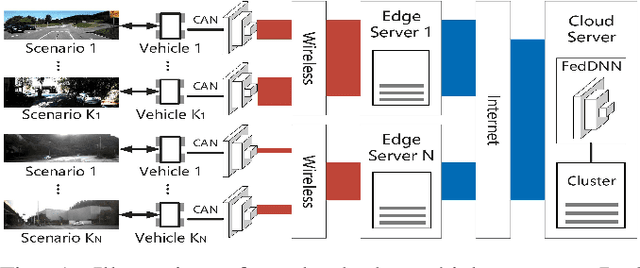

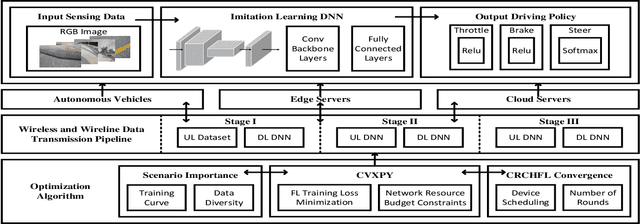

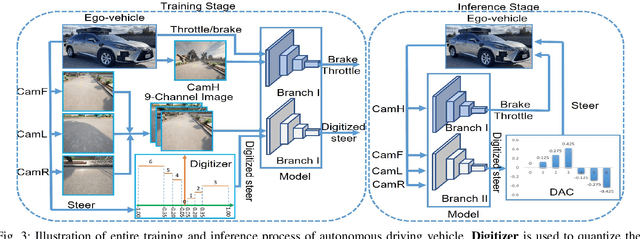

While federated learning (FL) improves the generalization of end-to-end autonomous driving through model aggregation, conventional single-hop FL (SFL) suffers from a slow convergence rate due to long-range communication between vehicles and the cloud server. Hierarchical federated learning (HFL) overcomes this drawback by introducing intermediate edge servers. However, balancing constrained communication resources against HFL performance becomes an urgent problem. This paper proposes an optimization-based Communication Resource Constrained Hierarchical Federated Learning (CRCHFL) framework that minimizes the generalization error of the autonomous driving model using hybrid data and model aggregation. The effectiveness of the proposed CRCHFL is evaluated on the Car Learning to Act (CARLA) simulation platform. Results show that CRCHFL both accelerates convergence and enhances the generalization of the federated autonomous driving model. Moreover, under the same communication resource budget, it outperforms HFL by 10.33% and SFL by 12.44%.
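To make the hierarchical idea concrete, the following is a minimal sketch of two-level model aggregation: vehicles are averaged at their edge server, and the edge models are then averaged at the cloud. It is not the CRCHFL method itself. The sample-size weighting (FedAvg-style), the flat parameter vectors, and the names `weighted_average` and `hierarchical_aggregate` are illustrative assumptions, and the paper's hybrid data aggregation and communication resource optimization are not modeled here.

```python
from typing import List, Sequence, Tuple

Weights = List[float]  # assumption: a model is a flat list of parameters


def weighted_average(models: Sequence[Weights], sizes: Sequence[int]) -> Weights:
    """Sample-size-weighted average of parameter vectors (FedAvg-style)."""
    if not models or len(models) != len(sizes):
        raise ValueError("models and sizes must be non-empty and equal in length")
    total = sum(sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(models[0])
    return [sum(m[i] * n for m, n in zip(models, sizes)) / total for i in range(dim)]


def hierarchical_aggregate(
    edge_groups: Sequence[Tuple[Sequence[Weights], Sequence[int]]]
) -> Weights:
    """One pass of vehicle -> edge -> cloud aggregation.

    Each entry of edge_groups holds the vehicle models and the vehicle
    sample counts attached to one (hypothetical) edge server.
    """
    edge_models: List[Weights] = []
    edge_sizes: List[int] = []
    for models, sizes in edge_groups:
        # Edge level: aggregate the vehicles served by this edge server.
        edge_models.append(weighted_average(models, sizes))
        edge_sizes.append(sum(sizes))
    # Cloud level: aggregate the edge models, weighted by the data behind each.
    return weighted_average(edge_models, edge_sizes)


if __name__ == "__main__":
    groups = [
        ([[1.0, 2.0], [3.0, 4.0]], [1, 1]),  # edge server A, two vehicles
        ([[5.0, 6.0]], [2]),                 # edge server B, one vehicle
    ]
    print(hierarchical_aggregate(groups))
```

In a typical HFL schedule the edge-level step runs for several rounds over short-range links before a single cloud-level step, so the long-range link is used less often. The sketch collapses this into one pass for brevity.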
