Bi-discriminator Domain Adversarial Neural Networks with Class-Level Gradient Alignment


Nov 01, 2023

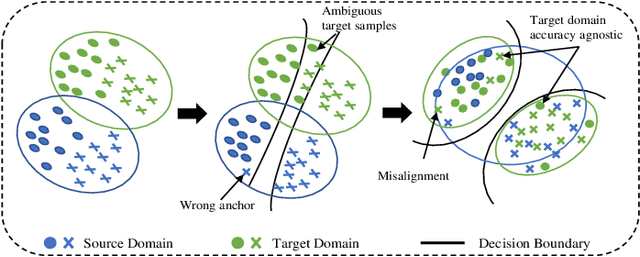

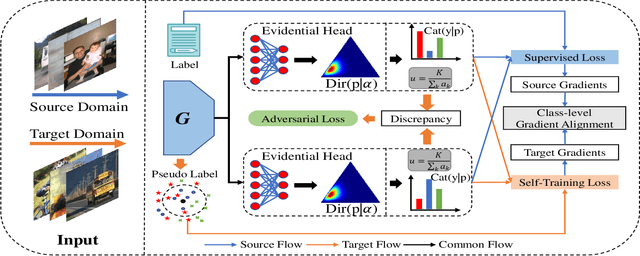

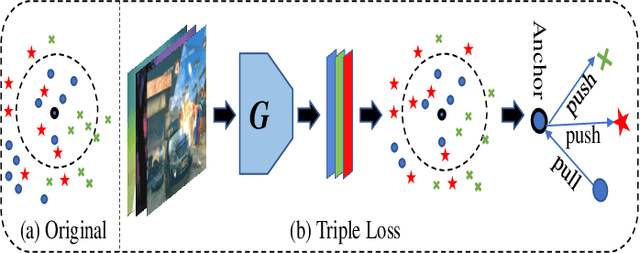

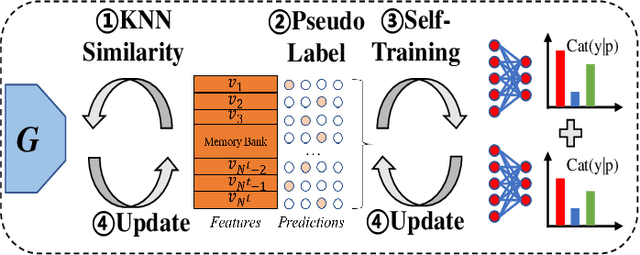

Unsupervised domain adaptation aims to transfer rich knowledge from an annotated source domain to an unlabeled target domain with the same label space. One prevalent solution is the bi-discriminator domain adversarial network, which strives to identify target domain samples outside the support of the source domain distribution and enforces consistent classification of those samples by both discriminators. Although effective, this approach is accuracy-agnostic and produces overconfident estimates for out-of-distribution samples, which hinders further performance improvement. To address these challenges, we propose a novel bi-discriminator domain adversarial neural network with class-level gradient alignment, i.e., BACG. BACG uses gradient signals and second-order probability estimation to better align the domain distributions. Specifically, for accuracy-awareness, we first design an optimizable nearest neighbor algorithm to obtain pseudo-labels for samples in the target domain, and then align the backward gradients of the two discriminators at the class level. Furthermore, following evidential learning theory, we transform the traditional softmax-based optimization method into a Multinomial Dirichlet hierarchical model that infers both the class probability distribution and sample uncertainty, thereby alleviating misestimation of out-of-distribution samples and guaranteeing high-quality class alignment. In addition, inspired by contrastive learning, we develop a memory bank-based variant, i.e., Fast-BACG, which greatly shortens the training process at the cost of a minor decrease in accuracy. Extensive experiments on four benchmark data sets, together with detailed theoretical analysis, validate the effectiveness and robustness of our algorithm.
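To make the Dirichlet-based uncertainty idea concrete, the following is a minimal sketch of the standard evidential deep learning formulation, not the BACG implementation itself. It assumes a softplus activation to turn raw class scores into non-negative evidence and the common uncertainty definition u = K / S, where K is the number of classes and S is the Dirichlet strength. The function name `dirichlet_evidence` and these choices are illustrative assumptions; the abstract does not specify them.

```python
import math


def dirichlet_evidence(logits):
    """Map raw class scores to Dirichlet parameters, expected class
    probabilities, and a scalar uncertainty (hypothetical helper)."""
    # Assumption: softplus converts scores into non-negative evidence.
    # Written in a numerically stable form: max(z, 0) + log1p(exp(-|z|)).
    evidence = [max(z, 0.0) + math.log1p(math.exp(-abs(z))) for z in logits]
    # Dirichlet concentration parameters: one pseudo-count plus the evidence.
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)
    # Expected class probabilities under the Dirichlet distribution.
    probs = [a / strength for a in alpha]
    # Uncertainty approaches 1 when there is almost no evidence for any class.
    uncertainty = len(logits) / strength
    return alpha, probs, uncertainty


# A sample with strong evidence for one class versus one with almost none.
_, confident_probs, confident_u = dirichlet_evidence([10.0, -10.0, -10.0])
_, vague_probs, vague_u = dirichlet_evidence([-10.0, -10.0, -10.0])
print(confident_u < vague_u)
```

Unlike a softmax output, which always normalizes to a confident-looking distribution, this parameterization lets a sample with little total evidence report high uncertainty, which is the property the abstract relies on for out-of-distribution target samples.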
