Automatic Labeling to Generate Training Data for Online LiDAR-based Moving Object Segmentation


Jan 12, 2022

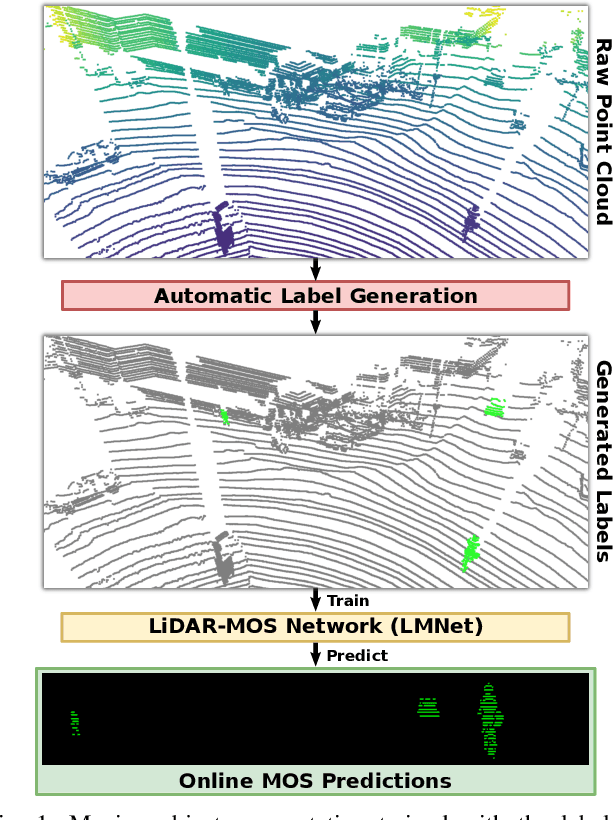

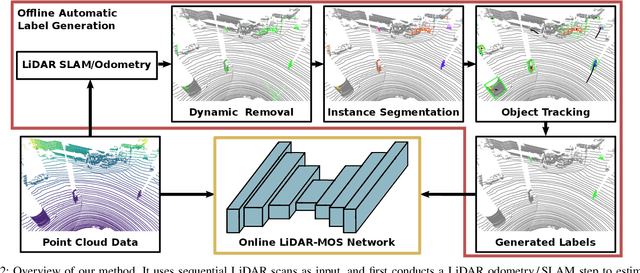

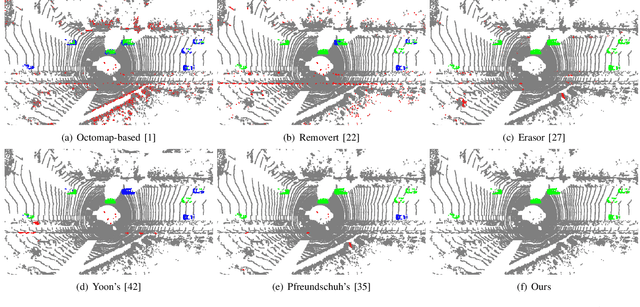

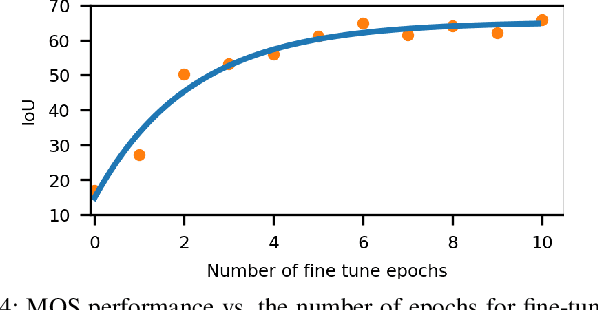

Understanding the scene is key for autonomously navigating vehicles, and the ability to segment the surroundings online into moving and non-moving objects is a central ingredient for this task. Deep learning-based methods are often used to perform moving object segmentation (MOS). The performance of these networks, however, depends strongly on the diversity and amount of labeled training data, which can be costly to obtain. In this paper, we propose an automatic data labeling pipeline for 3D LiDAR data that saves extensive manual labeling effort and improves the performance of existing learning-based MOS systems by automatically generating labeled training data. Our approach processes the data offline in batches. First, it uses occupancy-based dynamic object removal to coarsely detect possible dynamic objects. Second, it extracts segments among the proposals and tracks them using a Kalman filter. Based on the tracked trajectories, it labels objects that are actually moving, such as driving cars and pedestrians, as moving. In contrast, non-moving objects, e.g., parked cars, lamps, roads, or buildings, are labeled as static. We show that this approach labels LiDAR data effectively and compare our results to those of other label generation methods. We also train a deep neural network with our auto-generated labels and achieve performance similar to that of a network trained with manual labels on the same data, and even better performance when using additional datasets with labels generated by our approach. Furthermore, we evaluate our method on multiple datasets recorded with different sensors, and our experiments indicate that it can generate labels in diverse environments.
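The track-then-label step described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the constant-velocity motion model, the noise parameters `q` and `r`, the 10 Hz frame interval `dt`, the 1 m displacement threshold, and the function names `smooth_axis` and `label_track` are all assumptions chosen for illustration. The sketch filters the centroid positions of one tracked segment with a per-axis Kalman filter and labels the segment as moving if its filtered trajectory covers more than the threshold distance.

```python
from math import hypot


def smooth_axis(zs, dt=0.1, q=0.5, r=0.05):
    """Filter 1D positions with a constant-velocity Kalman filter.

    zs: measured positions along one axis, one per LiDAR frame.
    dt, q, r: frame interval, process noise, and measurement noise
    (all hypothetical values, not taken from the paper).
    """
    p, v = zs[0], 0.0                 # state: position and velocity
    p00, p01, p11 = 1.0, 0.0, 10.0    # symmetric 2x2 covariance entries
    filtered = [p]
    for z in zs[1:]:
        # Predict: x = F x, P = F P F^T + Q with F = [[1, dt], [0, 1]].
        p = p + v * dt
        p00 = p00 + 2.0 * dt * p01 + dt * dt * p11 + q * dt ** 3 / 3.0
        p01 = p01 + dt * p11 + q * dt ** 2 / 2.0
        p11 = p11 + q * dt
        # Update with a position-only measurement (H = [1, 0]).
        s = p00 + r
        k0, k1 = p00 / s, p01 / s
        residual = z - p
        p += k0 * residual
        v += k1 * residual
        # P = (I - K H) P, written out per entry using the old values.
        p00, p01, p11 = (1.0 - k0) * p00, (1.0 - k0) * p01, p11 - k1 * p01
        filtered.append(p)
    return filtered


def label_track(track, min_displacement=1.0):
    """Label one tracked segment as 'moving' or 'static'.

    track: list of (x, y) segment centroids in a fixed world frame.
    min_displacement: assumed threshold in meters on the distance between
    the first and last filtered centroid.
    """
    xs = smooth_axis([c[0] for c in track])
    ys = smooth_axis([c[1] for c in track])
    displacement = hypot(xs[-1] - xs[0], ys[-1] - ys[0])
    return "moving" if displacement > min_displacement else "static"


if __name__ == "__main__":
    # A parked car whose centroid jitters by 2 cm, and a car driving at 10 m/s.
    parked = [(5.0 + 0.02 * (-1) ** i, 2.0) for i in range(20)]
    driving = [(1.0 * i, 2.0) for i in range(20)]
    print(label_track(parked), label_track(driving))
```

Thresholding the net displacement of the filtered trajectory is only one plausible decision rule; it assumes the centroids are already expressed in a common world frame, so that ego-motion does not make static objects appear to move.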
