An Off-Policy Reinforcement Learning Algorithm Customized for Multi-Task Fusion in Large-Scale Recommender Systems

Paper and Code

Apr 19, 2024

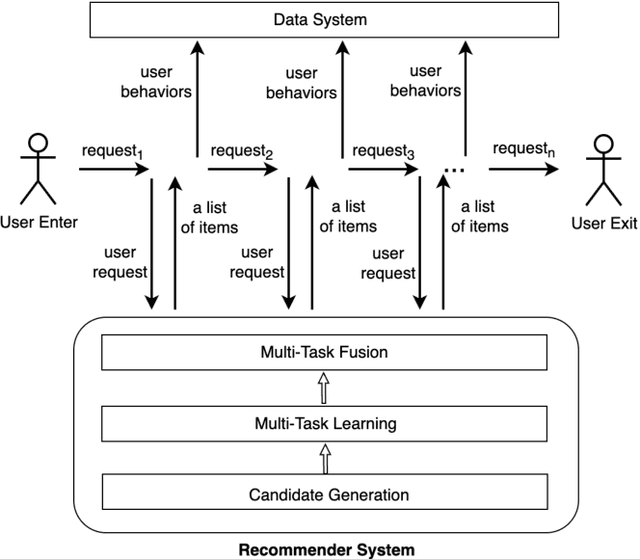

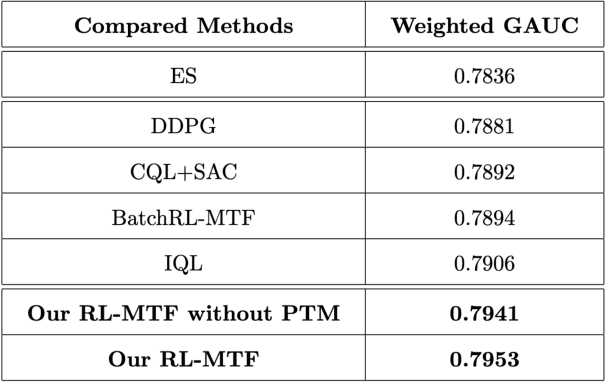

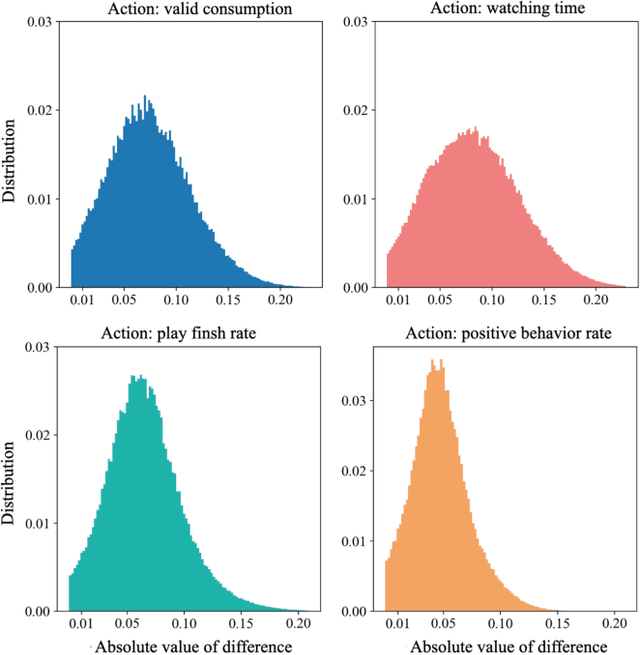

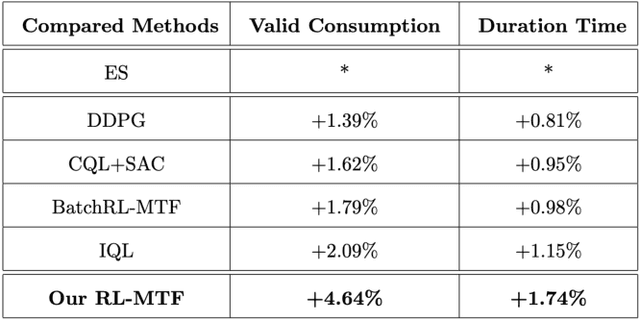

Recommender Systems (RSs) are widely used to provide personalized recommendation service. As the last critical stage of RSs, Multi-Task Fusion (MTF) is responsible for combining multiple scores outputted by Multi-Task Learning (MTL) into a final score to maximize user satisfaction, which determines the ultimate recommendation results. Recently, to optimize long-term user satisfaction within a recommendation session, Reinforcement Learning (RL) is used for MTF in the industry. However, the off-policy RL algorithms used for MTF so far have the following severe problems: 1) to avoid out-of-distribution (OOD) problem, their constraints are overly strict, which seriously damage their performance; 2) they are unaware of the exploration policy used for producing training data and never interact with real environment, so only suboptimal policy can be learned; 3) the traditional exploration policies are inefficient and hurt user experience. To solve the above problems, we propose a novel off-policy RL algorithm customized for MTF in large-scale RSs. Our RL-MTF algorithm integrates off-policy RL model with our online exploration policy to relax overstrict and complicated constraints, which significantly improves the performance of our RL model. We also design an extremely efficient exploration policy, which eliminates low-value exploration space and focuses on exploring potential high-value state-action pairs. Moreover, we adopt progressive training mode to further enhance our RL model's performance with the help of our exploration policy. We conduct extensive offline and online experiments in the short video channel of Tencent News. The results demonstrate that our RL-MTF model outperforms other models remarkably. Our RL-MTF model has been fully deployed in the short video channel of Tencent News for about one year. In addition, our solution has been used in other large-scale RSs in Tencent.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge