An Efficient Solution for Breast Tumor Segmentation and Classification in Ultrasound Images Using Deep Adversarial Learning

Paper and Code

Jul 01, 2019

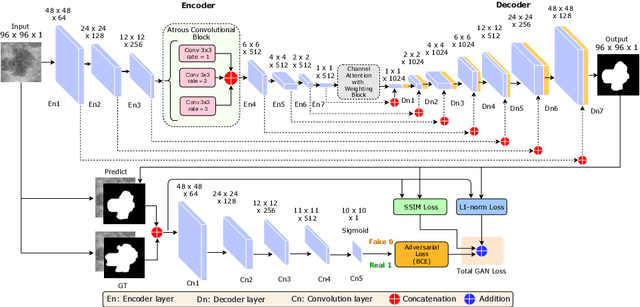

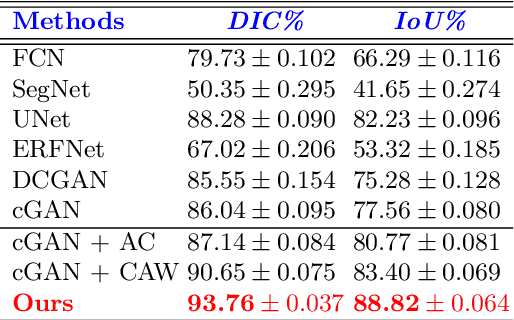

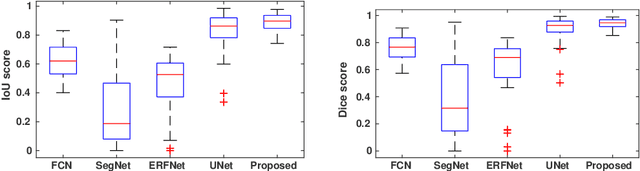

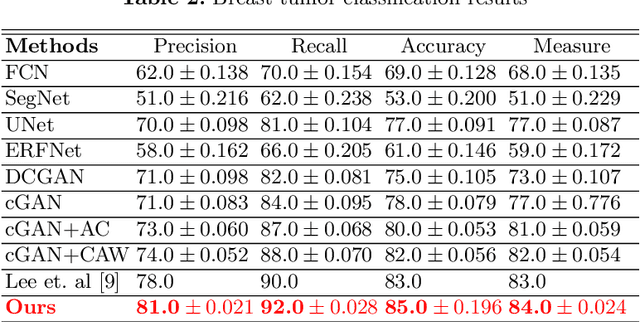

This paper proposes an efficient solution for tumor segmentation and classification in breast ultrasound (BUS) images. We propose to add an atrous convolution layer to the conditional generative adversarial network (cGAN) segmentation model to learn tumor features at different resolutions of BUS images. To automatically re-balance the relative impact of each of the highest level encoded features, we also propose to add a channel-wise weighting block in the network. In addition, the SSIM and L1-norm loss with the typical adversarial loss are used as a loss function to train the model. Our model outperforms the state-of-the-art segmentation models in terms of the Dice and IoU metrics, achieving top scores of 93.76% and 88.82%, respectively. In the classification stage, we show that few statistics features extracted from the shape of the boundaries of the predicted masks can properly discriminate between benign and malignant tumors with an accuracy of 85%$

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge