Advanced Dropout: A Model-free Methodology for Bayesian Dropout Optimization

Paper and Code

Oct 11, 2020

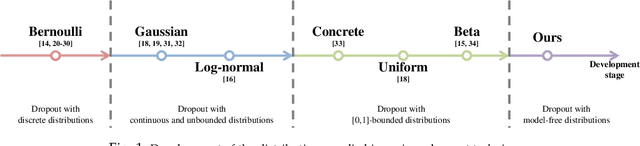

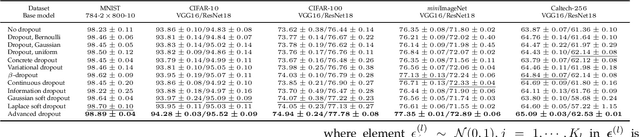

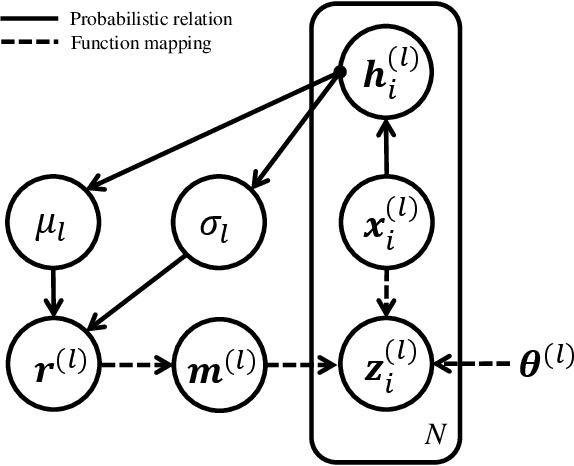

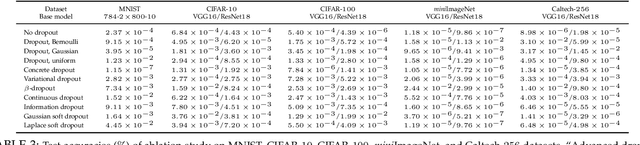

Because training data are often scarce, overfitting is ubiquitous in real-world applications of deep neural networks (DNNs). In this paper, we propose advanced dropout, a model-free methodology, to mitigate overfitting and improve the performance of DNNs. The advanced dropout technique applies a model-free and easily implemented distribution with a parametric prior and adaptively adjusts the dropout rate. Specifically, the distribution parameters are optimized by stochastic gradient variational Bayes (SGVB) inference so that the DNN can be trained end to end. We evaluate the effectiveness of advanced dropout against nine dropout techniques on five widely used computer vision datasets. Advanced dropout outperforms all the referred techniques by 0.83% on average across the datasets. An ablation study analyzes the effectiveness of each component. In addition, we discuss the convergence of the dropout rate and the ability to prevent overfitting in terms of classification performance. Moreover, we extend advanced dropout to uncertainty inference and network pruning, and find that it is superior to the corresponding referred methods. Advanced dropout improves classification accuracy by 4% in uncertainty inference, and by 0.2% and 0.5% when pruning more than 90% of nodes and 99.8% of parameters, respectively.
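To make the idea of a dropout mask with learnable distribution parameters concrete, the following is a minimal sketch and not the authors' implementation. It assumes, purely for illustration, that each mask value is a sigmoid of Gaussian noise with a location `mu` and a scale `sigma`; the abstract does not specify the distribution, and the parametric prior and the regularization term of the SGVB objective are omitted. All function names here are hypothetical. The sketch shows only the reparameterization step that makes a sampled mask differentiable with respect to its parameters, which is what allows them to be trained by gradient descent together with the network weights.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_mask(mu, sigma, rng):
    """Draw one soft mask value in (0, 1) by reparameterization.

    Assumed form (not taken from the paper): m = sigmoid(mu + sigma * eps).
    Returns the mask and its partial derivatives w.r.t. mu and sigma.
    """
    eps = rng.gauss(0.0, 1.0)      # parameter-free noise
    m = sigmoid(mu + sigma * eps)  # differentiable in mu and sigma
    dm_dz = m * (1.0 - m)          # derivative of the sigmoid
    return m, dm_dz, dm_dz * eps


def apply_dropout(activations, mu, sigma, rng):
    """Multiply each activation by an independently sampled mask value."""
    return [a * sample_mask(mu, sigma, rng)[0] for a in activations]


def mean_mask(mu, sigma, rng, n=20000):
    """Monte Carlo estimate of the expected mask value (implied keep rate)."""
    return sum(sample_mask(mu, sigma, rng)[0] for _ in range(n)) / n


rng = random.Random(0)
hidden = [0.5, -1.2, 2.0, 0.3]
dropped = apply_dropout(hidden, mu=0.0, sigma=1.0, rng=rng)
keep_rate = mean_mask(mu=0.0, sigma=1.0, rng=rng)
higher_keep_rate = mean_mask(mu=2.0, sigma=0.5, rng=rng)
```

In this sketch, raising `mu` shifts the mask toward 1 (less dropout) and lowering it shifts the mask toward 0, so the effective dropout rate is governed by parameters that an optimizer could adjust during training rather than by a fixed hyperparameter.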
